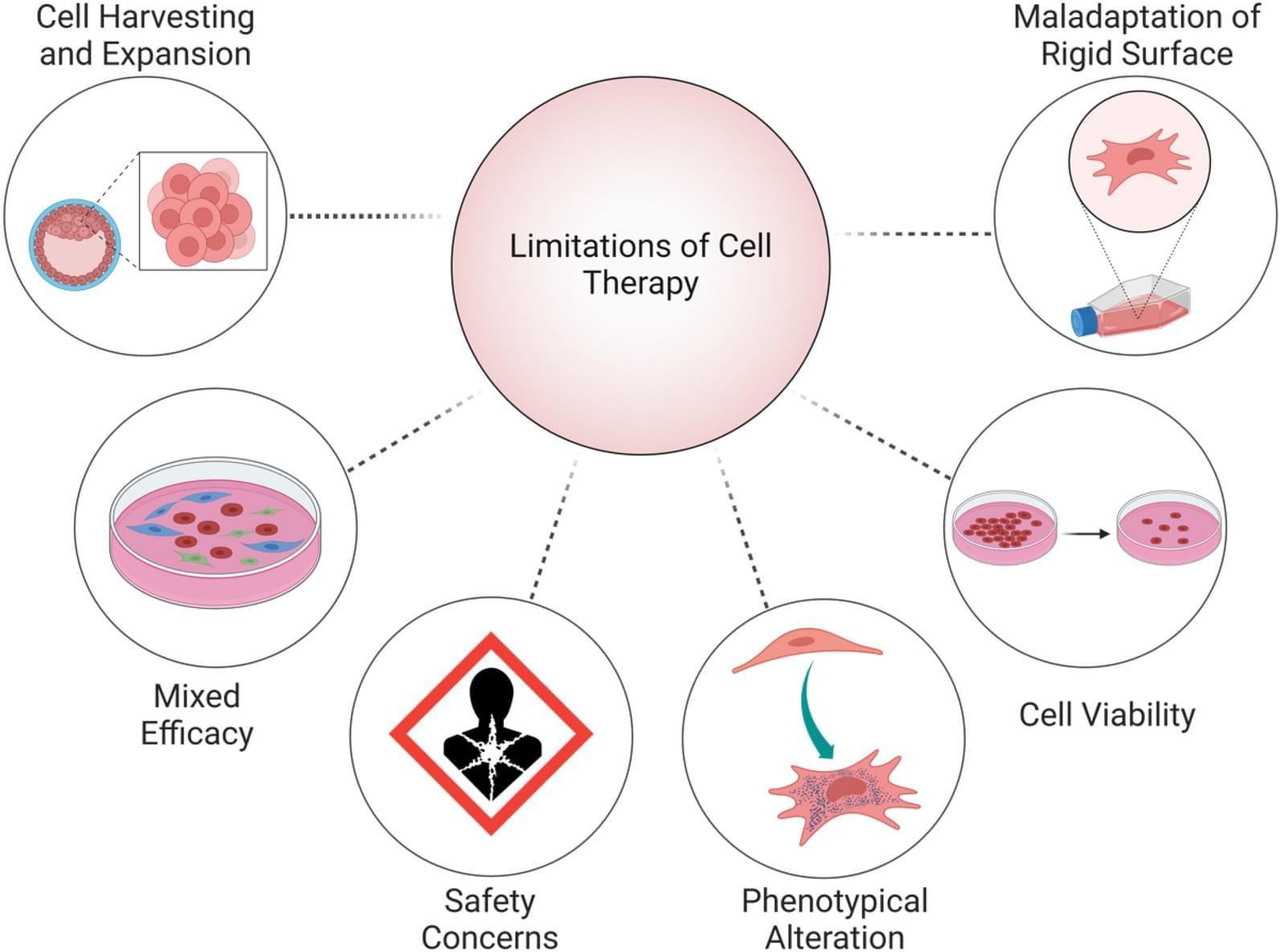

There is an urgent need for precision immunotherapy strategies that target tumor cells and immune cells simultaneously to enhance treatment efficacy. Identifying genes that act in both cancer cells and immune cells opens new possibilities for overcoming tumor resistance and improving patient survival.

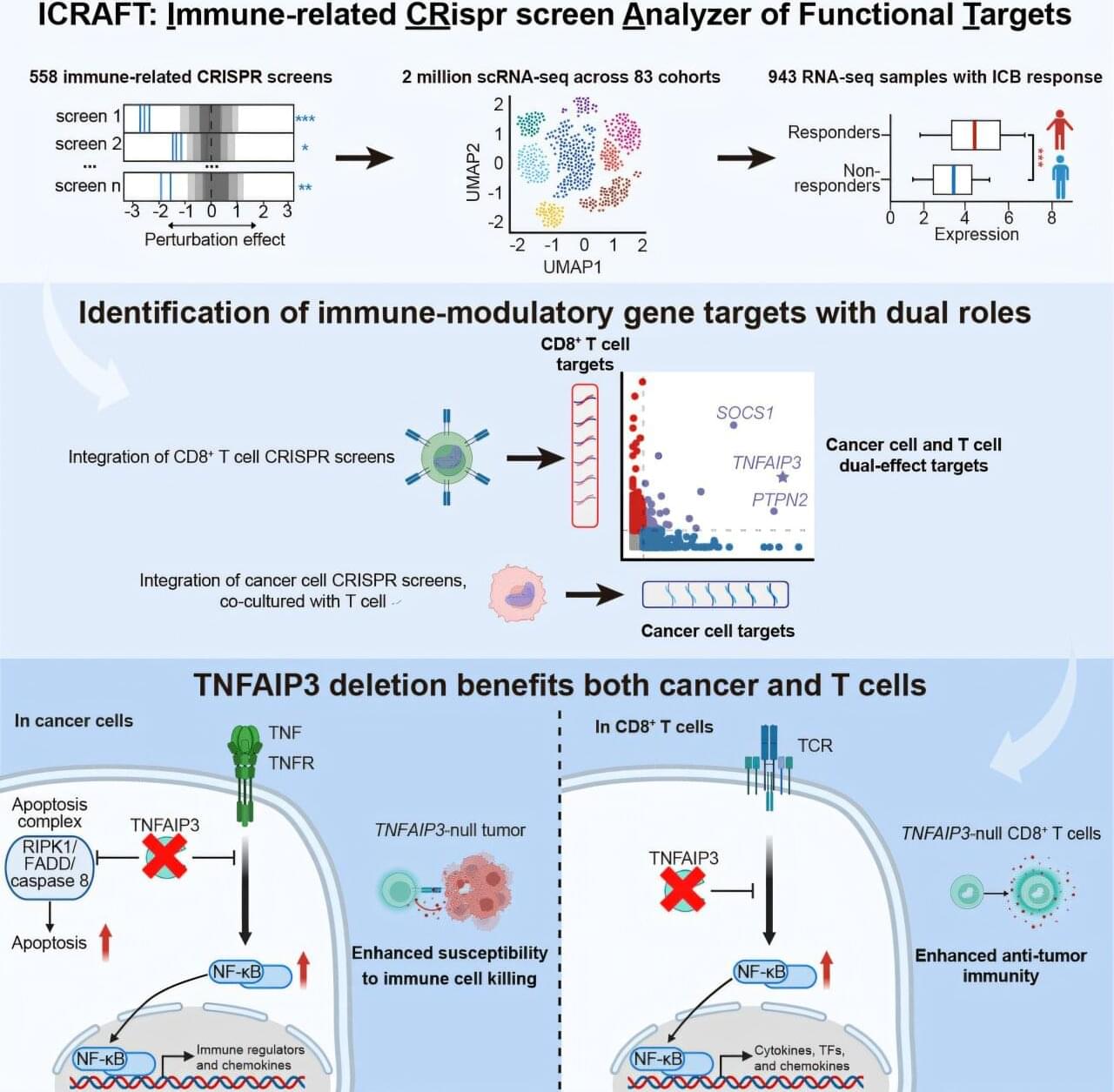

Professor Zeng Zexian’s team from the Center for Quantitative Biology at the Peking University Academy for Advanced Interdisciplinary Studies, in collaboration with the Peking University-Tsinghua University Joint Center for Life Sciences, has developed ICRAFT, a computational platform for identifying cancer immunotherapy targets. The study was published in *Immunity*.

ICRAFT integrates 558 CRISPR screening datasets, 2 million single-cell RNA sequencing datasets, and 943 RNA-Seq datasets from clinical immunotherapy samples.