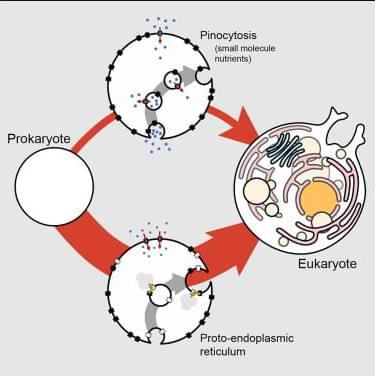

Scientists from The University of Manchester have changed our understanding of how cells in living organisms divide, a finding that could revise what students are taught at school. In a study published today in Science, the researchers challenge conventional wisdom that has been taught in schools for over 100 years.

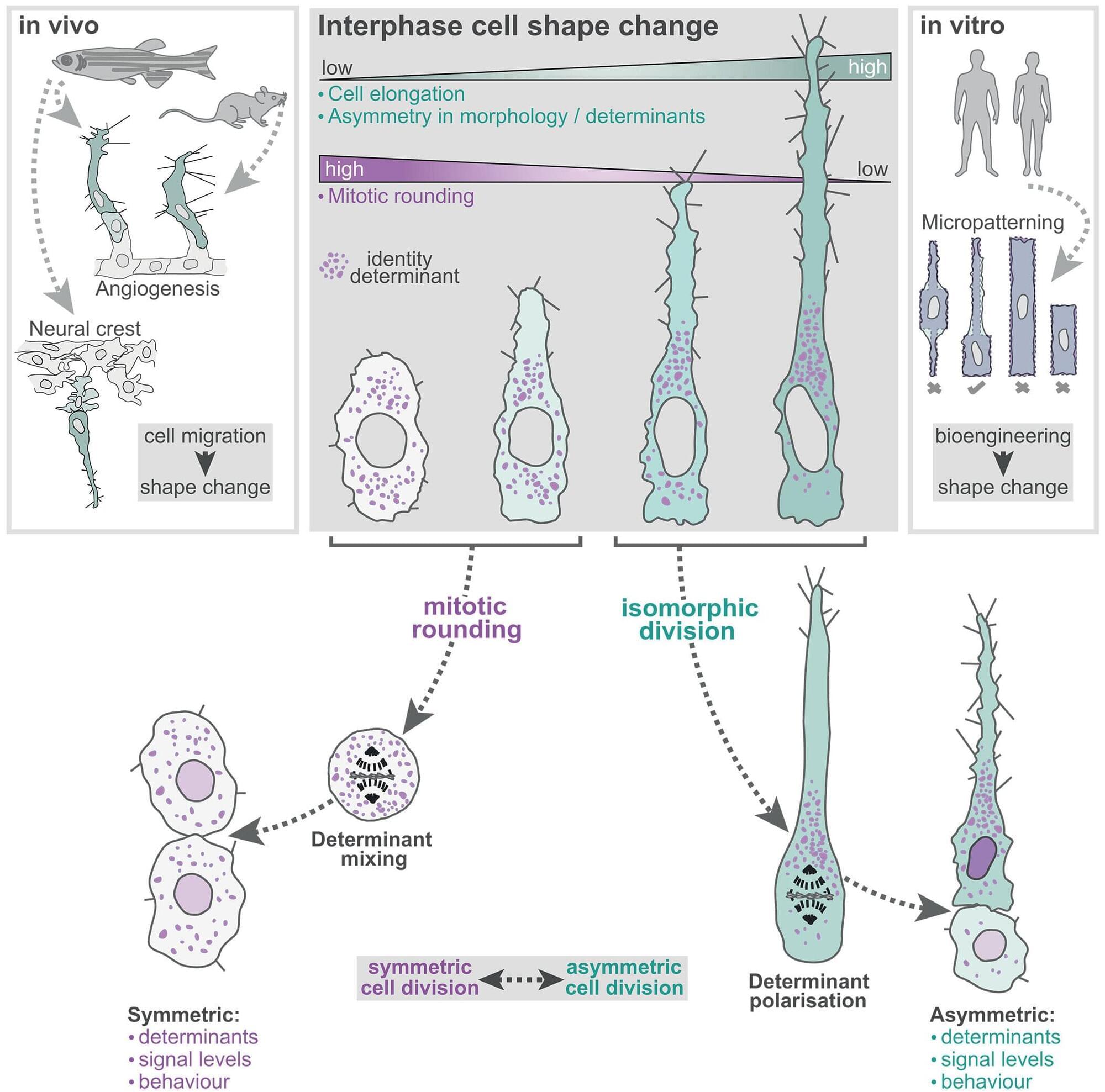

Students are currently taught that during cell division, a parent cell becomes spherical before splitting into two daughter cells of equal size and shape. However, the study reveals that cell rounding is not a universal feature of cell division and is often not how division works in the body.

Dividing cells, the researchers show, often don’t round up into sphere-like shapes. This lack of rounding breaks the symmetry of division, generating two daughter cells that differ from each other in both size and function, a process known as asymmetric division.