Some transcription factors are known as master regulators, and they can affect many different biochemical pathways and processes in cells.

Dimitar Sasselov — What is the Far Future of Intelligence in the Universe?

Our universe has been developing for about 14 billion years, but human-level intelligence, at least on Earth, has emerged in a remarkably short period of time, measured in tens or hundreds of thousands of years. What then is the future of intelligence? With the exponential growth of computing, will non-biological intelligence dominate?

Sasselov has been a professor at Harvard since 1998. He arrived at the CfA in 1990 as a Harvard-Smithsonian Center postdoctoral fellow. Between 1999 and 2003 he was the Head Tutor of the Astronomy Department.

Quantum biology: Your nose and house plant are experts at particle physics

Quantum physics governs the world of the very small and that of the very cold. Your dog cannot quantum-tunnel her way through the fence, nor will you see your cat exhibit wave-like properties. But physics is funny, and it is continually surprising us. Quantum physics is starting to show up in unexpected places. Indeed, it is at work in animals, plants, and our own bodies.

We once thought that biological systems are too warm, too wet, and too chaotic for quantum physics to play any part in how they work. But it now seems that life is employing feats of quantum physics every day in messy, real-world systems, including quantum tunneling, wave-particle duality, and even entanglement. To see how it all works, we can start by looking right inside our own noses.

The human nose can distinguish over one trillion smells. But how exactly the sense of smell works is still a mystery. When a molecule referred to as an odorant enters our nose, it binds to receptors. Initially, the prevailing theory held that these receptors used the shape of the odorants to differentiate smells. The so-called lock and key model suggests that when an odorant finds the right receptor, it fits into it and triggers a specific smell. But the lock and key model ran into trouble when tested. Subjects were able to tell two scents apart, even when the odorant molecules were identical in shape. Some other process must be at work.

Researchers observe rubber-like elasticity in liquid glycerol for the first time

Simple molecular liquids such as water or glycerol are of great importance for technical applications, in biology or even for understanding properties in the liquid state. Researchers at the Max Planck Institut für Struktur und Dynamik der Materie (MPSD) have now succeeded in observing liquid glycerol in a completely unexpected rubbery state.

In their article published in Proceedings of the National Academy of Sciences, the researchers report how they created rapidly expanding bubbles on the surface of the liquid in vacuum using a pulsed laser. However, the thin, micrometers-thick liquid envelope of the bubble did not behave like a viscous liquid dissipating deformation energy as expected, but like the elastic envelope of a rubber toy balloon, which can store and release elastic energy.

This is the first time that elasticity dominating the flow behavior has been observed in a Newtonian liquid like glycerol. Its existence is difficult to reconcile with common ideas about the interactions in liquid glycerol and motivates the search for more comprehensive descriptions. Surprisingly, the elasticity persists over timescales as long as several microseconds, so it could be important for very rapid engineering applications such as micrometer-confined flows under high pressure. Yet it remains unsettled whether this behavior is a specific property of liquid glycerol or a phenomenon that occurs in many molecular liquids under similar conditions but has not been observed so far.

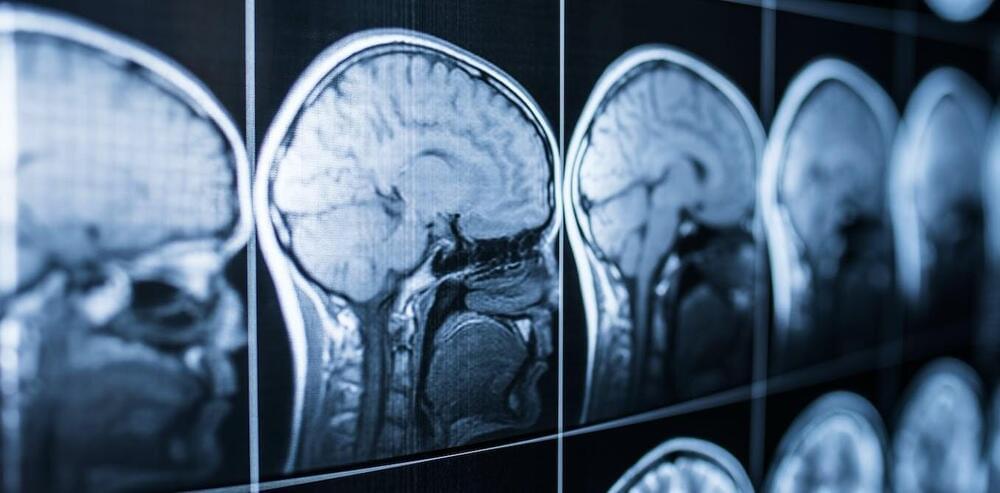

How uploading our minds to a computer might become possible

The idea that our mind could live on in another form after our physical body dies has been a recurring theme in science fiction since the 1950s. Recent television series such as Black Mirror and Upload, as well as some games, demonstrate our continued fascination with this idea. The concept is known as mind uploading.

Recent developments in science and technology are taking us closer to a time when mind uploading could graduate from science fiction to reality.

In 2016, BBC Horizon screened a programme called The Immortalist, in which a Russian millionaire unveiled his plans to work with neuroscientists, robot builders and other experts to create technology that would allow us to upload our minds to a computer in order to live forever.

At the time, he confidently predicted that this would be achieved by 2045. This seems unlikely, yet we are making small but significant steps towards a better understanding of the human brain, and potentially the ability to emulate, or reproduce, it.

Technology Roadmap for Flexible Sensors

Humans rely increasingly on sensors to address grand challenges and to improve quality of life in the era of digitalization and big data. For ubiquitous sensing, flexible sensors are developed to overcome the limitations of conventional rigid counterparts. Despite rapid advancement in bench-side research over the last decade, the market adoption of flexible sensors remains limited. To ease and to expedite their deployment, here, we identify bottlenecks hindering the maturation of flexible sensors and propose promising solutions. We first analyze challenges in achieving satisfactory sensing performance for real-world applications and then summarize issues in compatible sensor-biology interfaces, followed by brief discussions on powering and connecting sensor networks.

Alan Turing and the Limits of Computation

Note: June 23 is Alan Turing’s birth anniversary.

Alan Turing wore many scientific hats in his lifetime: a code-breaker in World War II, a prophetic figure of artificial intelligence (AI), a pioneer of theoretical biology, and a founding figure of theoretical computer science. While the first of these roles continues to catch the fancy of popular culture, his fundamental contribution to the development of computing as a mathematical discipline is possibly where his significant scientific impact persists to date.

In a first, scientists use AI to create brand new enzymes

In a scientific first, researchers have used machine learning-powered AI to design de novo enzymes — never-before-existing proteins that accelerate biochemical reactions in living organisms. Enzymes drive a wide range of critical processes, from digestion to building muscle to breathing.

A team led by the University of Washington’s Institute for Protein Design, along with colleagues at UCLA and China’s Xi’an Jiaotong University, used their AI engine to create new enzymes of a kind called luciferases. Luciferases — as their name implies — catalyze chemical reactions that emit light; they’re what give fireflies their flare.

“Living organisms are remarkable chemists,” David Baker, a professor of biochemistry at UW and the study’s senior author, said.

“Hydration Solids”: The New Class of Matter Shaking Up Science

For many years, the fields of physics and chemistry have held the belief that the properties of solid materials are fundamentally determined by the atoms and molecules they consist of. For instance, the crystalline nature of salt is credited to the ionic bond formed between sodium and chloride ions. Similarly, metals such as iron or copper owe their robustness to the metallic bonds between their respective atoms, and the elasticity of rubbers stems from the flexible bonds in the polymers that form them. This principle also applies to substances like fungi, bacteria, and wood.

Or so the story goes.

A new paper recently published in Nature upends that paradigm, and argues that the character of many biological materials is actually created by the water that permeates these materials. Water gives rise to a solid and goes on to define the properties of that solid, all the while maintaining its liquid characteristics.