Even the most highly trained and experienced person sometimes needs a hand.

As I begin to understand the future of the internet and its evolving technology, I believe this author has it right and has cleverly synthesized a coherent picture of a sustainable future built on NFTs, VR, AR, and the Metaverse (Web 3.0). #Metaverse #NFT #web3 #VR #AR

NFTs are here to stay and will be foundational to our new world.

It’s no secret that AI is everywhere, yet it’s not always clear when we’re interacting with it, let alone which specific techniques are at play. But one subset is easy to recognize: If the experience is intelligent and involves photos or videos, or is visual in any way, computer vision is likely working behind the scenes.

Computer vision is a subfield of AI, specifically of machine learning. If AI allows machines to “think,” then computer vision is what allows them to “see.” More technically, it enables machines to recognize, make sense of, and respond to visual information like photos, videos, and other visual inputs.

Over the last few years, computer vision has become a major driver of AI. The technique is used widely in industries like manufacturing, ecommerce, agriculture, automotive, and medicine, to name a few. It powers everything from interactive Snapchat lenses to sports broadcasts, AR-powered shopping, medical analysis, and autonomous driving capabilities. And by 2022 the global market for the subfield is projected to reach $48.6 billion annually, up from just $6.6 billion in 2015.
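As a rough check on how steep that projection is, the two market figures imply a compound annual growth rate (CAGR) of about 33%. This assumes smooth compounding over the seven years from 2015 to 2022, which is a simplification and not part of the original projection:

$$
\text{CAGR} = \left(\frac{48.6}{6.6}\right)^{1/(2022-2015)} - 1 \approx (7.36)^{1/7} - 1 \approx 0.33
$$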

We speak to AR experts about the future of the Oculus Quest.

Oculus is getting into AR, and it has big repercussions for the future direction of the company and its popular line of VR headsets – especially the eventual Oculus Quest 3.

The Facebook-owned company recently announced its intention to open up its Oculus platform to augmented reality developers, allowing them to use the Oculus Quest 2 headset to host AR games and apps rather than simply VR titles – setting the scene for an explosion of both consumer and business applications on the popular standalone headset.

Lambda, an AI infrastructure company, this week announced it raised $15 million in a venture funding round from 1517, Gradient Ventures, Razer, Bloomberg Beta, Georges Harik, and others, plus a $9.5 million debt facility. The $24.5 million investment brings the company’s total raised to $28.5 million, following an earlier $4 million seed tranche.

In 2013, San Francisco, California-based Lambda controversially launched a facial recognition API for developers working on apps for Google Glass, Google’s ill-fated heads-up augmented reality display. The API — which soon expanded to other platforms — enabled apps to do things like “remember this face” and “find your friends in a crowd,” Lambda CEO Stephen Balaban told TechCrunch at the time. The API has been used by thousands of developers and was, at least at one point, seeing over 5 million API calls per month.

Since then, however, Lambda has pivoted to selling hardware systems designed for AI, machine learning, and deep learning applications. Among these are the TensorBook, a laptop with a dedicated GPU, and a workstation product with up to four desktop-class GPUs for AI training. Lambda also offers servers, including one designed to be shared between teams and a server cluster, called Echelon, that Balaban describes as “datacenter-scale.”

The story of humanity is one of progress: slow, disjointed progress from humanity's origins, linear progress after the agricultural revolution, and exponential, almost unfathomable progress since the industrial revolution.

This accelerating rate of progress comes from the compounding effect of technology: each advance enables countless more, from 3D printing, autonomous vehicles, blockchain, batteries, remote surgery, virtual and augmented reality, and robotics, and the list goes on. These technologies will in turn drive sweeping changes in society, from energy generation and monetary systems to space colonization, automation, and much more!

This trajectory of progress is now leading us into a period "characterized by a fusion of technologies that is blurring the lines between the physical, digital and biological spheres," which many call the technological revolution or the Fourth Industrial Revolution. In it, everything will change, from the underlying structure and fundamental institutions of society to how we live our day-to-day lives.

EcoTech Recycling’s patented thermodynamic process turns waste rubber into a nontoxic synthetic material for new tires, auto parts and insulation.

If you’ve ever seen a tire graveyard piled high with trashed rubber, you can easily understand that Israeli company EcoTech Recycling has a green gem of an idea.

EcoTech’s nontoxic process produces a unique material, Active Rubber (AR), from end-of-life tires. With 1.6 billion tires manufactured annually, and 290 million tires discarded each year in the United States alone, tires are the world’s largest source of waste rubber.

“Rubber is a valuable commodity, and we are making it reusable,” says CEO and President Gideon Drori.

Deep-tech healthcare & energy investments for a sustainable future: Dr. Anil Achyuta, Investment Director / Founding Member, TDK Ventures

Dr. Anil Achyuta is an Investment Director and a Founding Member at TDK Ventures, which is a deep-tech corporate venture fund of TDK Corporation, the Japanese multinational electronics company that manufactures electronic materials, electronic components, and recording and data-storage media.

Anil is passionate about the energy and healthcare sectors because he believes they are the most impactful areas for building a sustainable future, a mission directly in line with TDK Ventures’ goal.

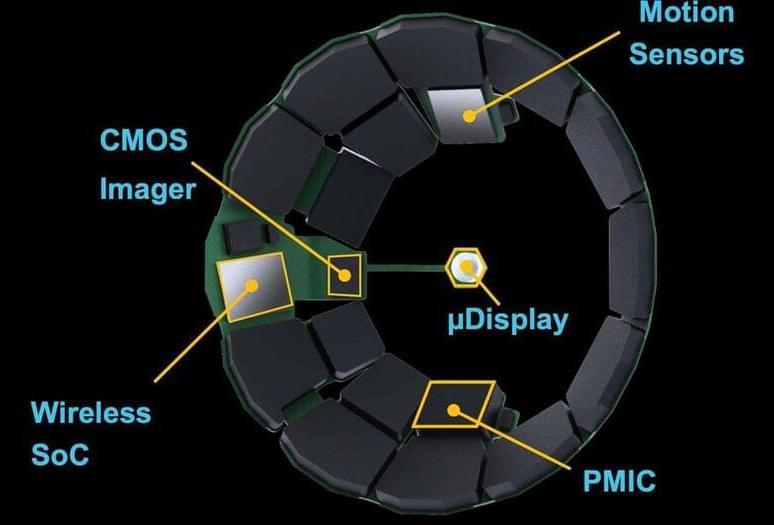

At TDK Ventures, Anil has reviewed over 1,050 start-ups and invested in:

1. Autoflight, an electric vertical take-off and landing company
2. Genetesis, a magnetic imaging-based cardiac diagnostics company
3. Origin, a 3D printing mass-manufacturing company
4. Exo, a handheld 3D ultrasound imaging company
5. GenCell, an ammonia-to-energy hydrogen fuel cell company
6. Mojo Vision, an augmented reality contact lens company
7. Battery Resourcers, a direct-to-cathode lithium-ion battery recycling company

From his seven investments, Anil has secured two exits: GenCell went public on the Tel Aviv Stock Exchange, and Origin was acquired for $100M by Stratasys, the #1 3D printing company in the world.