Metalens for AR and VR.

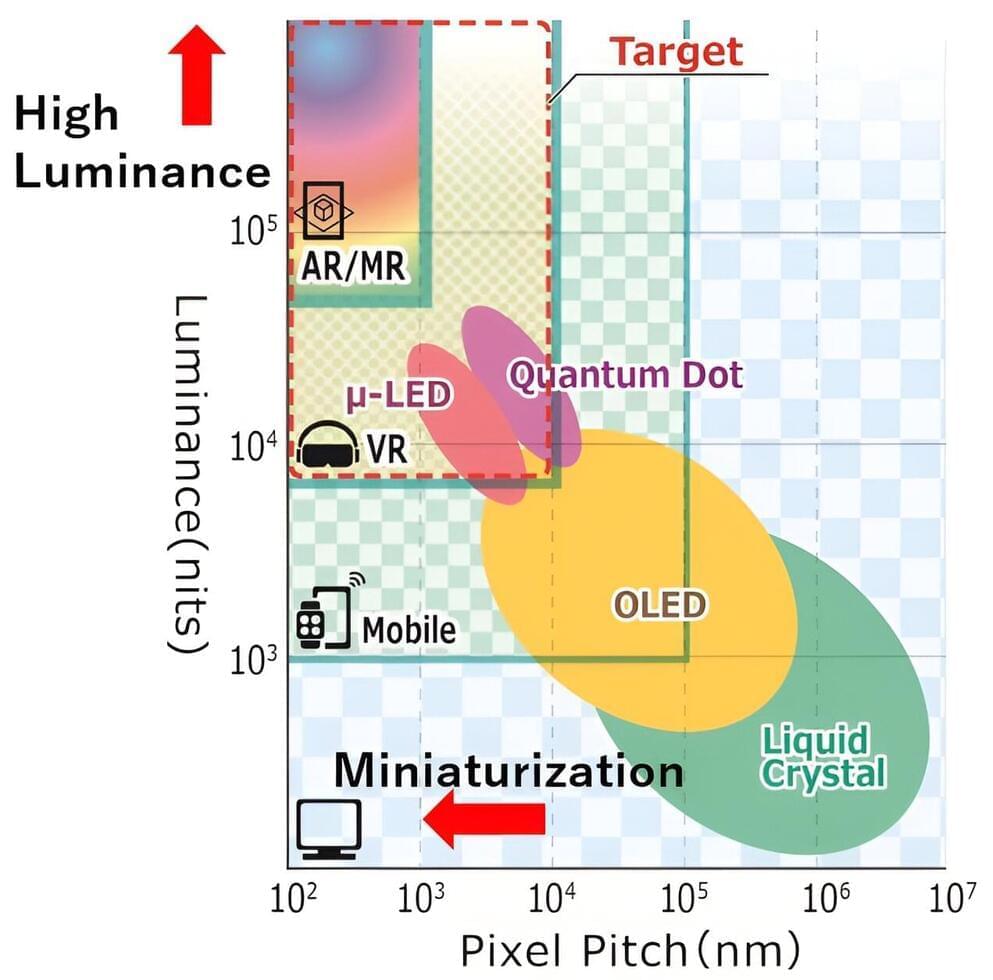

BEIJING, Sept. 8, 2023 /PRNewswire/ -- WiMi Hologram Cloud Inc. (NASDAQ: WIMI) (“WiMi” or the “Company”), a leading global Hologram Augmented Reality (“AR”) Technology provider, today announced the development of a metasurface eyepiece for augmented reality, based on metasurfaces composed of artificially fabricated subwavelength structures. The metasurface eyepiece employs a special optical design and an engineered anisotropic optical response to achieve an ultra-wide field of view (FOV), full-color imaging, and a high-resolution near-eye display.

At the heart of WiMi’s eyepiece is a see-through metalens with a high numerical aperture (NA), a large area, and broadband characteristics. Its anisotropic optical response allows it to perform two different optical functions simultaneously. First, it acts as an imaging lens for virtual information. Second, it transmits light, serving as transparent glass for viewing the real-world scene. This design allows the transparent metalens to be placed directly in front of the eye without additional optics, resulting in a wider FOV.
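To illustrate why a high NA and a large aperture go together with a wide viewing angle, here is a minimal geometric sketch. It assumes an ideal thin lens in air and treats the cone that the lens aperture subtends at its focal point as a rough proxy for FOV. The function names and the 20 mm and 10 mm values are hypothetical, chosen only for illustration; they are not specifications from the announcement.

```python
import math


def numerical_aperture(diameter_mm, focal_length_mm, n=1.0):
    """NA = n * sin(theta), where theta is the half-angle of the cone
    subtended by the lens aperture at the focal point (thin-lens assumption)."""
    if diameter_mm <= 0 or focal_length_mm <= 0:
        raise ValueError("diameter and focal length must be positive")
    half_angle = math.atan(diameter_mm / (2.0 * focal_length_mm))
    return n * math.sin(half_angle)


def full_cone_angle_deg(na, n=1.0):
    """Full cone angle (degrees) corresponding to a given NA in a medium of index n."""
    if not 0 < na <= n:
        raise ValueError("NA must satisfy 0 < NA <= n")
    return 2.0 * math.degrees(math.asin(na / n))


# Hypothetical example values (assumptions, not from the announcement):
# a 20 mm aperture with a 10 mm focal length.
na = numerical_aperture(diameter_mm=20.0, focal_length_mm=10.0)
angle = full_cone_angle_deg(na)
print(f"NA = {na:.3f}, full cone angle = {angle:.1f} degrees")
```

Under these assumptions, enlarging the aperture or shortening the focal length raises the NA and widens the cone, which is consistent with the statement that a large-area, high-NA lens placed directly in front of the eye can support a wider FOV. A real eyepiece design would also have to account for eye relief, aberrations, and the display geometry.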

The metalens is fabricated using nanoimprinting technology, which can produce large-area metalenses with subwavelength structures. First, a mould or template with the desired structure is prepared. The mould is then brought into contact with a transparent substrate, and the nanoscale structure is transferred by applying pressure and heat. This process replicates the subwavelength structure onto the transparent substrate, forming the metalens.