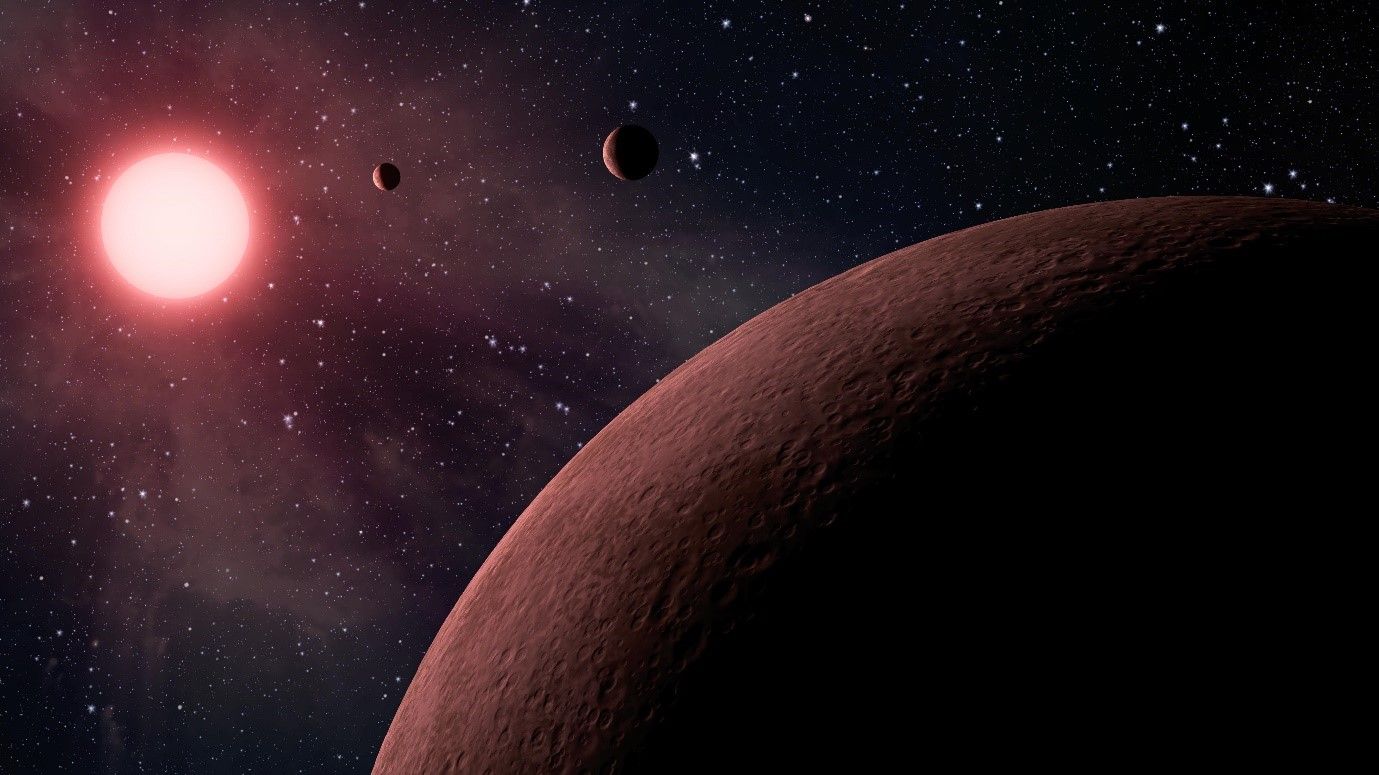

One of the greatest fears of the world’s space agencies is a real-life Andromeda Strain, the chilling movie in which a US research satellite carrying a deadly extraterrestrial microorganism crashes into a small town in Arizona. A group of top scientists is hurriedly assembled in a bid to identify and contain the lethal stowaway.