The latest generative AI models, such as OpenAI's GPT-4 and Google's Gemini 2.5, require not only high memory bandwidth but also large memory capacity. This is why companies that operate generative AI clouds, such as Microsoft and Google, buy hundreds of thousands of NVIDIA GPUs.

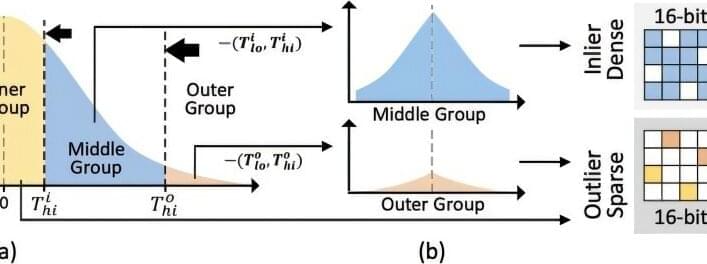

To address the core challenges of building such high-performance AI infrastructure, Korean researchers have developed an NPU (neural processing unit) core technology that improves the inference performance of generative AI models by more than 60% on average while consuming approximately 44% less power than the latest GPUs.

Professor Jongse Park's research team at the KAIST School of Computing, in collaboration with HyperAccel Inc., developed this high-performance, low-power NPU core technology specifically for generative AI cloud services such as ChatGPT.