The “CSI Effect” has been described as jurors' heightened expectation that forensic evidence presented in court will be instantaneous and unequivocal, because that is how it is often portrayed for dramatic effect in television programs and movies. In reality, forensic science, while exact in some respects, is just as susceptible to the vagaries of measurement and analysis as any other part of science. Crime scene investigators often spend seemingly inordinate amounts of time gathering and assessing evidence, and then present it as probabilities rather than the kind of definitive result expected in a courtroom filled with actors rather than real people.

Forensics is on the cusp of a third revolution in its relatively young lifetime. The first revolution, under the brilliant but complicated mind of J. Edgar Hoover, brought science to the field and was largely responsible for the rise of criminal justice as we know it today. The second, half a century later, saw the introduction of computers and related technologies in mainstream forensics and created the subfield of digital forensics.

We are now hurtling headlong into the third revolution with the introduction of Artificial Intelligence (AI) – intelligence exhibited by machines that are trained to learn and solve problems. This is not just an extension of prior technologies. AI holds the potential to dramatically change the field in a variety of ways, from reducing bias in investigations to challenging what evidence is considered admissible.

AI is no longer science fiction. A 2016 survey conducted by the National Business Research Institute (NBRI) found that 38% of enterprises are already using AI technologies and 62% will use AI technologies by 2018. “The availability of large volumes of data—plus new algorithms and more computing power—are behind the recent success of deep learning, finally pulling AI out of its long winter,” writes Gil Press, contributor to Forbes.com.

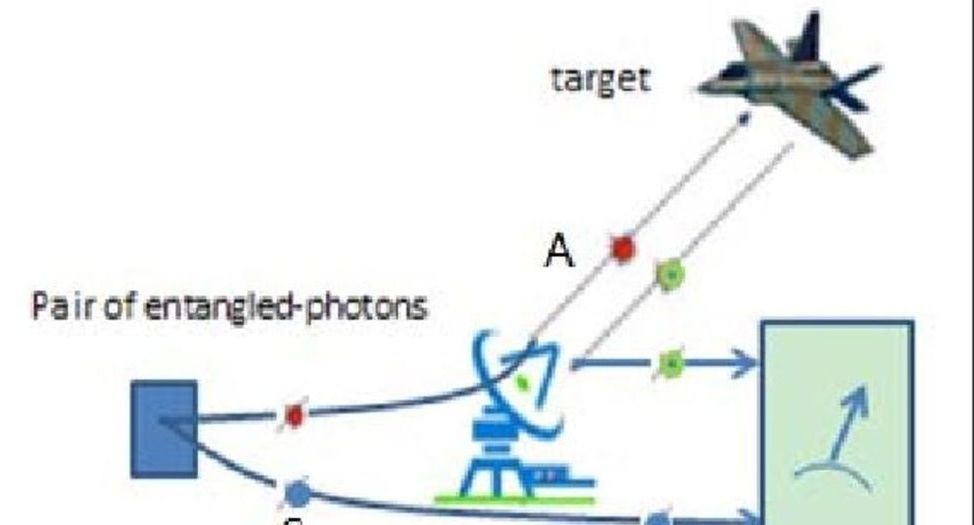

A prototype quantum radar that has the potential to detect objects which are invisible to conventional systems has been developed by an international research team led by a quantum information scientist at the University of York.

The new breed of radar is a hybrid system that uses quantum correlation between microwave and optical beams to detect objects of low reflectivity such as cancer cells or aircraft with a stealth capability. Because the quantum radar operates at much lower energies than conventional systems, it has the long-term potential for a range of applications in biomedicine including non-invasive NMR scans.

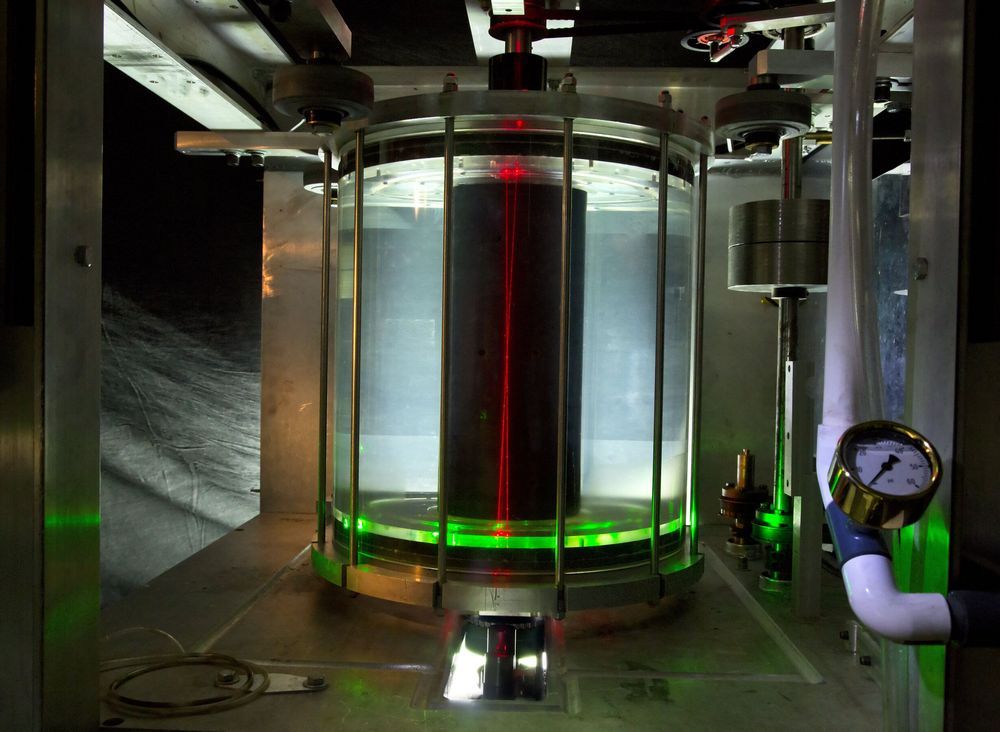

The research team led by Dr Stefano Pirandola, of the University’s Department of Computer Science and the York Centre for Quantum Technologies, found that a special converter — a double-cavity device that couples the microwave beam to an optical beam using a nano-mechanical oscillator — was the key to the new system.

How have stars and planets developed from the clouds of dust and gas that once filled the cosmos? A novel experiment at the U.S. Department of Energy’s (DOE) Princeton Plasma Physics Laboratory (PPPL) has demonstrated the validity of a widespread theory known as “magnetorotational instability,” or MRI, that seeks to explain the formation of heavenly bodies.

The theory holds that MRI allows accretion disks (clouds of dust, gas, and plasma that swirl around growing stars and planets as well as black holes) to collapse into them. According to the theory, this collapse happens because turbulent swirling plasma, technically known as “Keplerian flows,” gradually grows unstable within a disk. The instability causes angular momentum, the property that keeps orbiting planets from being drawn into the sun, to decrease in inner sections of the disk, which then fall into celestial bodies.

Unlike orbiting planets, the matter in dense and crowded accretion disks may experience forces such as friction that cause the disks to lose angular momentum and be drawn into the objects they swirl around. However, such forces cannot fully explain how quickly matter must fall into larger objects for planets and stars to form on a reasonable timescale.
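To make the angular-momentum argument concrete, here is a short sketch for an idealized thin disk orbiting a central point mass. The symbols $M$ (central mass), $r$ (orbital radius), $\Omega$ (angular velocity), and $\ell$ (angular momentum per unit mass) are introduced here for illustration and do not come from the article. For a circular Keplerian orbit:

$$
\Omega(r) = \sqrt{\frac{GM}{r^{3}}}, \qquad \ell(r) = r^{2}\,\Omega(r) = \sqrt{GM\,r}
$$

Under these assumptions $\ell$ grows with radius, so matter moving inward from $r_1$ to $r_2 < r_1$ has to shed $\ell(r_1) - \ell(r_2)$ of angular momentum per unit mass. In the standard textbook treatment, a weakly magnetized disk is unstable to MRI when the angular velocity decreases outward:

$$
\frac{d\Omega^{2}}{dr} < 0
$$

A Keplerian profile satisfies this condition, since $\Omega^{2} \propto r^{-3}$, even though the same flow is stable by the purely hydrodynamic criterion $d\ell^{2}/dr > 0$. That contrast is one way to see why friction-like forces alone fall short and why a magnetic instability is invoked.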

Lawmakers and experts are sounding the alarm about “deepfakes,” forged videos that look remarkably real, warning they will be the next phase in disinformation campaigns.

The manipulated videos make it difficult to distinguish between fact and fiction, as artificial intelligence technology produces fake content that looks increasingly real.

The issue has the attention of lawmakers from both parties on Capitol Hill.

There’s a renewed interest right now in Earth’s magnetic poles – specifically, whether or not they’re about to flip, and what may happen. The consequences of this seemingly rapid geomagnetic backflip may sound a little ominous, but don’t worry: we’re not sure when the next reversal will happen, and even when it does, the risks aren’t likely to be as scary as you may think.

Let’s start with the basics.

As Earth’s liquid, iron-rich outer core gradually cools, it sloshes around through colossal convection currents, which are also somewhat warped by Earth’s own rotation. Thanks to a quirk of physics known as the dynamo theory, this generates a powerful magnetic field, with a north and south end.

Hands are old news. VR navigation, control and selection are best done with the eyes; at least, that’s what HTC Vive is banking on with the upcoming HTC Vive Pro Eye, a VR headset with integrated Tobii eye tracking that initially targets businesses. I tried out a beta version of the feature myself on MLB Home Run Derby VR. It’s still in development and, thus, was a little wonky, but I can’t deny its cool factor.

HTC announced the new headset Tuesday at the CES tech show in Las Vegas. The idea is that building eye tracking into the headset enables better use cases, such as enhanced training programs. HTC also says users can expect faster VR interactions and more efficient use of the PC’s CPU and GPU.

Of course, before my peepers could be tracked, I needed to calibrate the headset for my special eyes. It was quite simple: after I adjusted the interpupillary distance appropriately, the headset had me stare at a blue dot that bounced around my field of view (FOV). The whole thing took less than a minute.