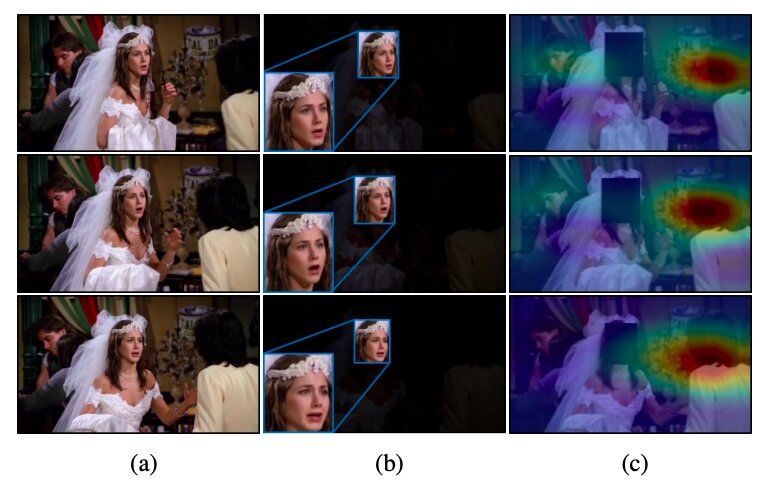

A team of researchers at Yonsei University and École Polytechnique Fédérale de Lausanne (EPFL) has recently developed a new technique that recognizes emotions by analyzing people’s faces in images together with contextual features. They outlined their deep learning-based architecture, called CAER-Net, in a paper pre-published on arXiv.

For several years, researchers worldwide have been trying to develop tools for automatically detecting human emotions by analyzing images, videos or audio clips. These tools could have numerous applications, for instance, improving robot-human interactions or helping doctors to identify signs of mental or neural disorders (e.g., based on atypical speech patterns, facial features, etc.).

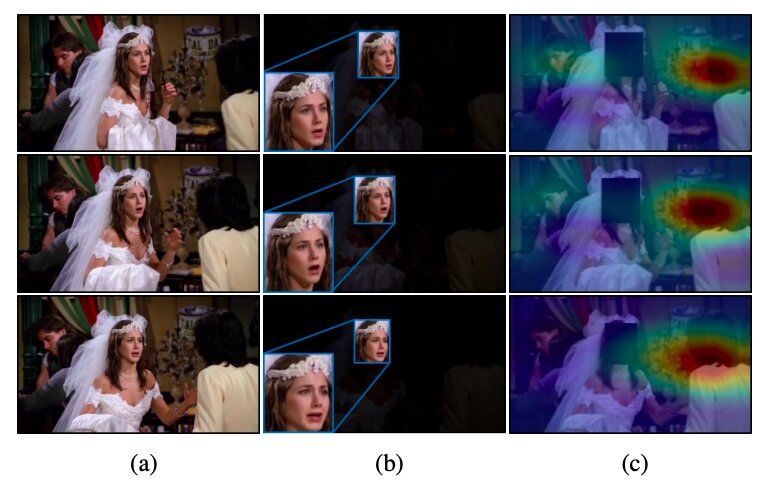

So far, most techniques for recognizing emotions in images have been based on the analysis of people’s facial expressions, essentially assuming that these expressions best convey humans’ emotional responses. As a result, most datasets for training and evaluating emotion recognition tools (e.g., the AFEW and FER2013 datasets) contain only cropped images of human faces.