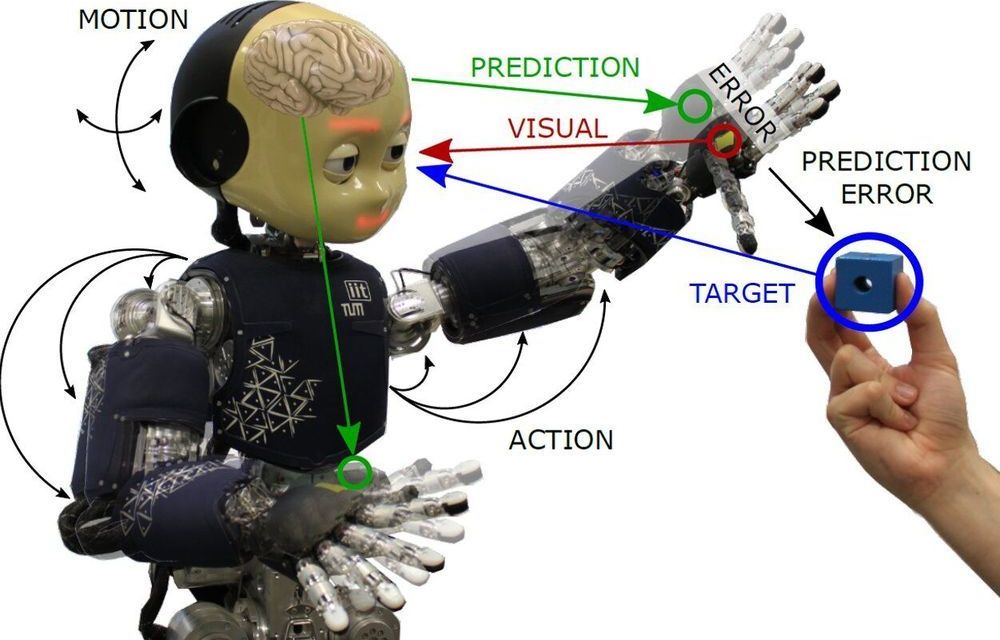

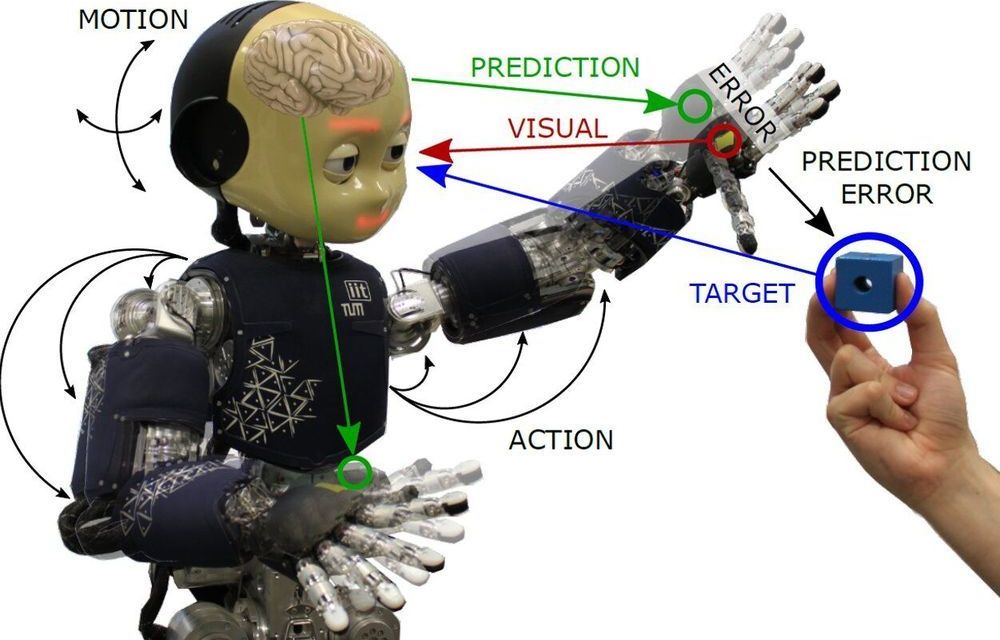

A key challenge for robotics researchers is developing systems that can interact with humans and their surrounding environment in situations that involve varying degrees of uncertainty. In fact, while humans can continuously learn from their experiences and perceive their body as a whole as they interact with the world, robots do not yet have these capabilities.

Researchers at the Technical University of Munich have recently carried out an ambitious study in which they tried to apply “active inference,” a theoretical construct that describes the ability to unite perception and action, to a humanoid robot. Their study is part of a broader EU-funded project called SELFCEPTION, which bridges robotics and cognitive psychology with the aim of developing more perceptive robots.

“The original research question that triggered this work was to provide humanoid robots and artificial agents in general with the capacity to perceive their body as humans do,” Pablo Lanillos, one of the researchers who carried out the study, told TechXplore. “The main goal was to improve their capabilities to interact under uncertainty. Under the umbrella of the Selfception.eu Marie Skłodowska-Curie project, we initially defined a roadmap to include some characteristics of human perception and action into robots.”