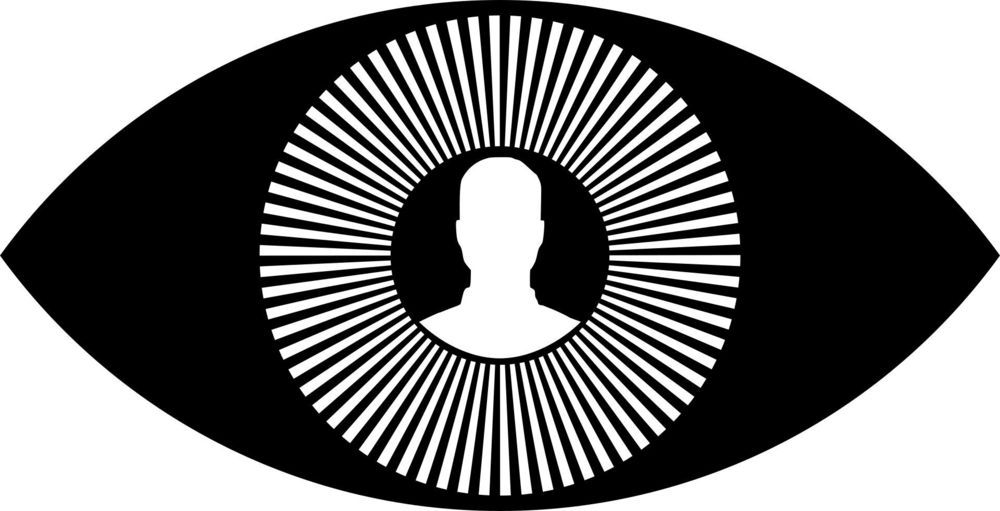

Science fiction writers envisioned the technology decades ago, and startups have been working on developing an actual product for at least 10 years.

Today, Mojo Vision announced that it has done just that: put 14K pixels-per-inch microdisplays, wireless radios, image sensors, and motion sensors into contact lenses that fit comfortably in the eyes. The first generation of Mojo Lenses is powered wirelessly, though future generations will have batteries on board. A small external pack, besides providing power, handles sensor data and sends information to the display. The company is calling the technology Invisible Computing, and company representatives say it will get people’s eyes off their phones and back onto the world around them.

The first application, says Steve Sinclair, senior vice president of product and marketing, will likely be for people with low vision—providing real-time edge detection and dropping crisp lines around objects. In a demonstration last week at CES 2020, I used a working prototype (albeit by squinting through the lens rather than putting it into my eyes), and the device highlighted shapes in bright green as I looked around a dimly lit room.