A research team headed by the University of Zurich has developed a powerful new method to precisely edit DNA by combining cutting-edge genetic engineering with artificial intelligence. This technique opens the door to more accurate modeling of human diseases and lays the groundwork for next-generation gene therapies.

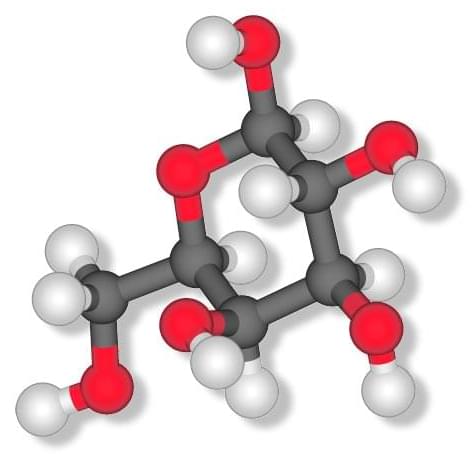

Precise, targeted DNA editing with CRISPR/Cas technology, whether through small point mutations or the integration of whole genes, has great potential for applications in biotechnology and gene therapy. However, it is essential that the so-called gene scissors maintain genomic integrity and do not cause unintended genetic changes, which could lead to side effects. Normally, double-strand breaks in the DNA molecule are repaired accurately in humans and other organisms. Occasionally, however, this DNA end-joining repair introduces genetic errors.

Gene editing with greatly improved precision

Now, scientists from the University of Zurich (UZH), Ghent University in Belgium and ETH Zurich have developed a new method that greatly improves the precision of genome editing. Using artificial intelligence (AI), the tool, called Pythia, predicts how cells repair their DNA after it is cut by gene editing tools such as CRISPR/Cas9. “Our team developed tiny DNA repair templates, which act like molecular glue and guide the cell to make precise genetic changes,” says lead author Thomas Naert, who pioneered the technology at UZH and is currently a postdoc at Ghent University.
