Highlights from the conference

Harvard University has been kneecapped, and there will be no U.S. university left in the top 10.

The Nature Index tracks the affiliations of high-quality scientific articles. Updated monthly, the Nature Index presents research outputs by institution and country. Use the Nature Index to interrogate publication patterns and to benchmark research performance.

By playing video games, Neuralink’s second brain-implant recipient is showing the potential of assistive technology for people with quadriplegia.

An interdisciplinary research team has demonstrated a durable response to a novel treatment for patients with advanced liver cancer: combined locoregional therapy and immunotherapy (LRT-IO). The study is the first to investigate long-term outcomes in patients with locally advanced liver cancer who receive this treatment. The researchers identified key factors associated with a complete response and found that this pioneering approach is safe, effective and sustainable.

The findings were recently published in JAMA Oncology. The team included researchers from the Department of Surgery and Department of Clinical Oncology, School of Clinical Medicine at the LKS Faculty of Medicine of the University of Hong Kong (HKUMed).

Advanced liver cancer is often considered incurable, but it can sometimes be converted to a treatable stage through a combination of therapies, potentially leading to curative surgery.

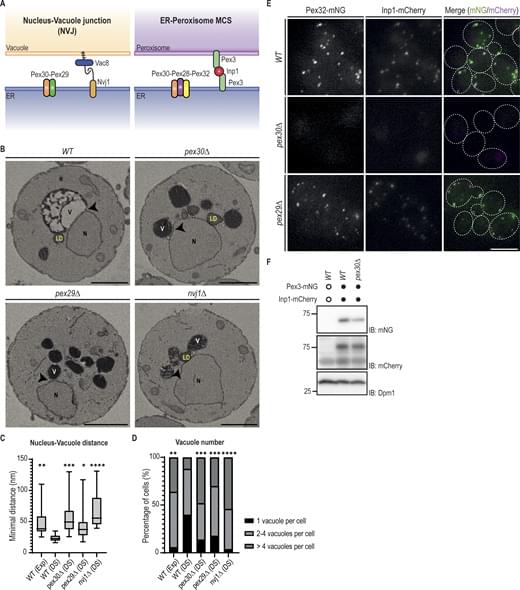

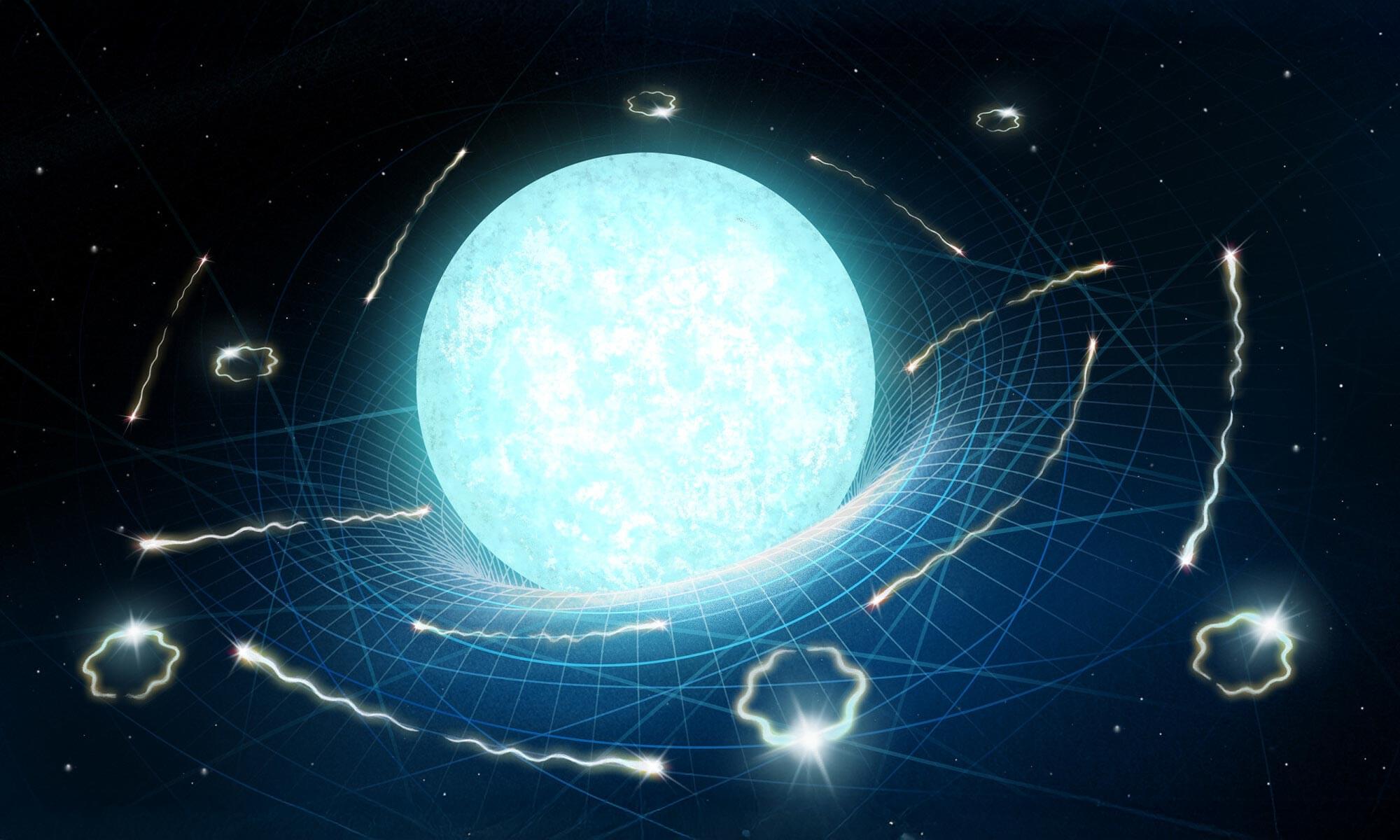

When people think of black holes, they imagine something dramatic: a star exploding in space, collapsing in on itself, and forming a cosmic monster that eats everything around it. But what if black holes didn’t always begin with a bang? What if, instead, they started quietly—growing inside stars, which still appear alive from the outside, without anyone noticing?

Our recent astrophysical research, published in Physical Review D, suggests this could be happening—and the story is far stranger and more fascinating than we imagined.

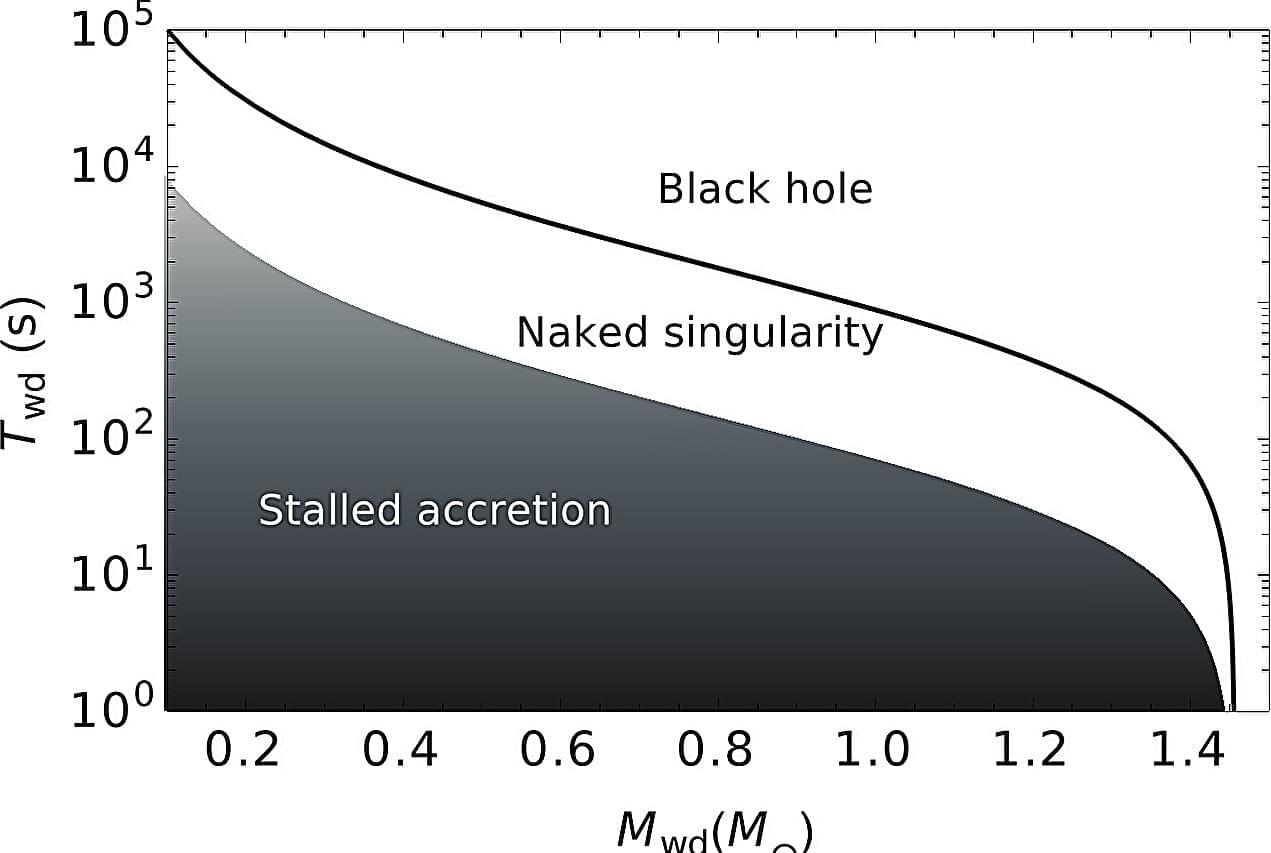

A gene that regulates root development in vascular plants is also involved in organ development in liverworts, land plants so old that they lack true roots. The Kobe University discovery, published in New Phytologist, highlights co-option, a fundamental evolutionary dynamic in which a mechanism evolves first and is later adopted for a different purpose.

When scientists discover that a gene is necessary for the development of a trait, they are quick to ask how long the gene has played that role and how the gene’s evolution has contributed to the evolution of the trait.

Kobe University plant biologist Fukaki Hidehiro says, “My group previously discovered that a gene called RLF is necessary for lateral root development in the model plant Arabidopsis thaliana, but it was completely new that the group of genes RLF belongs to is involved in plant organ development. So we wanted to know whether the equivalent of this gene in other plants is also involved in similar processes.”

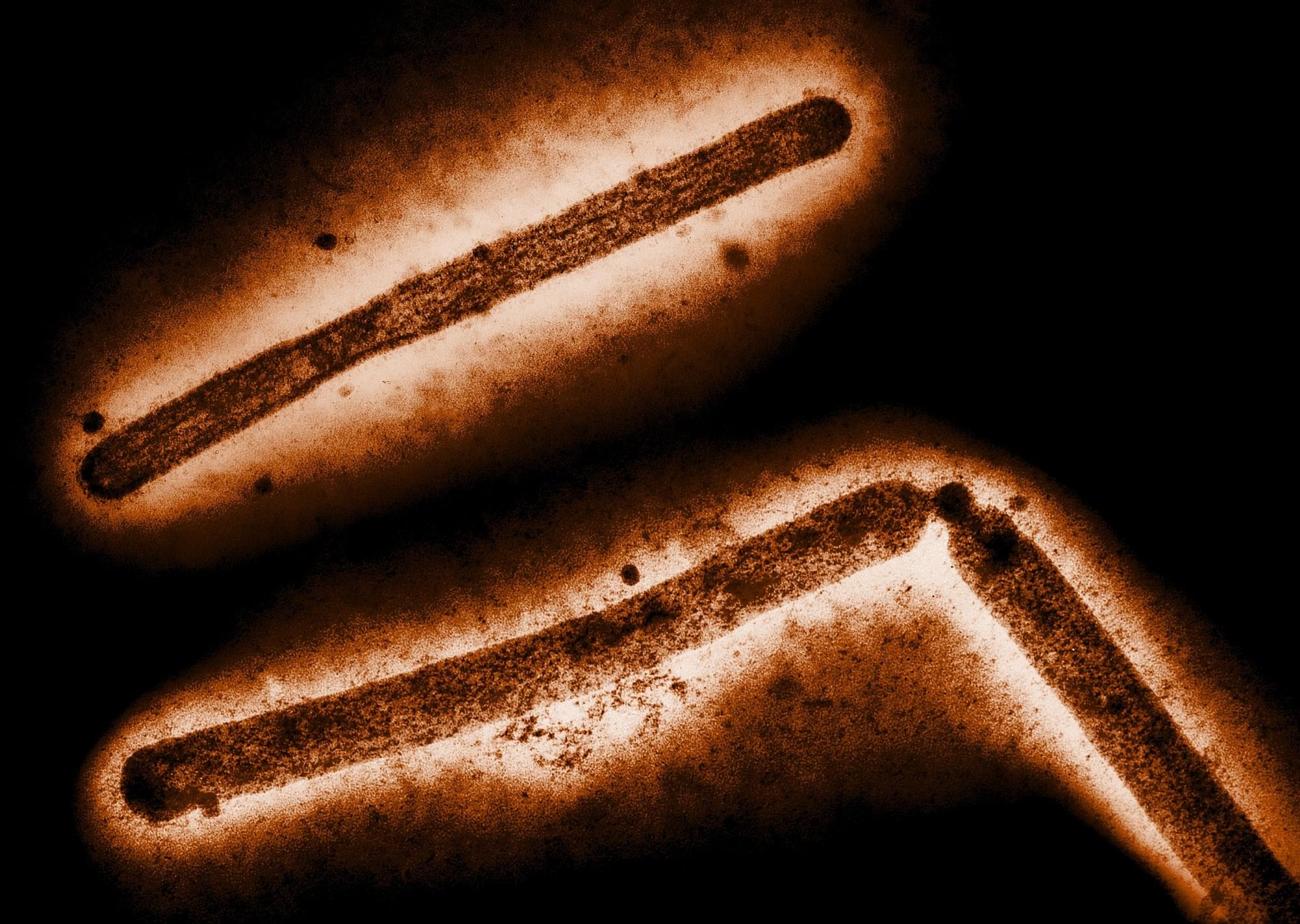

The Gs/Gd lineage of highly pathogenic H5 avian influenza viruses—including H5N1—has rapidly evolved, spreading globally and infecting a growing range of birds, mammals, and occasionally humans. This review highlights the expanding risks, the challenges of cross-species transmission, and urgent needs for surveillance, vaccination, and a unified One Health response.

What if black holes weren’t the only things slowly vanishing from existence? Scientists have now shown that all dense cosmic bodies—from neutron stars to white dwarfs—might eventually evaporate via Hawking-like radiation.

Even more shocking, the end of the universe could come far sooner than expected: “only” 10⁷⁸ years from now, rather than the impossibly long 10¹¹⁰⁰ years once predicted. In an ambitious blend of astrophysics, quantum theory, and math, this playful yet serious study also computes the eventual fates of the Moon and even of a human.

Black Holes Aren’t Alone