The infamous Pegasus spyware created by Israeli firm NSO can turn any infected smartphone into a remote microphone or camera. Here’s how to stay safe and know if you’ve been hacked.

In its Series E financing round, Plenty Unlimited secured $400 million – the largest investment to date for an indoor farming company. In addition to Walmart and existing investor SoftBank, new partners One Madison Group and JS Capital also participated in the round.

Plenty Unlimited will use this funding to support its growth strategy, including leveraging its technology platform to sell multi-crop farms directly to partners.

“The indoor farming sector is at an exciting inflection point, poised to reach its full potential as a new asset class that addresses the significant need to provide access to fresh, nutritious food year-round, even in geographies where traditional farming is difficult,” said Omar Asali, Chairman and CEO of One Madison Group. “Plenty has truly ‘cracked the code’ on the technology and economics of indoor farming. It has developed an innovative and scalable model that can deliver fresh, sustainable produce to retailers, growers and governments anywhere in the world.”

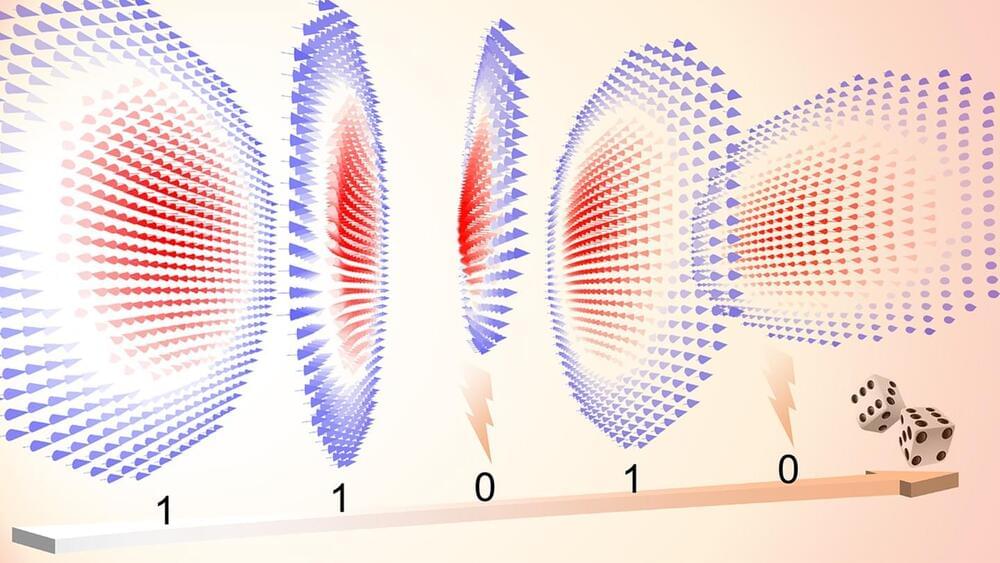

PROVIDENCE, R.I. [Brown University] — Whether for use in cybersecurity, gaming or scientific simulation, the world needs true random numbers, but generating them is harder than one might think. A group of Brown University physicists, however, has developed a technique that can potentially generate millions of random digits per second by harnessing the behavior of skyrmions, tiny magnetic anomalies that arise in certain two-dimensional materials.

Their research, published in Nature Communications, reveals previously unexplored dynamics of single skyrmions, the researchers say. Discovered around a half-decade ago, skyrmions have sparked interest in physics as a path toward next-generation computing devices that take advantage of the magnetic properties of particles, a field known as spintronics.

“There has been a lot of research into the global dynamics of skyrmions, using their movements as a basis for performing computations,” said Gang Xiao, chair of the Department of Physics at Brown and senior author of the research. “But in this work, we show that purely random fluctuations in the size of skyrmions can be useful as well. In this case, we show that we can use those fluctuations to generate random numbers, potentially as many as 10 million digits per second.”

Researchers at Leipzig University have succeeded in moving tiny amounts of liquid at will by remotely heating water over a metal film with a laser. The currents generated in this way can be used to manipulate and even capture tiny objects. This will unlock groundbreaking new solutions for nanotechnology, for the manipulation of liquids in tiny spaces, and for diagnostics, where new types of sensor systems could detect the smallest concentrations of substances.

The findings are described in an article recently published in Nature Communications (“Hydrodynamic manipulation of nano-objects by optically induced thermo-osmotic flows”).

Illustration of a gold nanoparticle trapped near a locally heated gold surface by hydrodynamic and van der Waals forces. (Image: Martin Fränzl, Universität Leipzig)

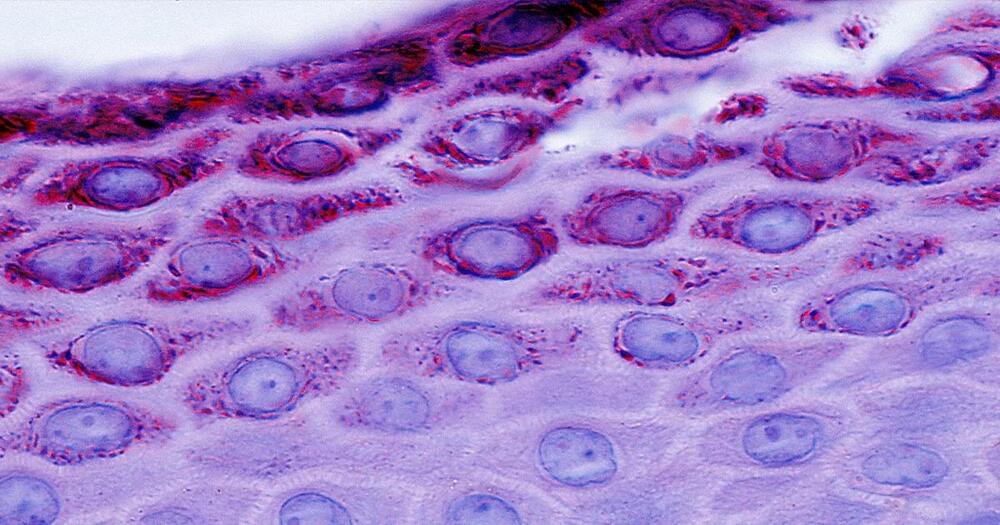

All memory storage devices, from your brain to the RAM in your computer, store information by changing their physical qualities. Over 130 years ago, pioneering neuroscientist Santiago Ramón y Cajal first suggested that the brain stores information by rearranging the connections, or synapses, between neurons.

Since then, neuroscientists have attempted to understand the physical changes associated with memory formation. But visualizing and mapping synapses is challenging. For one, synapses are very small and tightly packed together. They’re roughly 10 billion times smaller than the smallest object a standard clinical MRI can visualize. Furthermore, there are approximately 1 billion synapses in the mouse brains researchers often use to study brain function, and they’re all the same opaque-to-translucent color as the tissue surrounding them.

A new imaging technique my colleagues and I developed, however, has allowed us to map synapses during memory formation. We found that the process of forming new memories changes how brain cells are connected to one another. While some areas of the brain create more connections, others lose them.