New AI tools let you generate selfies in a variety of styles and angles that will make anyone scrolling Instagram jealous.

Doctor shares five ways to live longer. We all know that to live a long, high-quality life, we have to make healthy lifestyle choices that require commitment and discipline, like eating well and exercising. There’s no way around it, but there are other things we can do as well to help prolong our lifespan.


Open the website thispersondoesnotexist, and you’ll find a headshot of a stranger. At first glance it looks like someone got the HR records from work and stuck them on a website. Refreshing the site generates another face, one that looks like a person you might know.


Scientists have successfully increased the lifespan of animals, and early studies describe how we might reverse aging. So how could we one day reverse aging?


In the 1960s, scientists observed that cells divide only a limited number of times in the laboratory (the Hayflick limit). Over the years, so-called hallmarks of aging have been uncovered. These hallmarks govern how our cells age, and we could try to slow them down to “reverse aging”.

The first hallmark of aging is mutation. We can acquire mutations through exposure to UV radiation or certain chemicals, or through cell division. Cell division also leads to a second hallmark of aging, telomere attrition. Furthermore, our mitochondria become less active as their quality checks stop working properly.

The hallmarks of aging are tightly linked to epigenetics. Epigenetics means that we have mechanisms (DNA methylation, histone modifications) which regulate the activity of genes. Epigenetics governs the development of embryonic stem cells into the cells of our body, but it also impacts aging. The loss of mitochondria, for example, is linked to dysfunctional epigenetic layers. As we age, at least three epigenetic modifications accumulate, namely H4K16 acetylation, H3K4 trimethylation, and H4K20 trimethylation. The thing is that epigenetics is reversible… so can we also reverse aging?

Diets have been shown to slow down (and, to a small degree, reverse) aging. Cells also show less DNA damage, and we find higher levels of proteins that are found in “young” cells. The activity of mitochondria is also increased if we undergo caloric restriction. Diets also impact the production of sirtuins, which increase lifespan and reverse aging. Different compounds (such as NMN and remodelin) have been shown to improve the epigenetic landscape, which might have an effect on reversing aging. Exercise might also help to reverse aging, as it helps to increase the activity of mitochondria. Meditation and having less stress also help to increase the length of telomeres, which might help to reverse aging. All in all, studies suggest that some hallmarks of aging can be reversed, so let’s see where that goes!

0:00–0:46 Intro.

0:46–3:53 Hallmarks of Aging.

3:53–6:38 Epigenetics Controls Genes.

6:38–8:45 Reversing Aging: what is known.

8:45–11:25 Reversing Aging through Diets & Sports.

11:25–12:13 My Opinion.


The speed of light is a universal physical constant that is important in many aspects of physics. In a vacuum, light travels at a constant, finite speed of about 186,000 miles per second. But did you know that the speed of light can be manipulated?

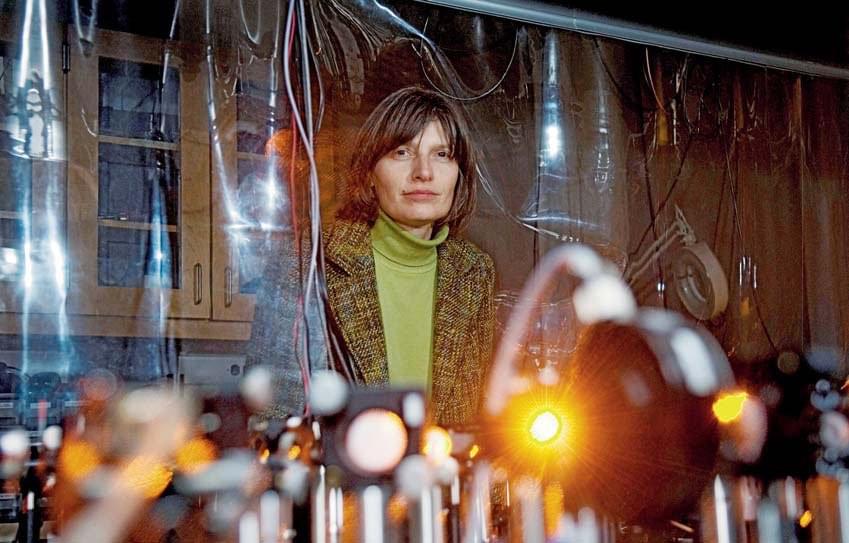

In 1999, Lene Hau, a physicist from Denmark, was the first to slow light down to only 38 mph. Later, she could totally stop, control, and move it.
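To put those two figures side by side, here is a minimal Python sketch of the arithmetic. It uses only the numbers quoted above (186,000 miles per second and 38 mph); the function and constant names are made up for illustration.

```python
# Figures quoted in the text above; both are rounded values.
LIGHT_SPEED_MILES_PER_SECOND = 186_000  # approximate speed of light
SLOWED_LIGHT_MPH = 38                   # speed reported for the 1999 experiment

SECONDS_PER_HOUR = 3_600


def slowdown_factor(normal_miles_per_second: float, slowed_mph: float) -> float:
    """Return how many times slower the slowed light is than normal light."""
    # Convert miles per second to miles per hour so both speeds share a unit.
    normal_mph = normal_miles_per_second * SECONDS_PER_HOUR
    return normal_mph / slowed_mph


normal_mph = LIGHT_SPEED_MILES_PER_SECOND * SECONDS_PER_HOUR
factor = slowdown_factor(LIGHT_SPEED_MILES_PER_SECOND, SLOWED_LIGHT_MPH)

print(f"Normal light: {normal_mph:,} mph")
print(f"Slowdown factor: about {factor:,.0f}x")
```

With these rounded inputs, normal light works out to 669,600,000 mph, so the slowed light was moving roughly 17.6 million times slower.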

In this episode we take a look at the many problems facing China’s economy. How did the country end up in this position and what does it mean for the rest of the world?

About ColdFusion

ColdFusion is an Australian-based online media company, run independently by Dagogo Altraide since 2009. Topics cover anything in science, technology, history and business, presented in a calm and relaxed environment.



Synthetic data will be a huge industry in five to 10 years. For instance, Gartner estimates that by 2024, 60% of data for AI applications will be synthetic. This type of data and the tools used to create it have significant untapped investment potential. Here’s why.

We are effectively on the cusp of a revolution in how machine learning (ML) and artificial intelligence (AI) can grow and have even more applications across sectors and industries.

At 200 times stronger than steel, graphene has been hailed as a super material of the future since its discovery in 2004. The ultrathin carbon material is an incredibly strong electrical and thermal conductor, making it a perfect ingredient to enhance semiconductor chips found in many electrical devices.

But while graphene-based research has been fast-tracked, the nanomaterial has hit roadblocks: in particular, manufacturers have not been able to create large, industrially relevant amounts of the material. New research from the laboratory of Nai-Chang Yeh, the Thomas W. Hogan Professor of Physics, is reinvigorating the graphene craze.

In two new studies, the researchers demonstrate that graphene can greatly improve electrical circuits required for wearable and flexible electronics such as smart health patches, bendable smartphones, helmets, large folding display screens, and more.