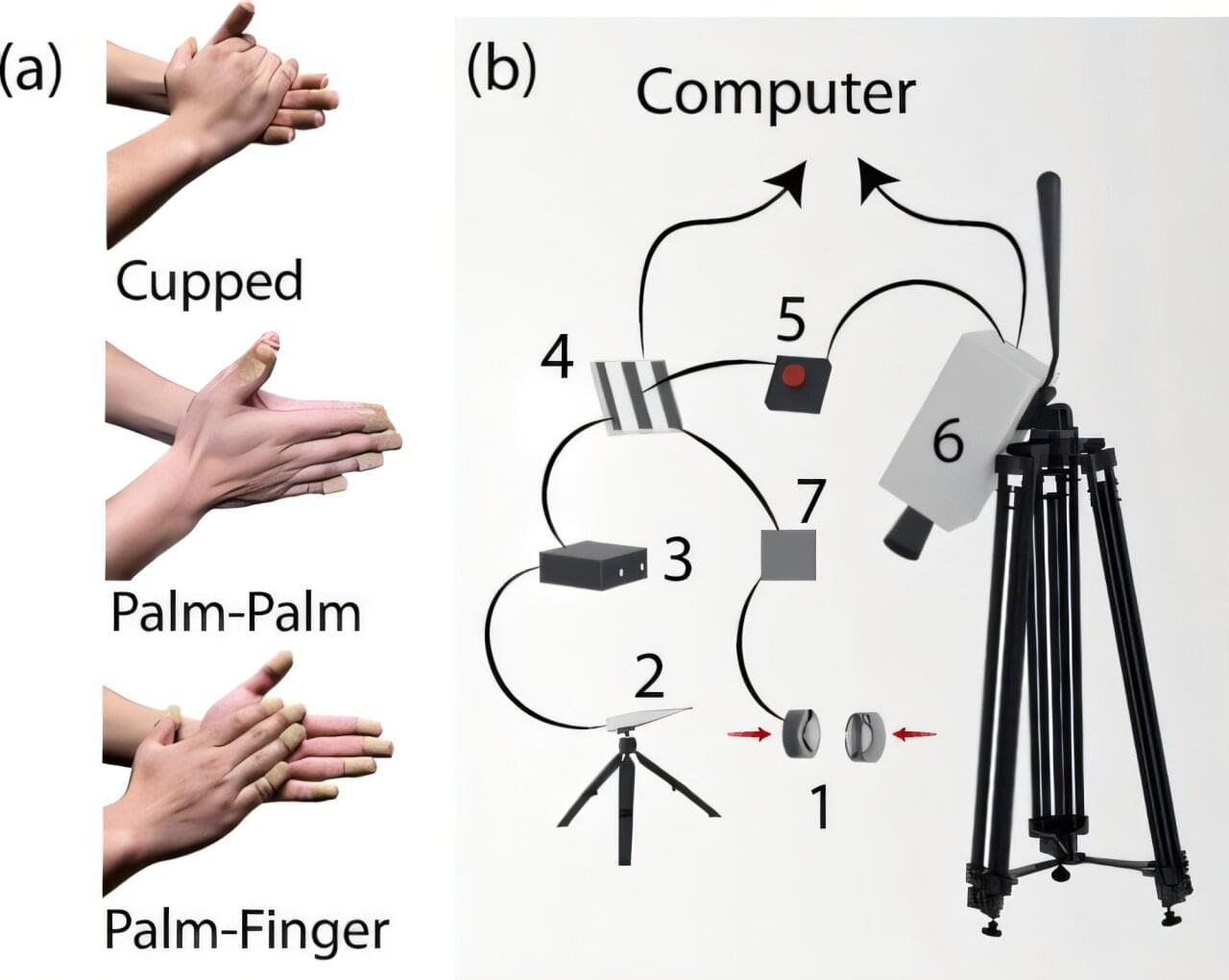

In a scene toward the end of the 2006 film “X-Men: The Last Stand,” a character claps and sends a shock wave that knocks out an opposing army. Sunny Jung, professor of biological and environmental engineering in the College of Agriculture and Life Sciences, was intrigued. “It made me curious about how the wave propagates when we clap our hands,” Jung said.

Jung is senior author of a study, published in Physical Review Research, that elucidates the complex physical mechanisms and fluid dynamics involved in a handclap. The work has potential applications in bioacoustics and in personal identification, where a person's handclap could be used to identify them.

“Clapping hands is a daily, human activity and form of communication,” Jung said. “We use it in religious rituals, or to express appreciation: to resonate ourselves and excite ourselves. We wanted to explore how we generate the sound depending on how we clap our hands.”