From superfast magnetic levitation (maglev) trains and computer chips to magnetic resonance imaging (MRI) machines and particle accelerators, superconductors are electrifying many aspects of our lives. Superconductivity is a remarkable property that allows a material to conduct electric current with zero resistance once it is cooled below a certain critical temperature. This means that superconducting materials can transmit electrical energy very efficiently, without losing part of it as heat, unlike conventional conductors such as copper.
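To make the efficiency point concrete, the sketch below compares Joule heating, P = I²R, in an ordinary copper wire with the same current flowing through a superconductor. It assumes a hypothetical wire 1 km long with a 10 mm² cross-section carrying 100 A, and a copper resistivity of roughly 1.68 × 10⁻⁸ Ω·m near room temperature. It ignores the energy spent on cooling and the small AC losses that real superconductors can show, so treat it as an illustration rather than an engineering estimate.

```python
# Illustrative comparison of resistive (Joule) heating, P = I^2 * R,
# in a normal conductor versus a superconductor below its critical temperature.

COPPER_RESISTIVITY_OHM_M = 1.68e-8  # approximate resistivity of copper near 20 °C


def wire_resistance(resistivity: float, length_m: float, area_m2: float) -> float:
    """Resistance of a uniform wire: R = rho * L / A."""
    return resistivity * length_m / area_m2


def joule_loss_watts(current_a: float, resistance_ohm: float) -> float:
    """Power dissipated as heat: P = I^2 * R."""
    return current_a ** 2 * resistance_ohm


# Hypothetical wire: 1 km long, 10 mm^2 (1e-5 m^2) cross-section, carrying 100 A.
r_copper = wire_resistance(COPPER_RESISTIVITY_OHM_M, 1000.0, 10e-6)
p_copper = joule_loss_watts(100.0, r_copper)

# Below its critical temperature, a superconductor's DC resistance is zero,
# so the same current dissipates no Joule heat (AC losses and cooling ignored).
p_superconductor = joule_loss_watts(100.0, 0.0)

print(f"copper: R = {r_copper:.2f} ohm, loss = {p_copper:.0f} W")
print(f"superconductor: loss = {p_superconductor:.0f} W")
```

With these assumed numbers the copper wire turns roughly 17 kW into heat, while the superconducting case dissipates nothing in the DC limit.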

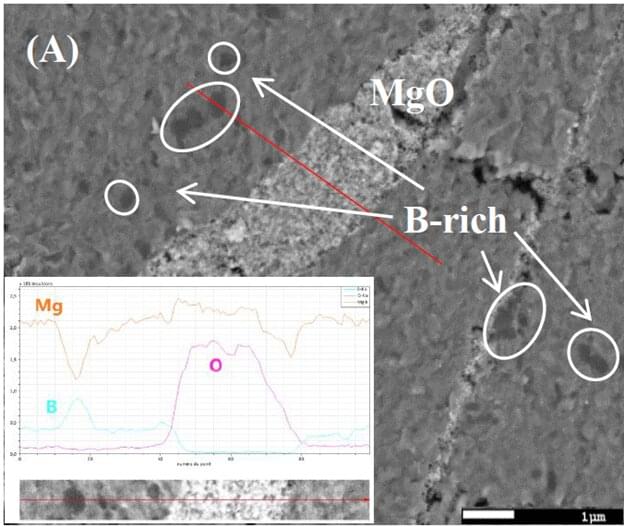

Almost two decades ago, scientists discovered superconductivity in a new material: magnesium diboride, or MgB2. MgB2 has seen a resurgence in popularity thanks to its low cost, its strong superconducting performance (its critical temperature of about 39 K is unusually high for a conventional superconductor), and its high critical current density, which means that, compared with other materials, MgB2 remains a superconductor even when larger electric currents are passed through it. It can also trap strong magnetic fields, a consequence of the strong pinning of vortices: tubes of magnetic flux, each encircled by a loop of circulating current, that penetrate a superconductor.
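Critical current density is the critical current divided by the conductor's cross-sectional area, Jc = Ic / A. One common way to relate Jc to the trapped magnetic fields mentioned above is the Bean critical-state model, in which a long, fully magnetized cylinder of radius R traps a peak field of roughly B = μ0·Jc·R at its center. The sketch below is an idealized illustration under that model; the function names and input values are hypothetical, not measured MgB2 data, and real bulk samples of finite height trap less field than this estimate.

```python
import math

MU_0 = 4 * math.pi * 1e-7  # vacuum permeability, T·m/A


def critical_current_density(critical_current_a: float, cross_section_m2: float) -> float:
    """Jc = Ic / A: the largest current per unit area carried without resistance."""
    return critical_current_a / cross_section_m2


def bean_trapped_field_tesla(jc_a_per_m2: float, radius_m: float) -> float:
    """Peak trapped field at the center of an infinitely long, fully magnetized
    cylinder in the Bean critical-state model: B = mu_0 * Jc * R.
    Finite-sized bulk samples trap less field than this idealized value."""
    return MU_0 * jc_a_per_m2 * radius_m


# Hypothetical inputs, not measured MgB2 data: 100 A through 1 mm^2 gives 1e8 A/m^2.
jc = critical_current_density(critical_current_a=100.0, cross_section_m2=1e-6)
for radius in (0.005, 0.010, 0.020):  # cylinder radii in meters
    b = bean_trapped_field_tesla(jc, radius)
    print(f"R = {radius * 1000:.0f} mm -> B_trapped ~ {b:.2f} T")
```

Because the estimate is linear in both Jc and R, stronger vortex pinning (higher Jc) and larger samples both raise the trapped field in this model.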

The properties of the intermetallic compound MgB2 can also be tuned. For instance, its critical current density (Jc) can be raised by reducing the grain size, which increases the number of grain boundaries; in MgB2 these boundaries act as pinning centers for vortices. Conventional layered superconductors do not offer this kind of tunability.
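Why smaller grains mean more grain boundaries is a matter of geometry. A standard stereological relation estimates the grain-boundary area per unit volume as S_V = 2/L, where L is the mean linear intercept (a measure of average grain size). The sketch below evaluates this for a few hypothetical grain sizes. It shows only how boundary density scales with grain size, not how Jc itself responds, which depends on processing details this relation does not capture.

```python
def boundary_area_per_volume(mean_intercept_m: float) -> float:
    """Stereological estimate S_V = 2 / L, where L is the mean linear intercept
    (average grain size along random test lines). Units: m^2 of boundary per m^3."""
    return 2.0 / mean_intercept_m


# Hypothetical grain sizes from 1 micrometer down to 100 nanometers.
for size_nm in (1000, 500, 200, 100):
    sv = boundary_area_per_volume(size_nm * 1e-9)
    print(f"grain size {size_nm:>4} nm -> S_V ~ {sv:.1e} m^2/m^3")
```

Shrinking the grains tenfold, from 1 µm to 100 nm, multiplies the boundary area per unit volume by ten under this relation.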