Life science continued to dominate the research schedule aboard the International Space Station on Wednesday to benefit humans living on and off the Earth. The seven Expedition 67 orbital residents explored how living in microgravity affects tissue regeneration, crew psychology, and the human digestive system.

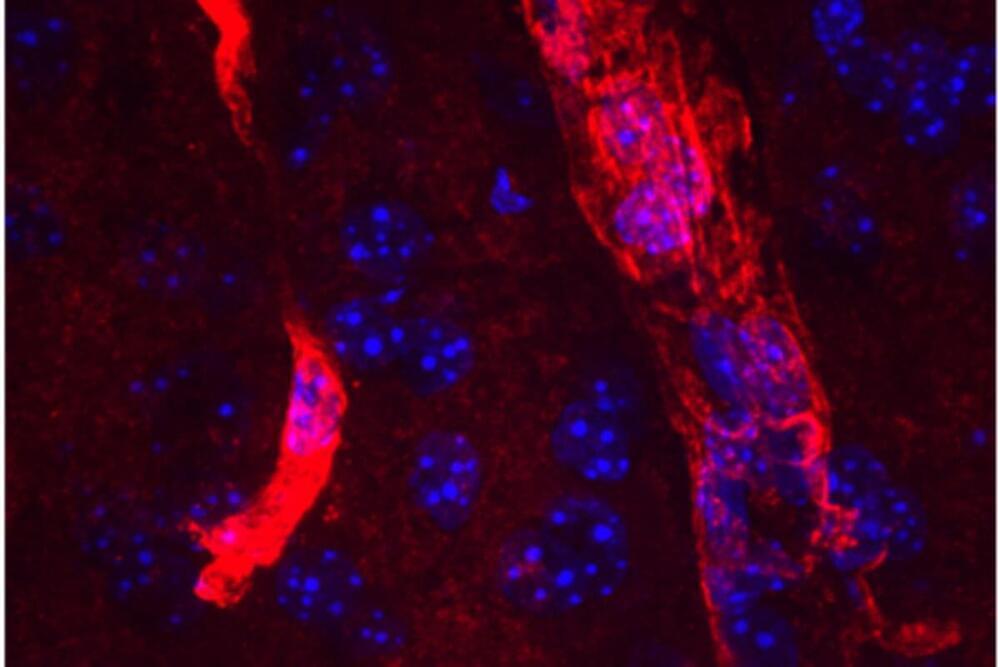

Learning to heal wounds in space is critical as NASA and its international partners plan crewed missions to the Moon, Mars, and beyond. Four station astronauts have been working together this week on the skin healing study taking place inside the Kibo laboratory module. Flight Engineers Kjell Lindgren, Bob Hines, and Jessica Watkins, all from NASA, with Samantha Cristoforetti of ESA (European Space Agency), are studying surgical techniques such as biopsies, suture splints, and wound dressing inside Kibo’s Life Science Glovebox.

Scientists on Earth seek to identify the molecular mechanisms that occur during tissue regeneration in weightlessness. Their observations may lead to advanced therapies and provide insights into how the accelerated skin aging caused by spaceflight affects an astronaut’s ability to heal. The biomedical experiment may also contribute to better wound healing techniques on Earth.