The U.S. used to be a global leader in lithium production, but now has fallen way behind. Several domestic projects are in the works, but will it be enough?

A light in the dark: If quantum computers continue to advance and perform more calculations at lower cost, Rinaldi and his team might be able to reveal what happens inside black holes, beyond the event horizon, the region immediately surrounding a black hole’s singularity within which not even light, nor perhaps time itself, can escape the immense force of gravity.

In practical terms, the event horizon prevents all conventional, light-based observations. But, perhaps more compellingly, the team hopes that further advances in this line of inquiry will do more than peek inside a black hole and will unlock what physicists have dreamed of since the days of Einstein: a unified theory of physics.

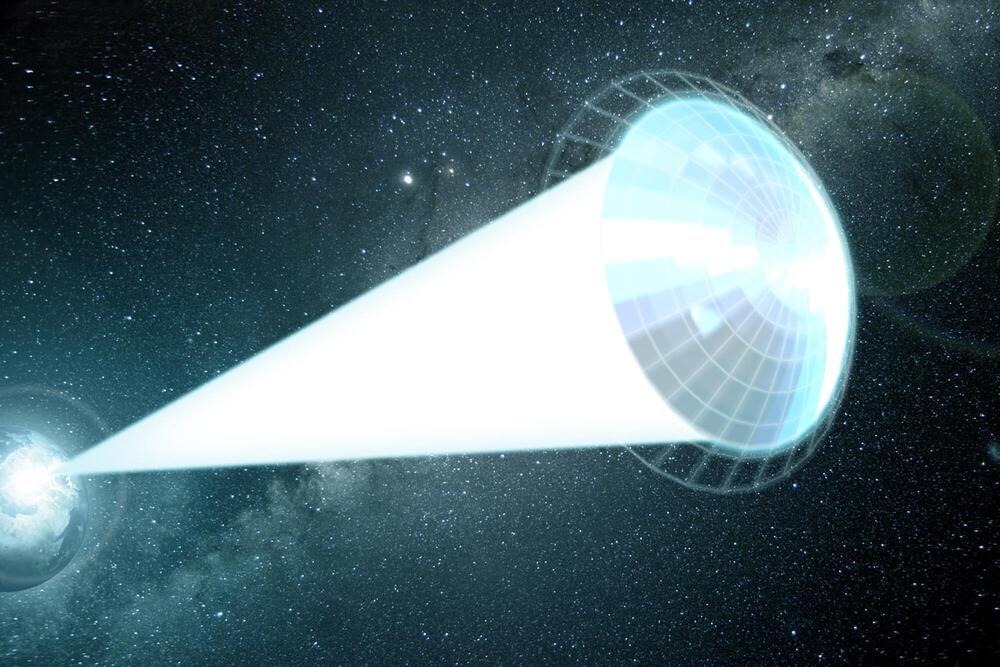

Astronomers have been waiting decades for the launch of the James Webb Space Telescope, which promises to peer farther into space than ever before. But if humans want to actually reach our nearest stellar neighbor, they will need to wait quite a bit longer: a probe sent to Alpha Centauri with a rocket would need roughly 80,000 years to make the trip.

Igor Bargatin, Associate Professor in the Department of Mechanical Engineering and Applied Mechanics, is trying to solve this futuristic problem with ideas taken from one of humanity’s oldest transportation technologies: the sail.

As part of the Breakthrough Starshot Initiative, he and his colleagues are designing the size, shape and materials for a sail pushed not by wind, but by light.
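As a rough sanity check on the 80,000-year figure above, the travel time follows from dividing distance by speed. The sketch below assumes a distance of about 4.37 light-years to Alpha Centauri and a cruise speed of about 17 km/s, roughly that of Voyager 1. Neither number comes from the article, and the function name is purely illustrative.

```python
# Back-of-the-envelope travel time to Alpha Centauri at chemical-rocket speeds.
# Assumed inputs (not from the article): distance of ~4.37 light-years and a
# cruise speed of ~17 km/s, roughly Voyager 1's speed.

KM_PER_LIGHT_YEAR = 9.4607e12          # kilometers in one light-year
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # seconds in one Julian year


def travel_time_years(distance_ly: float, speed_km_s: float) -> float:
    """Return the travel time in years at a constant speed."""
    distance_km = distance_ly * KM_PER_LIGHT_YEAR
    return distance_km / speed_km_s / SECONDS_PER_YEAR


years = travel_time_years(4.37, 17.0)
print(f"{years:,.0f} years")
```

Under these assumptions the result lands in the high tens of thousands of years, the same order of magnitude as the article's estimate. A light-driven sail is attractive precisely because it is not bound to such speeds.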

Believe it or not, graphics card prices seem to be headed down. 3D Center has been tracking and reporting pricing trends for GPUs in Germany and Austria. There’s good news: prices are indeed on a downward slope. Even better, this is the third month in a row they have declined, so we can’t just write it off as a one-time fluke. That said, here’s the bad news: even if this trend continues, which is a big if, prices are still so inflated that months of “progress” may only result in GPUs returning to MSRP, supply issues notwithstanding. At this point we’ll take what we can get.

The report by 3D Center for February mirrors the company’s report from last month, which we covered here. There’s a noticeable downward trend in pricing for both AMD and Nvidia GPUs. It’s almost shocking to see after so many reports of price increases. The trend is undeniable. According to 3D Center, the February price of AMD’s Radeon RDNA2 cards has fallen 18 percent, to 145 percent over MSRP. For Nvidia’s Ampere GPUs, prices fell 20 percent, leaving them 157 percent over MSRP.

Instead of relying on a fixed catalogue of available materials or undergoing trial-and-error attempts to come up with new ones, engineers can turn to algorithms running on supercomputers to design unique materials, based on a “materials genome,” with properties tailored to specific needs. Among the new classes of emerging materials are “transient” electronics and bioelectronics that portend applications and industries on a scale comparable to what followed the advent of silicon-based electronics.

In each of the three technological spheres, we find the Cloud increasingly woven into the fabric of innovation. The Cloud itself is, synergistically, evolving and expanding from the advances in new materials and machines, creating a virtuous circle of self-amplifying progress. It is a unique feature of our emerging century that constitutes a catalyst for innovation and productivity, the likes of which the world has never seen.

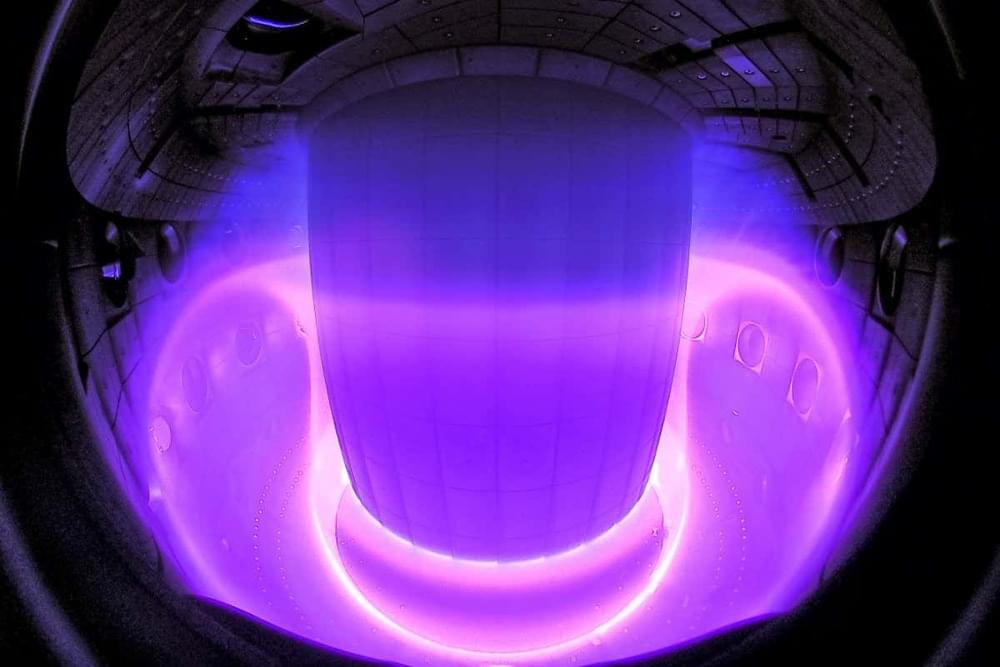

For the first time, artificial intelligence has been used to control the super-hot plasma inside a fusion reactor, offering a new way to increase stability and efficiency.

Unleashed cosmic ray bombardment may have eaten up ozone, driving short-term climate swings.

Lithium-sulfur batteries have three times the potential charge capacity of lithium-ion batteries, which are found in everything from smartphones to electric cars. Their inherent instability, however, has so far made them unsuitable for commercial applications, with lithium-sulfur batteries undergoing a 78 per cent change in size every charging cycle.

Overcoming this issue would not only radically improve the performance of battery-powered devices, it would also address some of the environmental concerns that come with lithium-ion batteries, such as the sourcing and disposal of rare raw materials.

Researchers at Lund University in Sweden have succeeded in developing a simple hydrocarbon molecule that performs a logic gate function, similar to that of a transistor, within a single molecule. The discovery could make electric components on a molecular scale possible in the future. The results are published in Nature Communications.

Manufacturing very small components is an important challenge in both research and development. One example is transistors—the smaller they are, the faster and more energy efficient our computers become. But is there a limit to how small logic gates can become? And is it possible to create electric machines on a molecular scale? Yes, perhaps, is the answer from a chemistry research team at Lund University.

“We have developed a simple hydrocarbon molecule that changes its form, and at the same time goes from insulating to conductive, when exposed to electric potential. The successful formula was to design a so-called anti-aromatic ring in a molecule so that it becomes more robust and can both receive and relay electrons,” says Daniel Strand, chemistry researcher at Lund University.