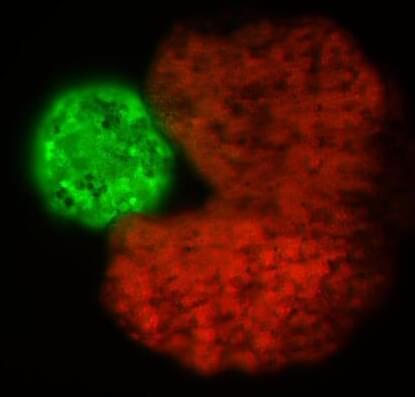

Micro-sized cameras have great potential to spot problems in the human body and enable sensing for super-small robots, but past approaches captured fuzzy, distorted images with limited fields of view.

Now, researchers at Princeton University and the University of Washington have overcome these obstacles with an ultracompact camera the size of a coarse grain of salt. The new system can produce crisp, full-color images on par with a conventional compound camera lens 500,000 times larger in volume, the researchers reported in a paper published Nov. 29 in Nature Communications.

Made possible by a joint design of the camera’s hardware and computational processing, the system could enable minimally invasive endoscopy with medical robots to diagnose and treat diseases, and improve imaging for other robots with size and weight constraints. Arrays of thousands of such cameras could be used for full-scene sensing, turning surfaces into cameras.