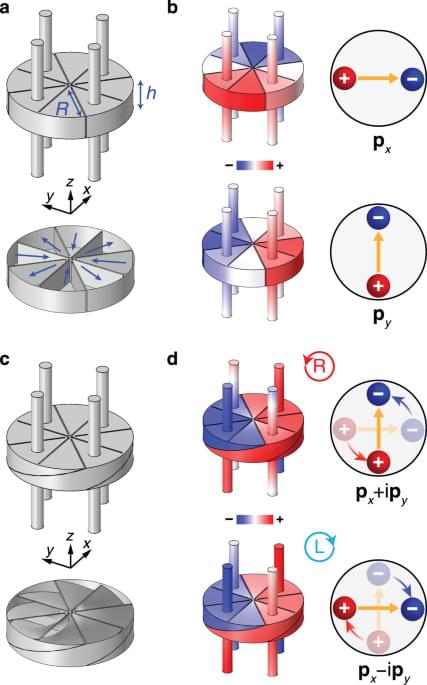

Spin-orbit acoustics is key to providing new perspectives and functionalities for sound manipulation. Here the authors theoretically and experimentally demonstrate an acoustic spin-orbit interaction that enables chiral sound-matter interactions with unprecedented applications.

Abstract. The cnidarian model organism Hydra has long been studied for its remarkable ability to regenerate its head, which is controlled by a head organizer located near the hypostome. The canonical Wnt pathway plays a central role in head organizer function during regeneration and during bud formation, which is the asexual mode of reproduction in Hydra. However, it is unclear how shared the developmental programs of head organizer genesis are in budding and regeneration. Time-series analysis of gene expression changes during head regeneration and budding revealed a set of 298 differentially expressed genes during the 48-h head regeneration and 72-h budding time courses. In order to understand the regulatory elements controlling Hydra head regeneration, we first identified 27,137 open-chromatin elements that are open in one or more sections of the organism body or regenerating tissue. We used histone modification ChIP-seq to identify 9,998 candidate proximal promoter and 3,018 candidate enhancer-like regions respectively. We show that a subset of these regulatory elements is dynamically remodeled during head regeneration and identify a set of transcription factor motifs that are enriched in the enhancer regions activated during head regeneration. Our results show that Hydra displays complex gene regulatory structures of developmentally dynamic enhancers, which suggests that the evolution of complex developmental enhancers predates the split of cnidarians and bilaterians.

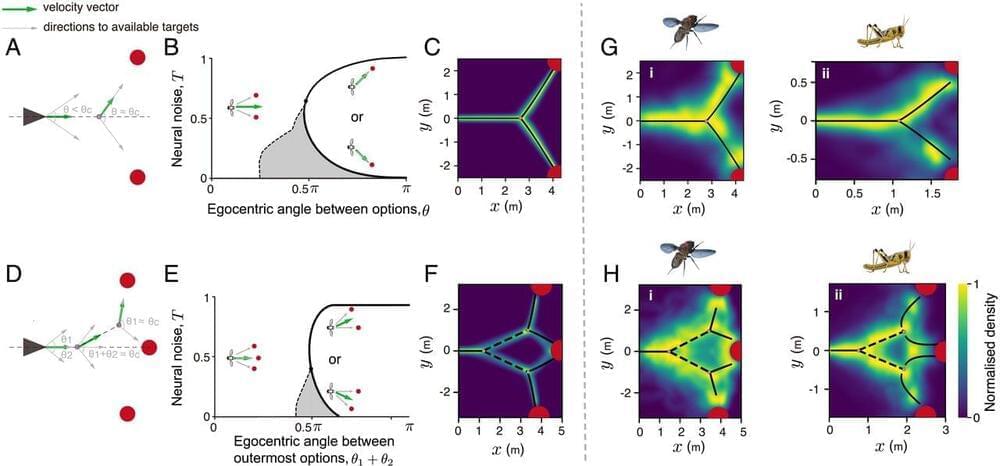

Almost all animals must make decisions on the move. Here, employing an approach that integrates theory and high-throughput experiments (using state-of-the-art virtual reality), we reveal that there exist fundamental geometrical principles that result from the inherent interplay between movement and organisms’ internal representation of space. Specifically, we find that animals spontaneously reduce the world into a series of sequential binary decisions, a response that facilitates effective decision-making and is robust both to the number of options available and to context, such as whether options are static (e.g., refuges) or mobile (e.g., other animals). We present evidence that these same principles, hitherto overlooked, apply across scales of biological organization, from individual to collective decision-making.

Animal movement data have been deposited in GitHub (https://github.

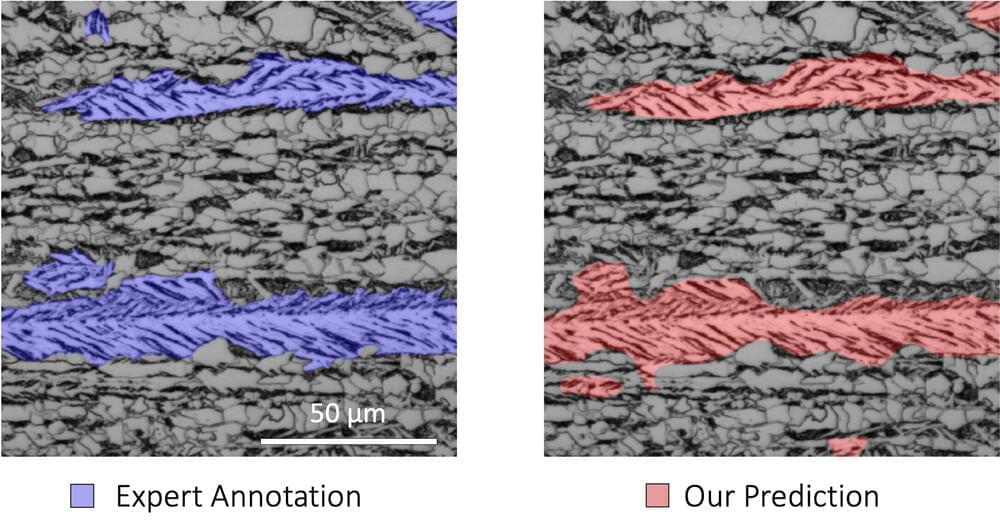

Most often, we recognize deep learning as the magic behind self-driving cars and facial recognition, but what about its ability to safeguard the quality of the materials that make up these advanced devices? Professor of Materials Science and Engineering Elizabeth Holm and Ph.D. student Bo Lei have adopted computer vision methods for microstructural images that not only require a fraction of the data deep learning typically relies on but can save materials researchers an abundance of time and money.

Quality control in materials processing requires the analysis and classification of complex material microstructures. For instance, the properties of some high-strength steels depend on the amount of lath-type bainite in the material. However, identifying bainite in microstructural images is time-consuming and expensive, as researchers must first use two types of microscopy to take a closer look and then rely on their own expertise to identify bainitic regions. “It’s not like identifying a person crossing the street when you’re driving a car,” Holm explained. “It’s very difficult for humans to categorize, so we will benefit a lot from integrating a deep learning approach.”

Their approach is very similar to that of the wider computer-vision community that drives facial recognition. The model is trained on existing material microstructure images to evaluate new images and interpret their classification. While companies like Facebook and Google train their models on millions or billions of images, materials scientists rarely have access to even ten thousand images. Therefore, it was vital that Holm and Lei use a “data-frugal method,” and train their model using only 30–50 microscopy images. “It’s like learning how to read,” Holm explained. “Once you’ve learned the alphabet you can apply that knowledge to any book. We are able to be data-frugal in part because these systems have already been trained on a large database of natural images.”
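The data-frugal idea can be illustrated with a toy sketch: keep a feature extractor fixed and train only a very small classifier on a few dozen labeled images. This is not Holm and Lei's actual pipeline. The hand-written `frozen_features` function is a hypothetical stand-in for a network pretrained on natural images, the striped and flat images are synthetic placeholders for micrographs, and every function name below is an assumption made for illustration.

```python
import random


def frozen_features(image):
    """Fixed feature map that is never updated during training.

    Hypothetical stand-in for a network pretrained on natural images.
    """
    height, width = len(image), len(image[0])
    n_pixels = height * width
    mean_intensity = sum(sum(row) for row in image) / n_pixels
    # Mean absolute difference between horizontally adjacent pixels.
    edge_strength = sum(
        abs(row[c + 1] - row[c]) for row in image for c in range(width - 1)
    ) / n_pixels
    return (mean_intensity, edge_strength)


def fit_centroids(features, labels):
    """Train the only learned part: one mean feature vector per class."""
    sums, counts = {}, {}
    for feat, label in zip(features, labels):
        acc = sums.setdefault(label, [0.0] * len(feat))
        for i, value in enumerate(feat):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {
        label: tuple(v / counts[label] for v in acc)
        for label, acc in sums.items()
    }


def predict(centroids, feat):
    """Assign the class whose centroid is nearest in feature space."""
    return min(
        centroids,
        key=lambda label: sum(
            (a - b) ** 2 for a, b in zip(feat, centroids[label])
        ),
    )


def synthetic_image(kind, rng, size=16):
    """Toy data: 'lath' images are striped, 'other' images are flat noise."""
    def pixel(col):
        if kind == "lath":
            base = 1.0 if (col // 2) % 2 == 0 else 0.0
        else:
            base = 0.5
        return base + rng.uniform(-0.1, 0.1)

    return [[pixel(col) for col in range(size)] for _ in range(size)]


def demo(seed=0, n_train=40, n_test=20):
    """Train on n_train labeled toy images and return held-out accuracy."""
    rng = random.Random(seed)
    kinds = ["lath", "other"]
    train_labels = [kinds[i % 2] for i in range(n_train)]
    train_feats = [frozen_features(synthetic_image(k, rng)) for k in train_labels]
    centroids = fit_centroids(train_feats, train_labels)
    test_labels = [kinds[i % 2] for i in range(n_test)]
    hits = sum(
        predict(centroids, frozen_features(synthetic_image(k, rng))) == k
        for k in test_labels
    )
    return hits / n_test


if __name__ == "__main__":
    print(f"held-out accuracy: {demo():.2f}")
```

Because the extractor is fixed, the only quantities learned from the 40 training images are two class centroids, which is why so few examples suffice in this toy setting. A real system would swap `frozen_features` for the activations of a pretrained convolutional network.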

Scientists aboard the International Space Station (ISS) have used magnetism as a gravity replacement in a biomanufacturing device that can make human cartilage tissue out of individual cells. The researchers say this isn’t just the first time a complex material has been assembled; it also represents an entirely new field that uses magnets to “levitate” materials in zero-gravity environments.


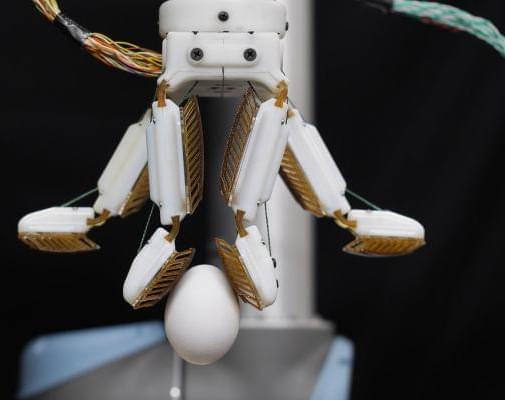

Stanford’s made a lot of progress over the years with its gecko-inspired robotic hand. In May, a version of the “gecko gripper” even found its way onto the International Space Station to test its ability to perform tasks like collecting debris and fixing satellites.

In a paper published today in Science Robotics, researchers at the university are demonstrating a far more terrestrial application for the tech: picking delicate objects. It’s something that’s long been a challenge for rigid robot hands, leading to a wide range of different solutions, including soft robotic grippers.

The team is showing off FarmHand, a four-fingered gripper inspired by both the dexterity of the human hand and the unique gripping capabilities of geckos. Of the latter, Stanford notes that the adhesive surface “creates a strong hold via microscopic flaps — Van der Waals force – a weak intermolecular force that results from subtle differences in the positions of electrons on the outsides of molecules.”

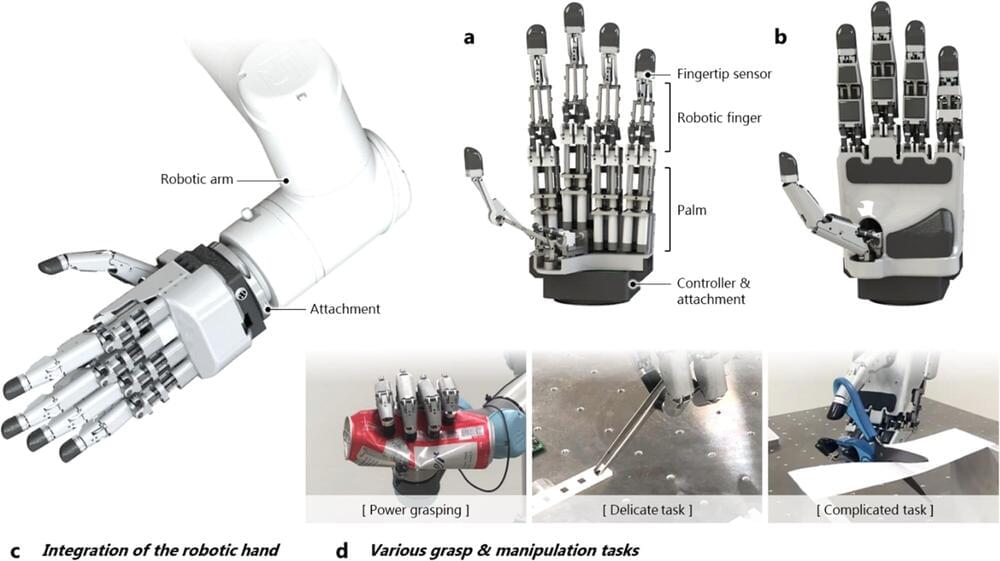

A team of researchers affiliated with multiple institutions in Korea has developed a robot hand that has abilities similar to human hands. In their paper published in the journal Nature Communications, the group describes how they achieved a high level of dexterity while keeping the hand’s size and weight low enough to attach to a robot arm.

Creating robot hands with the dexterity, strength and flexibility of human hands is a challenging task for engineers—typically, some attributes are discarded to allow for others. In this new effort, the researchers developed a new robot hand based on a linkage-driven mechanism that allows it to articulate similarly to the human hand. They began their work by conducting a survey of existing robot hands and assessing their strengths and weaknesses. They then drew up a list of features they believed their hand should have, such as fingertip force, a high degree of controllability, low cost and high dexterity.

The researchers call their new hand an integrated linkage-driven dexterous anthropomorphic (ILDA) robotic hand. Just like its human counterpart, it has four fingers and a thumb, each with three joints, and it also has fingertip sensors. The hand is just 22 centimeters long. Overall, it has 20 joints, which give it 15 degrees of freedom. It is also strong, able to exert a crushing force of 34 newtons, and it weighs just 1.1 kg.

Why AI will completely take over science by the end of the next decade:

“It can take decades for scientists to identify physical laws, statements that explain anything from how gravity affects objects to why energy can’t be created or destroyed. Purdue University researchers have found a way to use machine learning for reducing that time to just a few days.”

Bionaut Labs aims to use robots smaller than a grain of rice to deliver medications exactly where they are needed and minimize the side effects for the body.
