Virtual reality (VR) and augmented reality (AR) headsets are becoming more advanced, enabling increasingly engaging and immersive digital experiences. To make VR and AR experiences even more realistic, engineers have been trying to create better systems that produce tactile and haptic feedback matching virtual content.

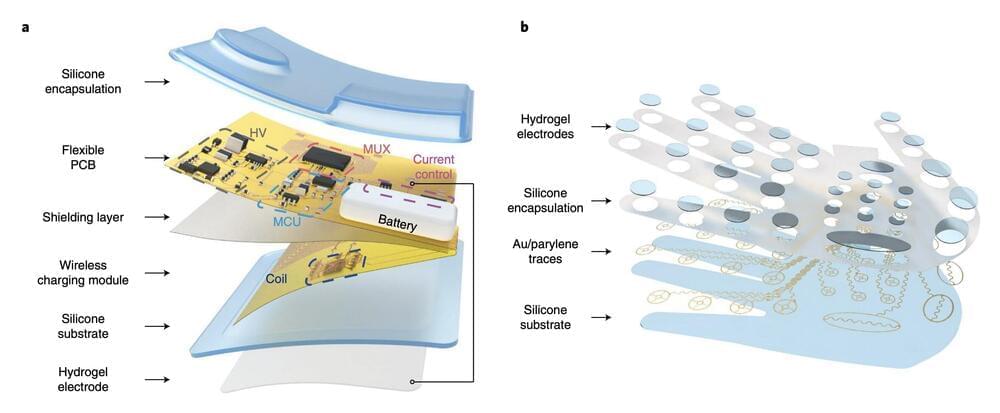

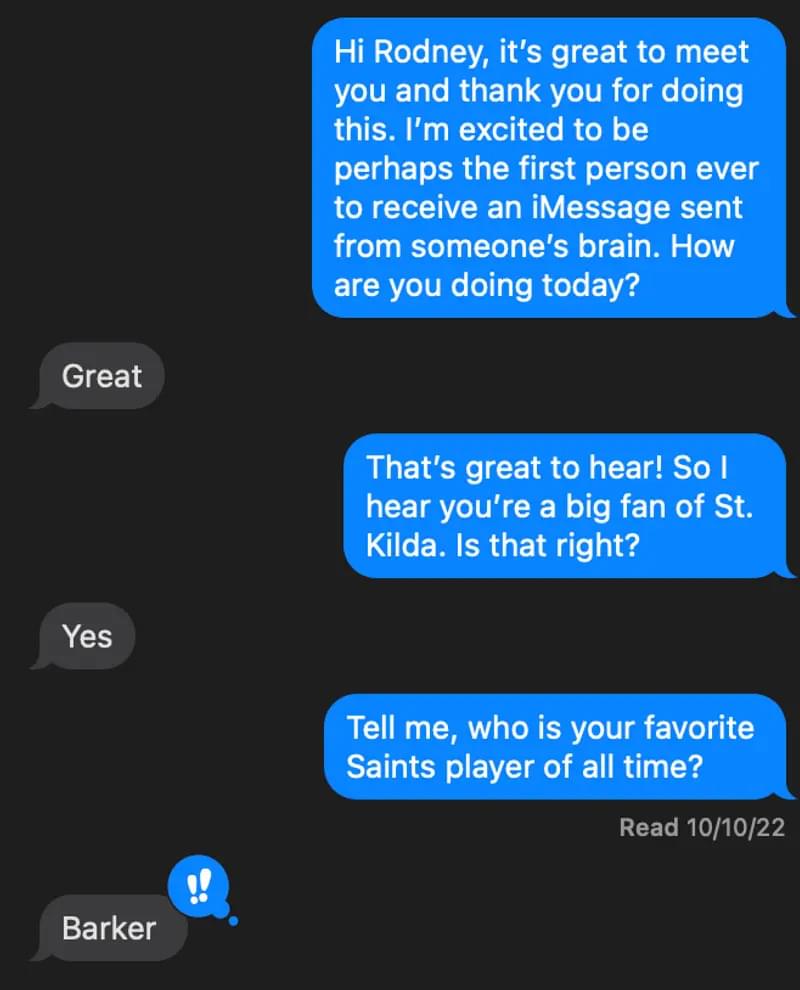

Researchers at the University of Hong Kong, City University of Hong Kong, the University of Electronic Science and Technology of China (UESTC) and other institutes in China have recently created WeTac, a miniaturized, soft and ultrathin wireless electrotactile system that produces tactile sensations on a user's skin. The system, introduced in a paper published in Nature Machine Intelligence, works by delivering electrical current to a user's hand.

“As the tactile sensitivity among different individuals and different parts of the hand within a person varies widely, a universal method to encode tactile information into faithful feedback in hands according to sensitivity features is urgently needed,” Kuanming Yao and his colleagues wrote in their paper. “In addition, existing haptic interfaces worn on the hand are usually bulky, rigid and tethered by cables, which is a hurdle for accurately and naturally providing haptic feedback.”