Albert Einstein was never entirely convinced by quantum mechanics and argued that our understanding of it was incomplete. In particular, he took issue with entanglement, the notion that a particle could be affected by another particle that wasn’t close by.

Experiments since then have shown that quantum entanglement is real and that two entangled particles can stay connected over a distance. Now a new experiment confirms it again, and in a way we haven’t seen before.

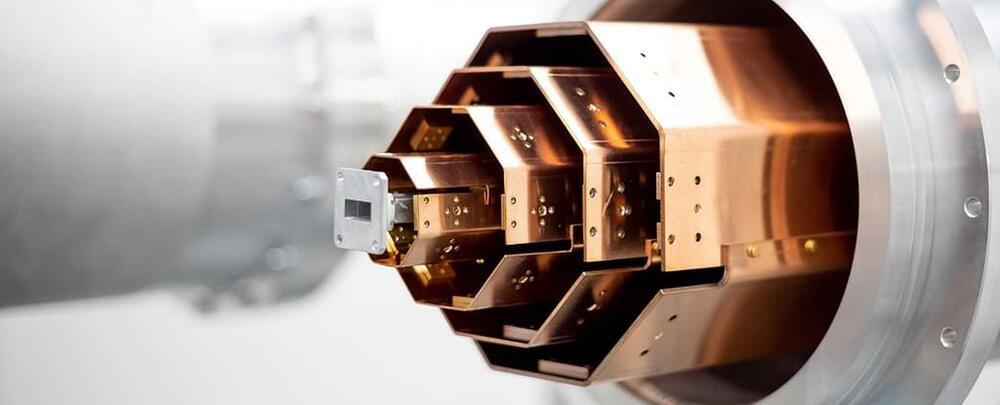

In the new experiment, scientists used a 30-meter-long tube cooled to near absolute zero to run a Bell test, in which randomly chosen measurements are made on two entangled qubits (quantum bits) at the same time.
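To give a feel for what a Bell test checks, here is a minimal sketch of the textbook CHSH form of the test. It is not the analysis used in the experiment. It assumes an idealized, maximally entangled pair (the singlet state), for which quantum mechanics predicts a correlation of -cos(a - b) between measurements made at angles a and b. The function names `correlation` and `chsh` and the angle choices are illustrative assumptions. Any theory in which particles are only influenced by their immediate surroundings keeps the combined value S between -2 and 2; the quantum prediction goes beyond that.

```python
import math

def correlation(a, b):
    """Idealized quantum prediction for a singlet pair measured at
    angles a and b (in radians). Assumes no noise or losses."""
    return -math.cos(a - b)

def chsh(a1, a2, b1, b2):
    """CHSH combination S of four correlations, built from two settings
    per side. Local hidden-variable theories require |S| <= 2."""
    return (correlation(a1, b1) - correlation(a1, b2)
            + correlation(a2, b1) + correlation(a2, b2))

# Measurement angles that maximize the quantum violation (assumed here).
a1, a2 = 0.0, math.pi / 2
b1, b2 = math.pi / 4, 3 * math.pi / 4

s = chsh(a1, a2, b1, b2)
print(f"|S| = {abs(s):.3f} (classical limit: 2)")
```

With these settings, |S| comes out at 2√2, roughly 2.83, which is above the classical limit of 2. A real experiment estimates each correlation from many repeated measurements with randomly chosen settings rather than from a formula.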