Year 2020 o.o!

Explorations into the nature of reality have been undertaken across the ages. In the contemporary world, disparate tools, from gedanken experiments [1–4] and experimental consistency checks [5,6] to machine learning and artificial intelligence, are being used to illuminate the fundamental layers of reality [7]. A theory of everything, a grand unified theory of physics and nature, has so far eluded the world of Physics. The unification of the various forces and interactions in nature has advanced slowly but surely: from the unification of electricity and magnetism in James Clerk Maxwell’s seminal work A Treatise on Electricity and Magnetism [8], to the electroweak unification by Weinberg, Salam, and Glashow [9–11], to the research that established the Standard Model, including the QCD sector, by Murray Gell-Mann and Richard Feynman [12,13]. We now have a few candidate theories of everything, primary among which is String Theory [14]. Unfortunately, we are still some way off from establishing various parts of the theory empirically. Chief among these is the concept of supersymmetry [15], which is an important part of String Theory. No evidence for supersymmetry was found in the first run of the Large Hadron Collider [16]. When the Large Hadron Collider discovered the Higgs boson in 2012 [17–19], the results were problematic for the Minimal Supersymmetric Standard Model (MSSM): a Higgs boson mass of 125 GeV is relatively large for the model and can only be attained with large radiative loop corrections from top squarks, which many theoreticians consider ‘unnatural’ [20]. In the absence of experiments that can test certain frontiers of Physics, largely because of energy constraints at the smallest of scales, the importance of simulations and computational research cannot be overstated.
Gone are the days when Isaac Newton could purportedly sit under an apple tree and infer the concept of classical gravity from an apple that had fallen on his head. Today, growing computational power plays an ever larger role in opening new avenues of research in Physics. For instance, M-Theory, introduced by Edward Witten in 1995 [21], is a promising approach to a unified model of Physics that includes quantum gravity. It extends the formalism of String Theory. Computational tools from machine learning have lately been used to solve M-Theory geometries [22]. TensorFlow, a computing platform normally used for machine learning, helped to find 194 equilibrium solutions for one particular type of M-Theory spacetime geometry [23–25].
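
To give a flavour of what a numerical search for equilibrium solutions can look like, here is a minimal sketch in plain Python. It is not the method or the potential of the cited work: the two-variable potential `V`, the step size, and the starting points are all assumptions chosen for illustration. The idea is to minimise the squared gradient norm S = |∇V|², which is zero exactly where V has an equilibrium, starting from several initial guesses.

```python
# Toy potential (an assumption for illustration): V(x, y) = (x^2 - 1)^2 + y^2.
# Its equilibria (points where grad V = 0) are (-1, 0), (0, 0) and (1, 0).

def grad_v(x, y):
    """Gradient of the toy potential V."""
    return 4.0 * x * (x * x - 1.0), 2.0 * y


def grad_stationarity(x, y):
    """Gradient of S = |grad V|^2, which vanishes at every equilibrium of V."""
    gx, gy = grad_v(x, y)
    # Chain rule: dS/dx = 2 * gx * d(gx)/dx and dS/dy = 2 * gy * d(gy)/dy.
    return 2.0 * gx * (12.0 * x * x - 4.0), 2.0 * gy * 2.0


def descend(x, y, lr=0.005, steps=2000):
    """Plain gradient descent on S from the starting point (x, y)."""
    for _ in range(steps):
        dx, dy = grad_stationarity(x, y)
        x, y = x - lr * dx, y - lr * dy
    return x, y


def find_equilibria(starts):
    """Run the descent from each start and collect the distinct end points."""
    found = set()
    for x0, y0 in starts:
        x, y = descend(x0, y0)
        # Adding 0.0 turns a rounded -0.0 into 0.0 so duplicates merge cleanly.
        found.add((round(x, 4) + 0.0, round(y, 4) + 0.0))
    return sorted(found)


# Hypothetical starting guesses spread around the region of interest.
starts = [(x0, y0) for x0 in (-1.1, -0.3, 0.3, 1.1) for y0 in (-0.5, 0.5)]
equilibria = find_equilibria(starts)
print(equilibria)
```

Minimising S rather than V itself means the search also lands on the saddle point at the origin, which ordinary descent on V would slide away from. A machine learning framework makes the same idea practical in many dimensions by computing the gradients automatically.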

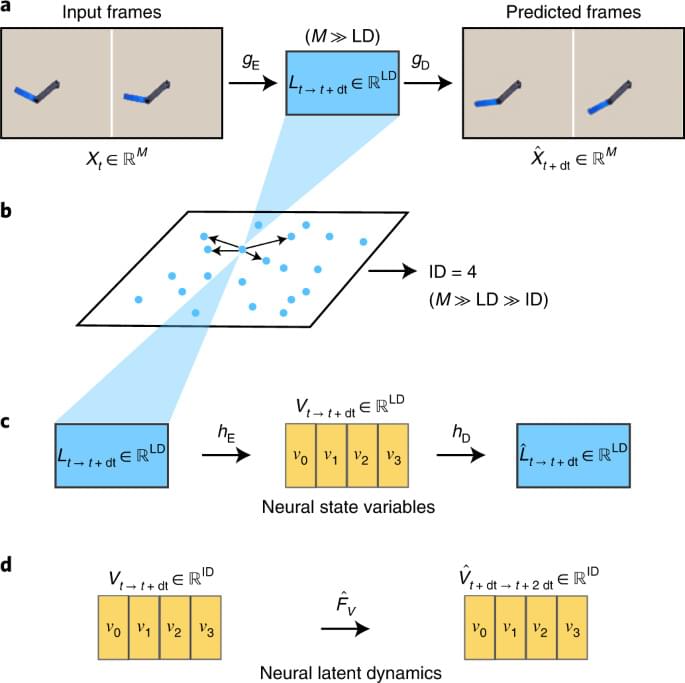

Artificial intelligence has been one of the primary areas of interest in computational approaches to Physics research. In 2020, Matsubara Takashi (Osaka University) and Yaguchi Takaharu (Kobe University), along with their research group, succeeded in developing technology that uses artificial intelligence to simulate phenomena for which we do not have a detailed formula or mechanism [26]. The underlying step is the creation of a model from observational data, under the constraint that the model remains consistent with and faithful to the laws of Physics. To this end, the researchers used digital calculus as well as geometrical approaches, such as those of Riemannian geometry and symplectic geometry.
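
Why it matters that a discrete-time model stays faithful to the laws of Physics can be seen in a much simpler setting. The sketch below is not the method of [26]; it is a textbook comparison, under assumed parameters (a unit-mass, unit-frequency harmonic oscillator, time step 0.1, 1000 steps), between the explicit Euler update and a symplectic Euler update. Only the second respects the symplectic structure of the dynamics, and only the second keeps the energy close to its initial value.

```python
def energy(q, p):
    """Total energy H = p^2/2 + q^2/2 of a unit-mass, unit-frequency oscillator."""
    return 0.5 * (p * p + q * q)


def explicit_euler(q, p, dt):
    """Update both variables from the old state; this map is not symplectic."""
    return q + dt * p, p - dt * q


def symplectic_euler(q, p, dt):
    """Update p first, then use the new p to update q; this map is symplectic."""
    p_new = p - dt * q
    q_new = q + dt * p_new
    return q_new, p_new


def simulate(step, q=1.0, p=0.0, dt=0.1, n_steps=1000):
    """Advance the oscillator with the given update rule and return the final energy."""
    for _ in range(n_steps):
        q, p = step(q, p, dt)
    return energy(q, p)


e_start = energy(1.0, 0.0)
e_euler = simulate(explicit_euler)
e_symplectic = simulate(symplectic_euler)
print(e_start, e_euler, e_symplectic)
```

With explicit Euler the energy is multiplied by (1 + dt²) at every step, so it grows without bound, while the symplectic update keeps it within a few percent of the starting value. Building this kind of structure into a learned model is one way to constrain it to behave physically over long simulations.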