Much like artificial intelligence, quantum computing has the potential to transform many industries. But a cybersecurity threat looms large.

Controlling chemical reactions to generate new products is one of the biggest challenges in chemistry. Advances in this area benefit industry, for example by reducing the waste generated in the manufacture of construction materials or by improving the production of catalysts that accelerate chemical reactions.

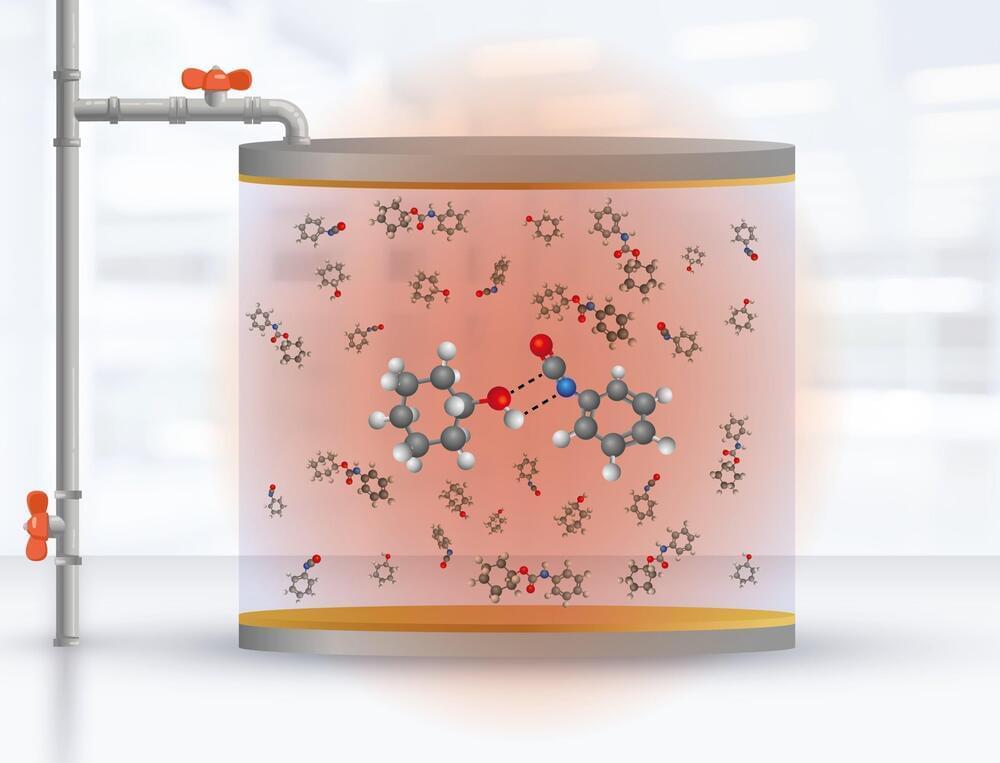

For this reason, over the last 10 years, laboratories around the world working in polariton chemistry, a field that combines tools from chemistry and quantum optics, have developed optical-cavity experiments that use electromagnetic fields to manipulate the chemical reactivity of molecules at room temperature. Some have succeeded in modifying the products of chemical reactions in organic compounds. To date, however, with no relevant advances in the last two years, no research team has been able to propose a general physical mechanism that describes the phenomenon, or to reproduce it and obtain the same measurements consistently.

Now a team of researchers from Universidad de Santiago (Chile), part of the Millennium Institute for Research in Optics (MIRO), led by principal investigator Felipe Herrera, and the chemistry division laboratory of the US Naval Research Laboratory (United States), led by researcher Blake Simpkins, has reported for the first time the manipulation of the formation rate of urethane molecules in a solution contained inside an infrared cavity.

Scientists from several Chinese research institutions collaborated on a study of organ regeneration in mammals, finding that deer antler blastema progenitor cells are a possible source of conserved regeneration cells in higher vertebrates. In the study, published in the journal Science, the researchers suggest the findings have applications in clinical bone repair. With the activation of key characteristic genes, these cells could potentially be used in regenerative medicine for skeletal, long bone, or limb regeneration.

Limb and organ regeneration is a long-coveted goal in medical science. Humans have some limited regenerative abilities, mostly in the liver. If a portion of the liver is removed, the remaining tissue will grow until the organ reaches its original functional size. The lungs, kidneys, and pancreas can also do this, though not as thoroughly or efficiently.

Compare this to a lizard regenerating a tail, a zebrafish replacing a fin, a lobster regrowing a claw, or an axolotl salamander that can rebuild organs, limbs, spinal cord and even missing brain tissue.

This article is part of a VB special issue. Read the full series here: Data centers in 2023: How to do more with less.

The metaverse was once pure science fiction, the idea of a sprawling online universe born 30 years ago in Neal Stephenson’s novel Snow Crash. But it has since been reborn as a realistic destination for many industries. So I asked several people how the metaverse will change data centers in the future.

First, it helps to understand what the metaverse will be. Some see the metaverse as the next version of the internet, also called the spatial web or the 3D web, with a 3D animated foundation that resembles sci-fi movies like Steven Spielberg’s Ready Player One.

On May 8, Shenzhen citizens discovered a significant reduction in their social security pension balances. Some people’s accounts shrank by as much as a third, and the largest loss reached 120,000 RMB (around 17,000 USD). A wave of public outcry ensued, with aggrieved parties accusing the government of arbitrarily “pooling” their hard-earned social security funds. The controversy grew to such an extent that the balance inquiry function of the Shenzhen social security system was promptly suspended.

Following in the footsteps of Shanghai, Shenzhen has become the second major city to carry out social security coordination. Between midnight on April 24, 2023, and 9 am on May 8, the Shenzhen Social Insurance Information System was suspended so it could be integrated into Guangdong Province’s system, thereby linking it to the National Pension Insurance Coordinated Information System for Enterprise Employees. When the system was restored, however, a considerable number of Shenzhen residents were shocked to find that their social security account balances had suddenly decreased, with some accounts seeing a drastic 30–40% plunge. The whereabouts of the diverted funds remains a mystery.

The government’s actions prompted a sharp rebuke. Many people criticised the authorities for appropriating funds without prior consent or notification, especially in a matter as important as social security. As the public searched for the reason behind the move, some pointed to the precarious state of the existing pension and medical insurance funds, whose reserves are very low, and underscored the severe challenges confronting the nation. To mitigate this crisis, the CCP is reportedly exploring various reform measures, including integrating Shenzhen’s social security system with that of Guangdong Province to pool and coordinate the two funds.



ETH Zurich researchers have developed a structure that can switch between stable shapes as needed while being remarkably simple to produce. The key lies in a clever combination of base materials.

During photosynthesis, a symphony of chemicals transforms light into the energy required for plant, algal, and some bacterial life. Scientists now know that this remarkable reaction needs only the smallest possible amount of light, a single photon, to begin.

A US team of researchers in quantum optics and biology showed that a lone photon can start photosynthesis in the purple bacterium Rhodobacter sphaeroides, and they are confident it works in plants and algae since all photosynthetic organisms share an evolutionary ancestor and similar processes.

The team says their findings bolster our knowledge of photosynthesis and will lead to a better understanding of how quantum physics intersects with a wide range of complex biological, chemical, and physical systems, including renewable fuels.