Why aren’t there more robots in homes? This is a surprisingly complex question, and our homes are surprisingly complex places. A big part of the reason autonomous systems are thriving on warehouse and factory floors first is the relative ease of navigating a structured environment. Sure, most systems still require that a space be mapped before they get to work, but once that map is in place, there tends to be little in the way of variation.

Homes, on the other hand, are kind of a nightmare. Not only do they vary dramatically from unit to unit, they’re full of unfriendly obstacles and tend to be fairly dynamic, as furniture is moved around or things are left on the floor. Vacuums are the most prevalent robots in the home, and they’re still being refined after decades on the market.

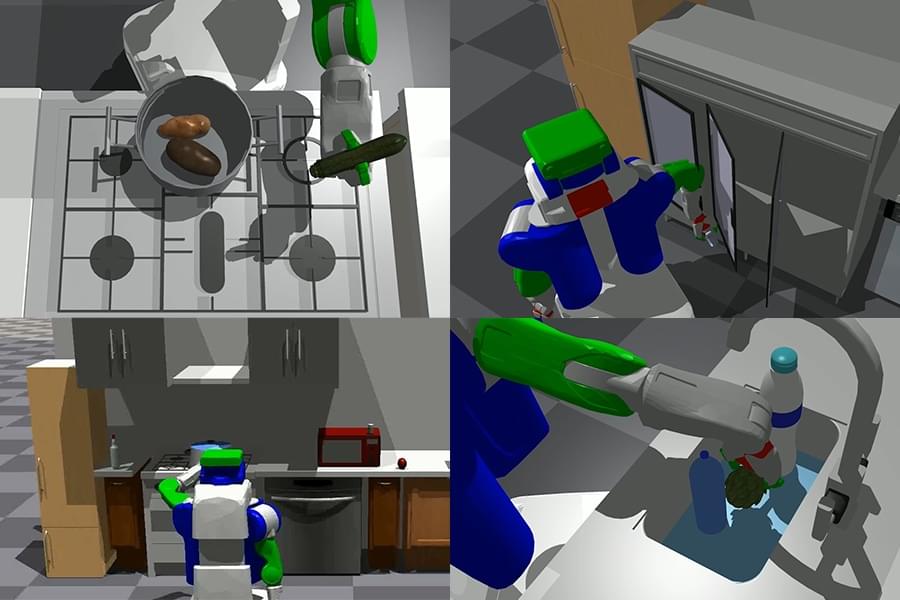

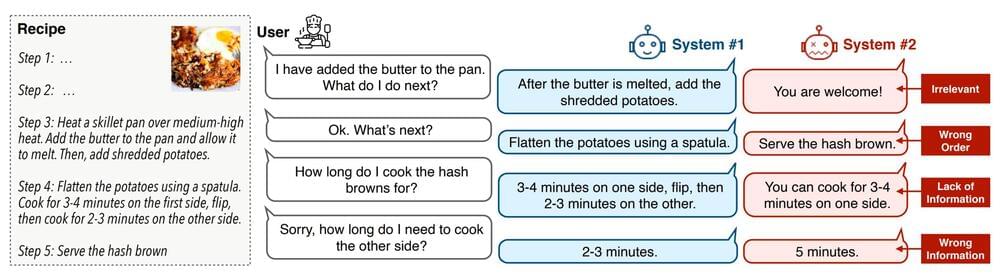

This week, researchers at MIT CSAIL are showcasing PIGINet (Plans, Images, Goal, and Initial facts), a neural network designed to bring task and motion planning to home robotic systems. It is meant to streamline those systems’ ability to create plans of action in different environments.