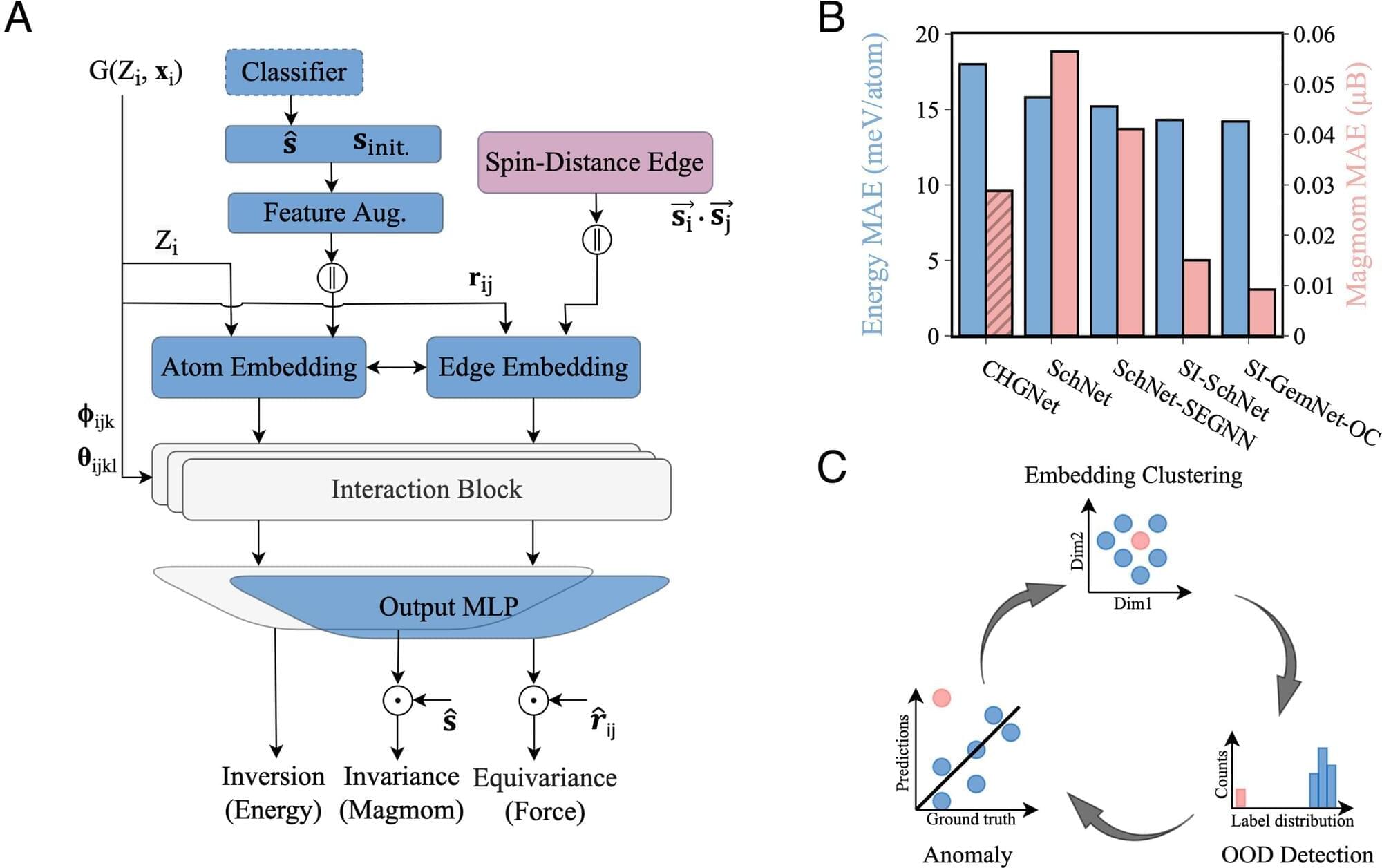

The potential of ChatGPT and Neuralink is limitless. ChatGPT combined with AGI could automate an entire world and do all the work by itself, freeing humans from endless mental labor so we can sit and drink tea or enjoy other leisure activities. If we then miniaturize ChatGPT, Neuralink, and AGI into one package, whether in the Neuralink implant itself or on a smartphone, it could allow for near-infinite money 💵 with little to no effort. That would end mental labor for good, because we could solve any problem or do any job without even needing training. It would be like an everything calculator for all future work, so no human would need to suffer the toil of endless mental labor, and Earth's civilization could evolve into complete abundance.

We spoke to two people pioneering ChatGPT’s integration with Synchron’s brain-computer interface to learn what it’s like to use and where this technology is headed.

Read more on CNET: How This Brain Implant Is Using ChatGPT https://bit.ly/3y5lFkD

0:00 Intro.

0:25 Meet Trial Participant Mark.

0:48 What Synchron’s BCI is for.

1:25 What it’s like to use.

1:51 Why work with ChatGPT?

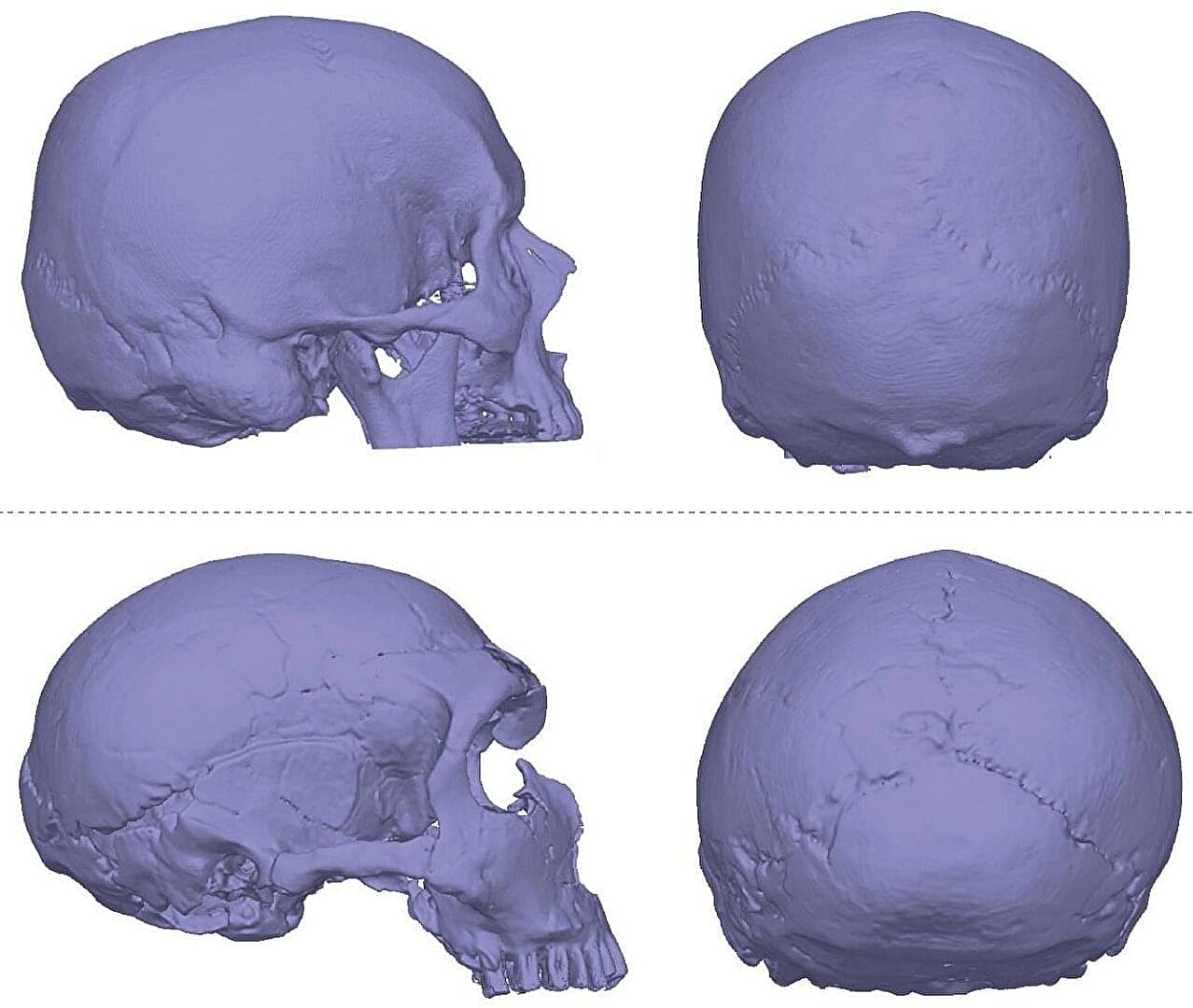

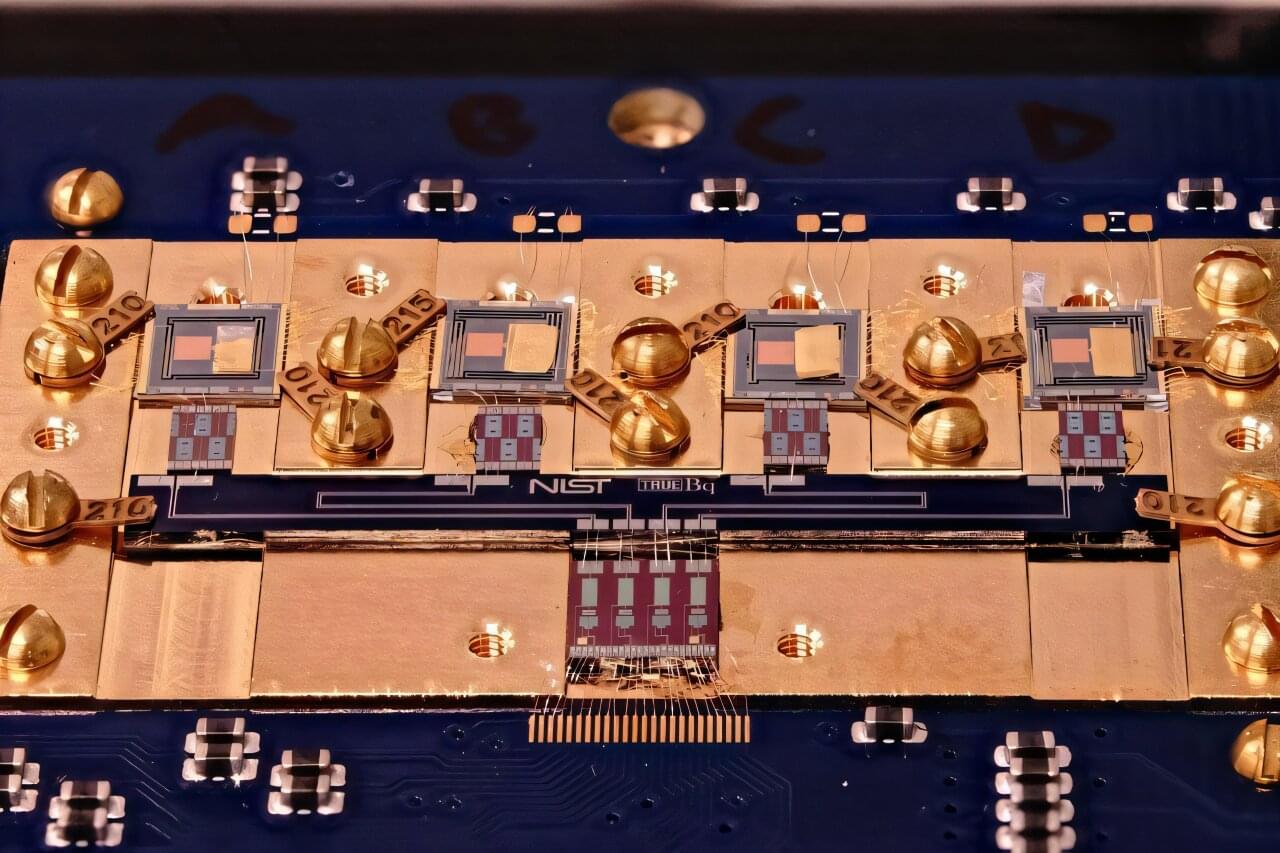

3:05 How Synchron’s BCI works.

3:46 Synchron’s next steps.

4:27 Final Thoughts.
