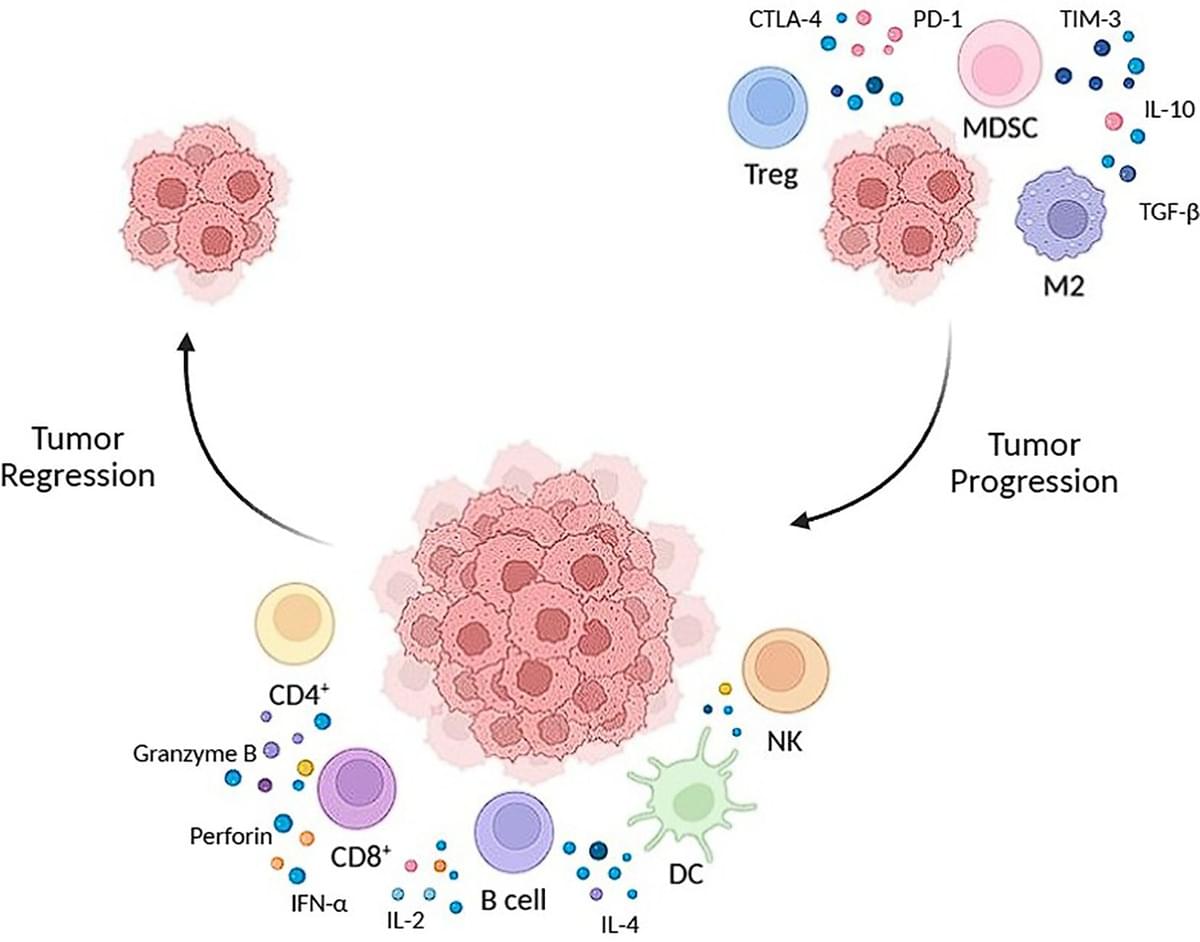

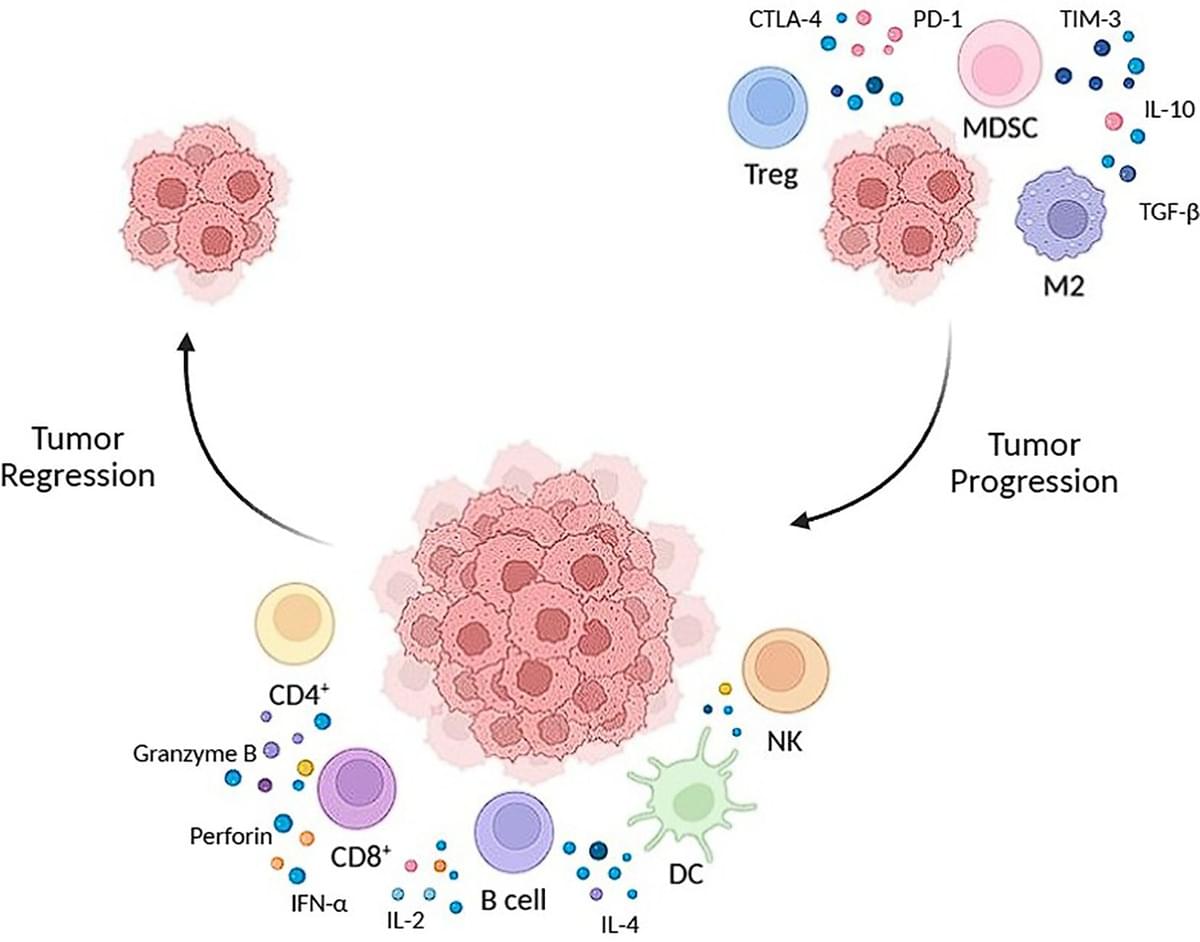

Tumor-infiltrating lymphocytes (TILs) are a diverse population of immune cells that play a central role in tumor immunity and have emerged as critical mediat…

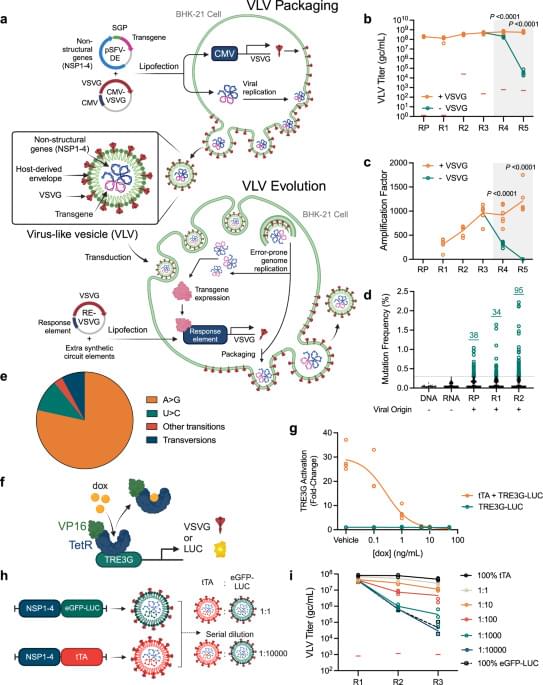

Directed evolution is a process of mutation and artificial selection to breed biomolecules with new or improved activity. Here the authors develop a directed evolution platform (PROTein Evolution Using Selection; PROTEUS) that enables the generation of proteins with enhanced or novel activities within a mammalian context.

“We are excited to be joining Google DeepMind along with some of the Windsurf team,” Mohan and Chen said in a statement. “We are proud of what Windsurf has built over the last four years and are excited to see it move forward with their world class team and kick-start the next phase.”

Google didn’t share how much it was paying to bring on the team. OpenAI was previously reported to be buying Windsurf for $3 billion.

The SunRise Building, a residential complex in Alberta, Canada, has set a Guinness World Record for the largest solar panel mural.

The installation combines art with building-integrated photovoltaics (BIPV), contributing to the building's energy supply. The mural covers 34,500 square feet and provides 267 kW of solar capacity, which powers the building's common areas.

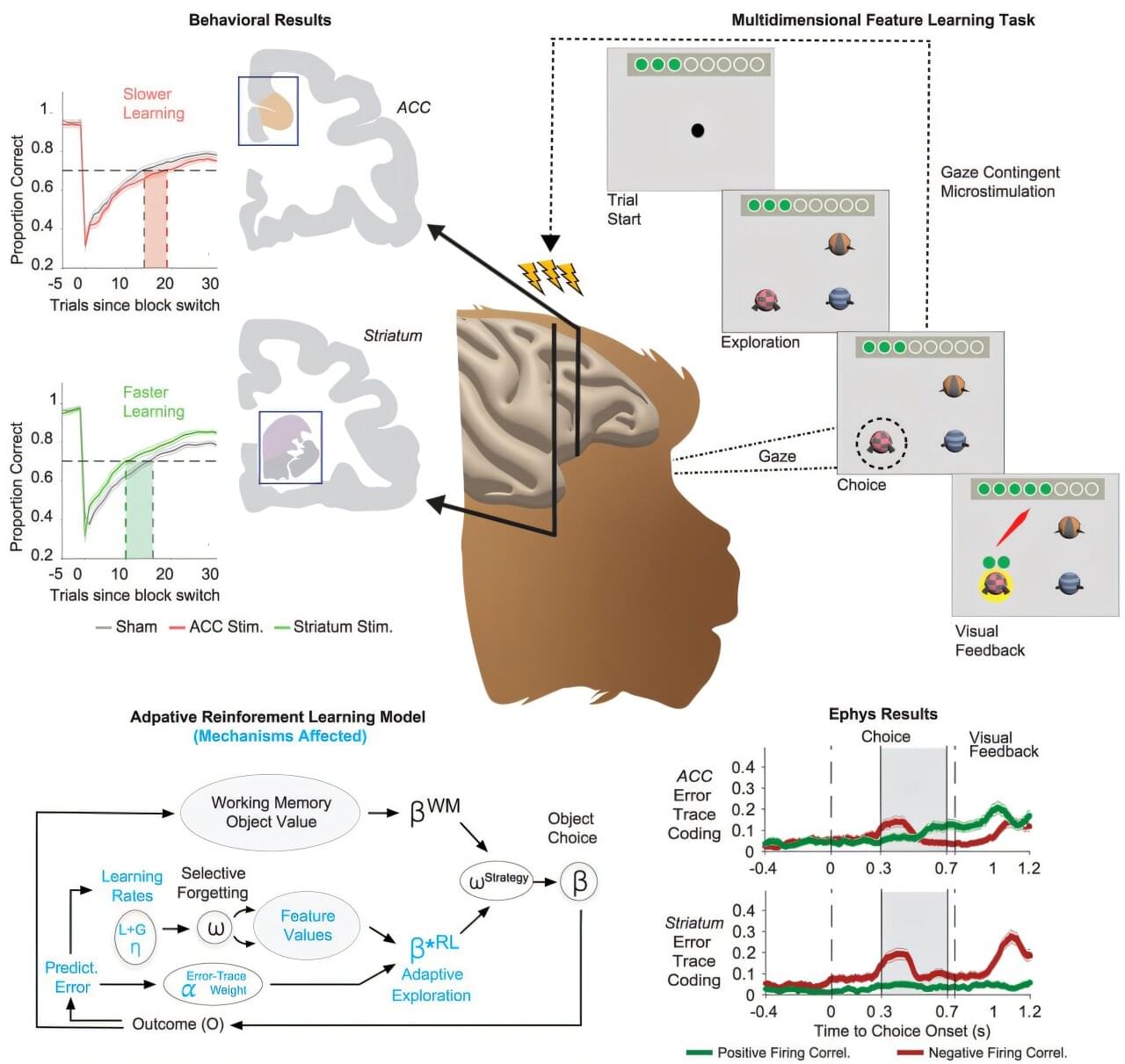

Research led by Thilo Womelsdorf, professor of psychology and biomedical engineering at the Vanderbilt Brain Institute, could revolutionize how brain-computer interfaces are used to treat disorders of memory and cognition.

The study, “Adaptive reinforcement learning is causally supported by anterior cingulate cortex and striatum,” was published June 10, 2025, in the journal Neuron.

According to the researchers, neurologists use electrical brain-computer interfaces (BCIs) to help patients with Parkinson's disease and spinal cord injuries when drugs and other rehabilitative interventions are not effective. For these disorders, the researchers say, brain-computer interfaces have become "electroceuticals" that substitute for pharmaceuticals by directly modulating dysfunctional brain signals.

Shading brings 3D forms to life, beautifully carving out the shape of objects around us. Despite the importance of shading for perception, scientists have long been puzzled about how the brain actually uses it. Researchers from Justus-Liebig-University Giessen and Yale University recently proposed a surprising answer.

It has long been assumed that the brain interprets shading like a physics machine, somehow "reverse-engineering" the combination of shape and lighting that would recreate the shading we see. Not only is this extremely challenging even for advanced computers, but the visual brain is not designed to solve that sort of problem. So the researchers decided instead to start from what is known about the brain when it first receives signals from the eye.

"In some of the first steps of visual processing, the brain passes the image through a series of 'edge-detectors,' essentially tracing it like an etch-a-sketch," Professor Roland W. Fleming of Giessen explains. "We wondered what shading patterns would look like to a brain that's searching for lines." This insight led to an unexpected but clever shortcut to the shading inference problem.