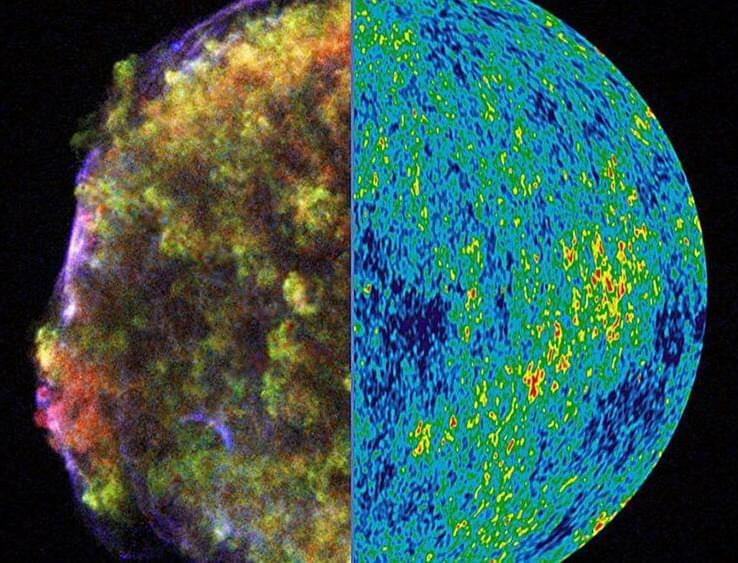

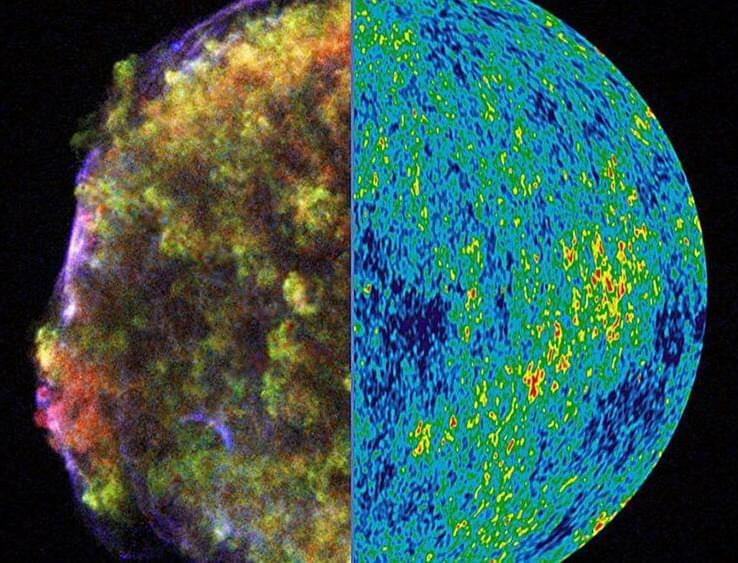

Two conflicting methods to measure the expansion rate of the universe give different results, but researchers could resolve the disparity by watching merging neutron stars explode.

Now that computer-generated imaging is accessible to anyone with a weird idea and an internet connection, the creation of “AI art” is raising questions, and lawsuits. The key questions seem to be: 1) how does it actually work, 2) what work can it replace, and 3) how can the labor of artists be respected through this change?

The lawsuits over AI turn, in large part, on copyright. These copyright issues are so complex that we’ve devoted a whole, separate post to them. Here, we focus on thornier non-legal issues.

How Do AI Art Generators Work?

We often wonder where we might find a truly sustainable and abundant source of energy, and the answer might turn out to be in the emptiness all around us.


More on Infinite Energy https://infiniteenergy.org.


Credits: Zero Point Energy & Vacuum Energy.

Episode 415, October 5, 2023

Written by:

Isaac Arthur

Vlad Ardelean

Produced & narrated by: Isaac Arthur

Editor:

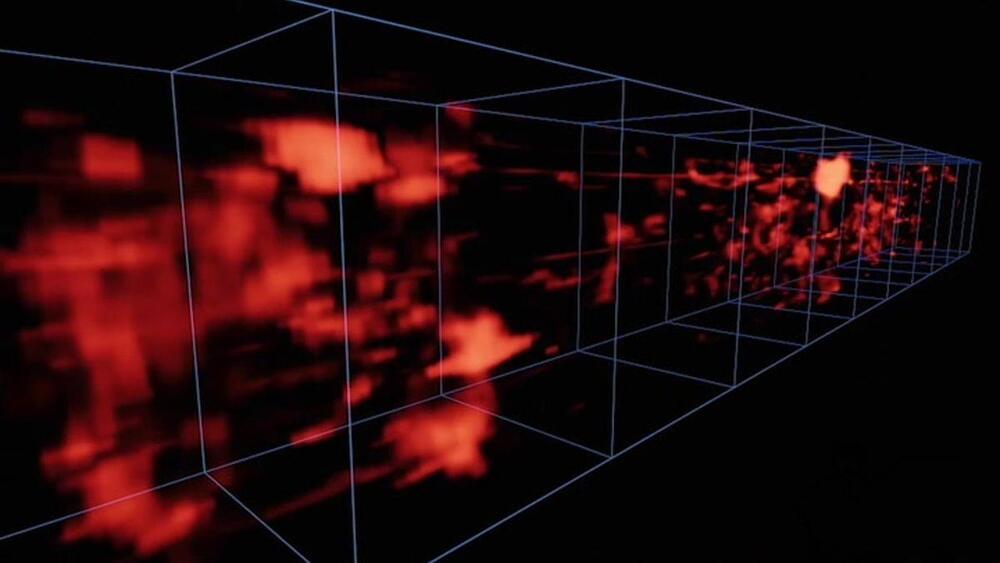

The IEEE has certified the first standard for Li-Fi, a high-speed wireless digital communication technology that uses infrared (IR), visible, and ultraviolet (UV) light.

With the certification of the Li-Fi (light-fidelity) standard, 802.11bb-2023, a new era has opened up for local area wireless communications. Li-Fi refers to wireless data communications using light rather than the radio waves used by Wi-Fi. Compared with Wi-Fi, it is faster, immune to radio-frequency interference, and more difficult to intercept, since light does not pass through opaque walls. It operates by modulating near-infrared, visible, or near-ultraviolet LEDs, making any suitably equipped LED source a potential access point.

The first asteroid sample collected in space by a U.S. spacecraft and brought to Earth is unveiled to the world at NASA’s Johnson Space Center in Houston on…

NASA has issued a request for “lunar freezer” designs that can safely store materials taken from the moon during planned Artemis missions.

According to a request for information (RFI) posted to the federal contracting website SAM.gov, the freezer’s primary use will be transporting scientific and geological samples from the moon to Earth. These samples, the post specifies, will be ones collected during the Artemis program.

Humane, a stealthy software and hardware company, is clearly milking the media hype cycle for all it’s worth. The company’s origin dates all the way back to 2017, when it was founded by former Apple employees Bethany Bongiorno and Imran Chaudhri. In the intervening half-decade, the firm has remained largely shrouded in secrecy as it assembled the pieces of a mystery wearable, which it promises will leverage AI in unique ways.

The company has been buzzy since it first engaged with the media, well before it offered the slightest bit of insight into what it was working on. In spite of (or perhaps because of) such mystery, Humane is now an extremely well-funded early-stage startup.

At the tail end of 2020, it raised a $30 million Series A at a $150 million valuation. The $100 million Series B arrived the following September, with participation from Tiger Global Management, SoftBank Group, BOND, Forerunner Ventures and Qualcomm Ventures. It all seemed like a strong vote of confidence in the still-stealthy firm. This March, it raised another $100 million.