Super-resolution microscopy methods are essential for uncovering the structures of cells and the dynamics of molecules. Since researchers overcame the resolution limit of around 250 nanometers, long considered absolute (an achievement honored with the 2014 Nobel Prize in Chemistry), microscopy methods have progressed rapidly.

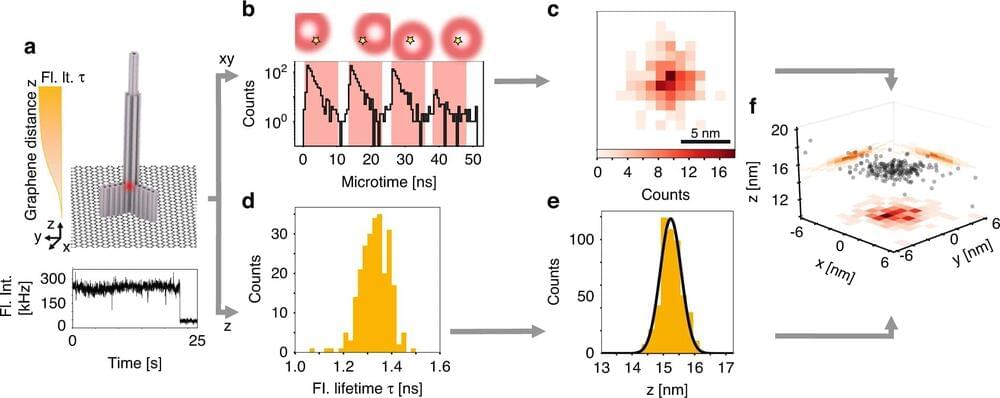

Now a team led by LMU chemist Prof. Philip Tinnefeld has made a further advance by combining several methods, achieving the highest resolution in three-dimensional space and paving the way for a fundamentally new approach to faster imaging of dense molecular structures. The new method achieves an axial resolution of under 0.3 nanometers.

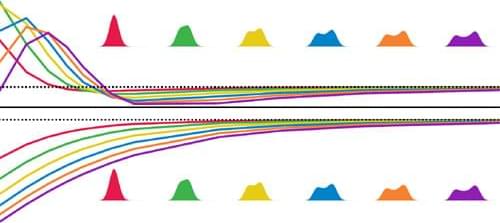

The researchers combined pMINFLUX, a method developed by Tinnefeld's team, with an approach that exploits special properties of graphene as an energy acceptor. pMINFLUX measures the fluorescence intensity of molecules excited by laser pulses, which makes it possible to distinguish their lateral distances with a resolution of just 1 nanometer.
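To make the idea of localizing a molecule from intensity measurements more concrete, here is a minimal Python sketch. It is not the team's implementation. It assumes a generic MINFLUX-style scheme: the molecule is probed by a few doughnut-shaped excitation beams centered at known positions (the 50 nm pattern diameter and beam layout are hypothetical), photon counts have already been assigned to each beam, the doughnut intensity grows quadratically with distance from its center, and there is no background. The position estimate is the grid point that maximizes the multinomial log-likelihood of the counts. The function names are illustrative only.

```python
import numpy as np

# Hypothetical pattern diameter in nm (an assumption for illustration only).
L_NM = 50.0


def beam_positions(diameter):
    """Three beam centers on a circle plus one at the origin (hypothetical layout)."""
    angles = np.deg2rad([90.0, 210.0, 330.0])
    ring = (diameter / 2) * np.stack([np.cos(angles), np.sin(angles)], axis=1)
    return np.vstack([ring, [0.0, 0.0]])  # shape (4, 2)


def expected_fractions(r, beams):
    """Fraction of photons expected from each beam for a molecule at r.

    Assumes the doughnut intensity is quadratic near its zero,
    I_i(r) proportional to |r - r_i|^2, and ignores background.
    """
    d2 = np.sum((beams - r) ** 2, axis=1)
    return d2 / d2.sum()


def estimate_position(counts, beams, half_width=30.0, step=0.25):
    """Grid-search maximum-likelihood estimate of the 2D position (nm)."""
    xs = np.arange(-half_width, half_width + step, step)
    X, Y = np.meshgrid(xs, xs)
    grid = np.stack([X.ravel(), Y.ravel()], axis=1)  # (G, 2) candidate positions
    # Squared distance from every candidate position to every beam center: (G, K)
    d2 = ((grid[:, None, :] - beams[None, :, :]) ** 2).sum(axis=2)
    p = d2 / d2.sum(axis=1, keepdims=True)
    # Multinomial log-likelihood (constant terms dropped); tiny offset avoids log(0)
    loglik = (counts[None, :] * np.log(p + 1e-300)).sum(axis=1)
    return grid[np.argmax(loglik)]


beams = beam_positions(L_NM)
true_r = np.array([5.0, -3.0])
counts = 10_000 * expected_fractions(true_r, beams)  # noiseless expected counts
print(estimate_position(counts, beams))
```

With noisy counts, for example `np.random.default_rng(0).multinomial(2000, expected_fractions(true_r, beams))`, the estimate scatters around the true position, and collecting more photons narrows that scatter.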