Research on two drugs from a class new to HIV medicine, called BH3 mimetics, was presented at July’s 12th International AIDS Society Conference on HIV Science (IAS 2023) in Brisbane. They may contribute to a cure for HIV by killing off long-lived cells that contain HIV genes in their DNA. Notably, venetoclax (Venclexta) and obatoclax killed only cells containing intact HIV DNA, capable of giving rise to new viruses, and spared cells containing defective, harmless HIV DNA.

A number of drugs and treatments from the anti-cancer arsenal, such as HDAC inhibitors, PD-1 inhibitors and therapeutic vaccines, have been investigated in HIV cure research. (And, of course, the six successful cures so far have all used the radical cancer therapy of a stem cell (bone marrow) transplant.)

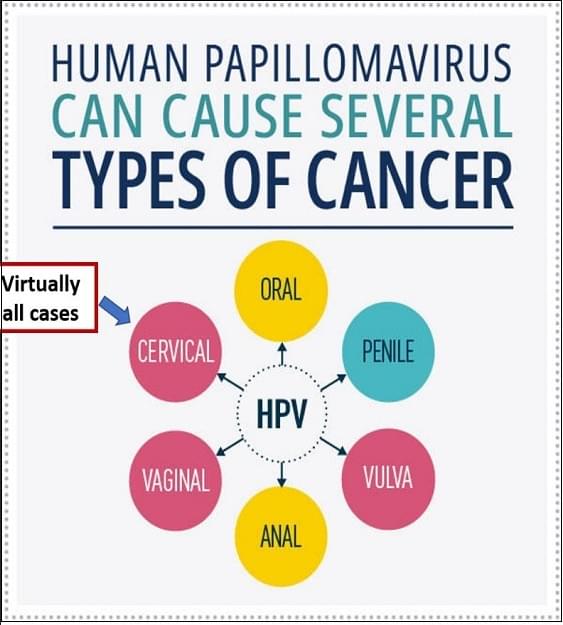

This is not a coincidence: cancer and AIDS are both the end result of changes to the DNA of some of our cells. In cancer, these changes arise in the host DNA; in HIV infection, they are introduced by a virus. Either way, both are the result of ‘rogue genes’ (and some other viruses, such as HPV, directly cause cancers).