Breast cancer screening guidelines recommend that women start getting a mammogram every other year beginning at age 40.

Artificial intelligence is becoming more common in many areas of our society. One area where we may start to see more of it is the medical community, including the management of chronic pain. Researchers recently put artificial intelligence to the test in helping people manage their chronic pain, and the results were promising for those who may have difficulty accessing a therapist.

Cognitive pain therapy intervention can play an important role in helping people who suffer from chronic pain. Our thoughts about pain and what we are experiencing can influence how severe the pain feels and how well we manage it. For some people, getting access to a therapist who can provide cognitive pain therapy is a challenge. As a result, some people never receive therapy they could benefit from, and others do not finish treatment.

Blackred/iStock.

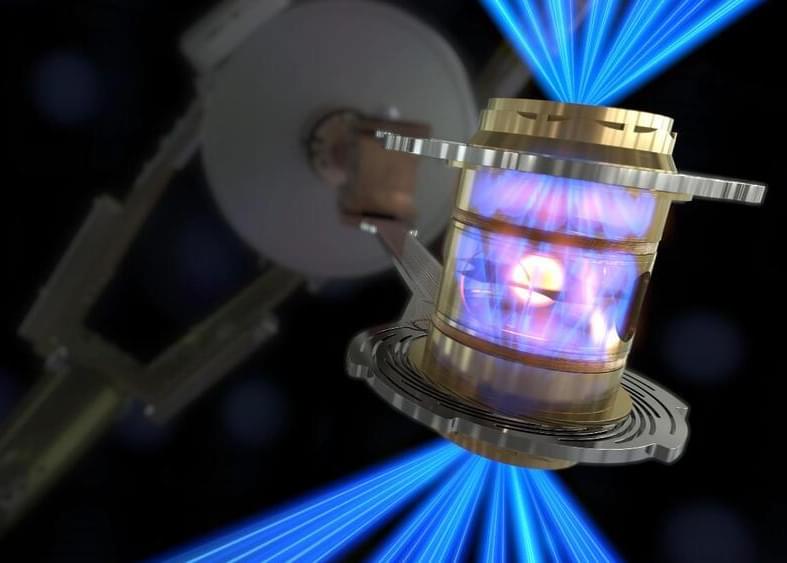

The group, led by researchers from the Technical University of Denmark, used an X-ray microscope technique that could see acoustic waves within millimeter-sized crystals with subpicosecond precision. This enabled the team to see how mechanical energy thermalizes across timeframes ranging from picoseconds to microseconds by directly visualizing the creation, propagation, branching, and energy dissipation of longitudinal and transverse acoustic waves in a diamond.

This summer the federal government took steps to boost connectivity by expanding existing broadband infrastructure. In late June the Biden administration announced a $42.45 billion commitment to the Broadband Equity, Access, and Deployment (BEAD) program, a federal initiative to provide all U.S. residents with reliable high-speed Internet access. The project emphasizes broadband connectivity, but some researchers suggest a more powerful cellular connection could eventually sidestep the need for wired Internet.

The 6G network is so early in its development that it is still not even clear how fast that network will be. Each new generation of wireless technology is defined by the United Nations’ International Telecommunication Union (ITU) as having a specific range of upload and download speeds. These standards have not yet been set for 6G—the ITU will likely do so late next year—but industry experts are expecting it to be anywhere from 10 to 1,000 times faster than current 5G networks. It will achieve this by using higher-frequency radio waves than its predecessors. This will provide a faster connection with fewer network delays.

No matter how fast the new network turns out to be, it could enable futuristic technology, according to Lingjia Liu, a leading 6G researcher and a professor of electrical and computer engineering at Virginia Tech. “Wi-Fi provides good service, but 6G is being designed to provide even better service than your home router, especially in the latency department, to address the growing remote workforce,” Liu says. This would likely result in a wave of new applications that have been unfathomable at current network speeds. For example, your phone could serve as a router, self-driving cars may be able to communicate with one another almost instantaneously, and mobile devices might become completely hands-free. “The speed of 6G will enable applications that we may not even imagine today. The goal for the industry is to have the global coverage and support ready for those applications when they come,” Liu says.

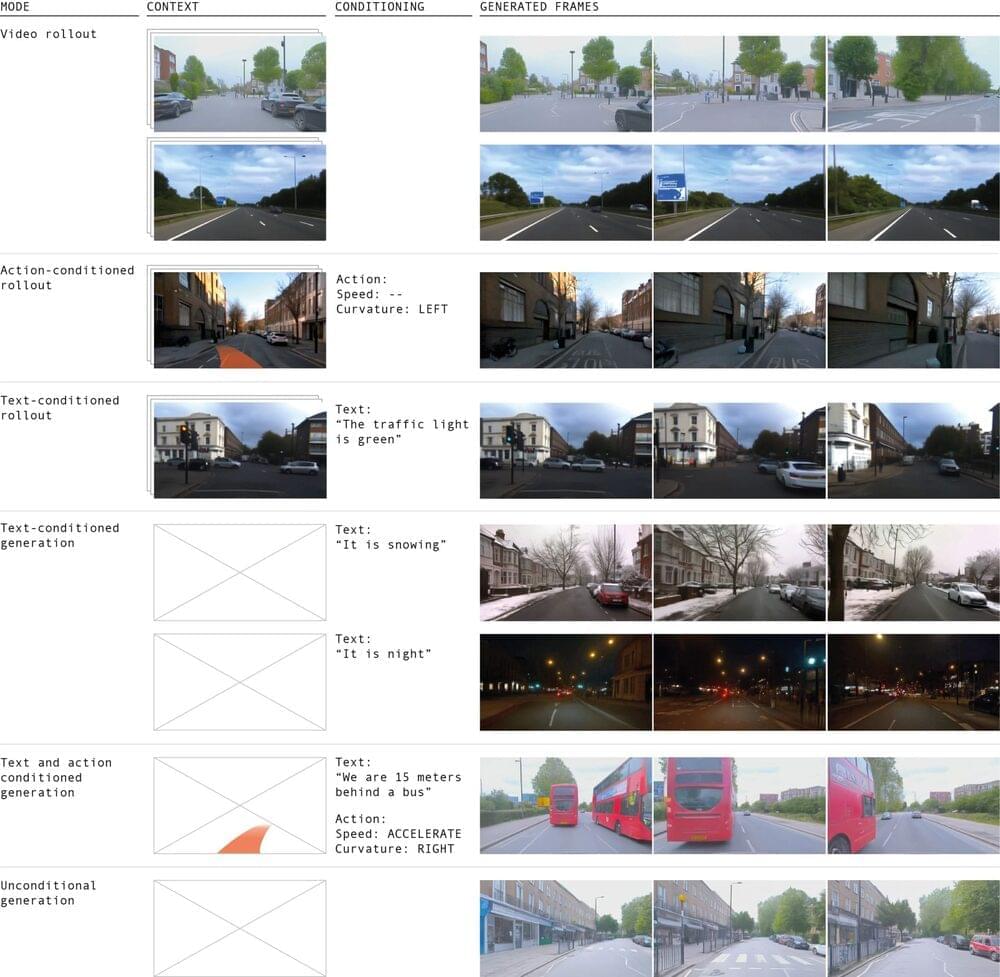

GAIA-1 is a cutting-edge generative world model built for autonomous driving. A world model learns representations of the environment and its future dynamics, providing a structured understanding of the surroundings that can be leveraged for making informed decisions when driving. Predicting future events is a fundamental and critical aspect of autonomous systems. Accurate future prediction enables autonomous vehicles to anticipate and plan their actions, enhancing safety and efficiency on the road. Incorporating world models into driving models could enable them to better understand human decisions and ultimately generalise to more real-world situations.

GAIA-1 is a model that leverages video, text and action inputs to generate realistic driving videos and offers fine-grained control over ego-vehicle behaviour and scene features. Due to its multi-modal nature, GAIA-1 can generate videos from many prompt modalities and combinations.

Examples of types of prompts that GAIA-1 can use to generate videos. GAIA-1 can generate videos by performing the future rollout starting from a video prompt. These future rollouts can be further conditioned on actions to influence particular behaviours of the ego-vehicle (e.g. steer left), or by text to drive a change in some aspects of the scene (e.g. change the colour of the traffic light). For speed and curvature, we condition GAIA-1 by passing the sequence of future speed and/or curvature values. GAIA-1 can also generate realistic videos from text prompts, or by simply drawing samples from its prior distribution (fully unconditional generation).

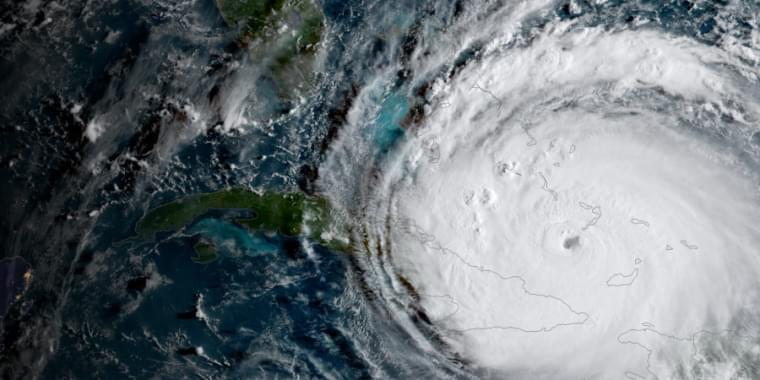

Hurricane Lee wasn’t bothering anyone in early September, churning far out at sea somewhere between Africa and North America. A wall of high pressure stood in its westward path, poised to deflect the storm away from Florida and in a grand arc northeast. Heading where, exactly? It was 10 days out from the earliest possible landfall, eons in weather forecasting, but meteorologists at the European Centre for Medium-Range Weather Forecasts, or ECMWF, were watching closely. The tiniest uncertainties could make the difference between a rainy day in Scotland and serious trouble for the US Northeast.

Typically, weather forecasters would rely on models of atmospheric physics to make that call. This time, they had another tool: a new generation of AI-based weather models developed by chipmaker Nvidia, Chinese tech giant Huawei, and Google’s AI unit DeepMind.

The National Security Agency is starting an artificial intelligence security center, a crucial mission as AI capabilities are increasingly acquired, developed and integrated into U.S. defense and intelligence systems, the agency’s outgoing director announced Thursday.

Army Gen. Paul Nakasone said the center would be incorporated into the NSA’s Cybersecurity Collaboration Center, where it works with private industry and international partners to harden the U.S. defense-industrial base against threats from adversaries led by China and Russia.

“We maintain an advantage in AI in the United States today. That AI advantage should not be taken for granted,” Nakasone said at the National Press Club, emphasizing the threat from Beijing in particular.