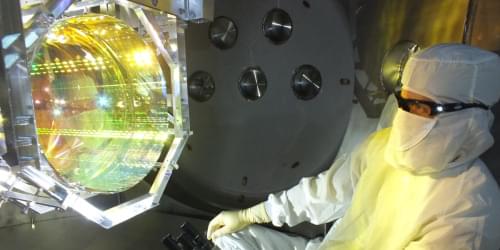

A crystalline reflective coating being considered for future gravitational-wave detectors exhibits peculiar noise features at cryogenic temperatures.

Imagine you’re in an airplane with two pilots, one human and one computer. Both have their “hands” on the controllers, but they’re always looking out for different things. If they’re both paying attention to the same thing, the human gets to steer. But if the human gets distracted or misses something, the computer quickly takes over.

Meet the Air-Guardian, a system developed by researchers at the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL). As modern pilots grapple with an onslaught of information from multiple monitors, especially during critical moments, Air-Guardian acts as a proactive co-pilot: a partnership between human and machine, rooted in understanding attention.

But how does it determine attention, exactly? For humans, it uses eye-tracking, and for the neural system, it relies on something called “saliency maps,” which pinpoint where attention is directed. The maps serve as visual guides that highlight key regions within an image, helping people understand how complex algorithms behave. Air-Guardian identifies early signs of potential risks through these attention markers, instead of only intervening during safety breaches like traditional autopilot systems.
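To make the idea concrete, here is a minimal Python sketch of attention-based handover. It is not the CSAIL implementation, which the article does not describe. The grid representation, the overlap measure (histogram intersection), the `threshold` value, and the function names `attention_overlap` and `who_steers` are all assumptions chosen for illustration.

```python
from typing import List

Grid = List[List[float]]  # hypothetical attention map: one weight per image cell


def normalize(grid: Grid) -> Grid:
    """Scale a non-negative attention map so that its cells sum to 1."""
    total = sum(sum(row) for row in grid)
    if total == 0:
        raise ValueError("attention map has no mass")
    return [[value / total for value in row] for row in grid]


def attention_overlap(human: Grid, machine: Grid) -> float:
    """Histogram intersection of two maps: 1.0 if identical, 0.0 if disjoint."""
    h, m = normalize(human), normalize(machine)
    return sum(min(a, b) for h_row, m_row in zip(h, m) for a, b in zip(h_row, m_row))


def who_steers(human: Grid, machine: Grid, threshold: float = 0.5) -> str:
    """Assumed arbitration rule: the human steers while attention agrees."""
    return "human" if attention_overlap(human, machine) >= threshold else "computer"


# The saliency map (machine) flags the top-right cell of a 2x2 image.
saliency = [[0.0, 1.0], [0.0, 0.0]]
gaze_on_hazard = [[0.0, 1.0], [0.0, 0.0]]   # pilot looks at the same cell
gaze_elsewhere = [[1.0, 0.0], [0.0, 0.0]]   # pilot looks somewhere else

print(who_steers(gaze_on_hazard, saliency))
print(who_steers(gaze_elsewhere, saliency))
```

In this toy setup the pilot keeps control when the gaze map and the saliency map coincide, and control passes to the computer when they do not overlap at all. A real system would presumably blend control more smoothly than this hard switch.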

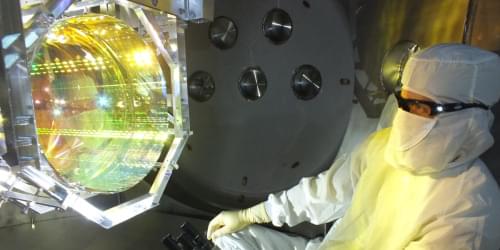

Like fingerprints, a firearm’s discarded shell casings have unique markings. This allows forensic experts to compare casings from a crime scene with those from a suspect’s gun. Finding and reporting a mismatch can help free the innocent, just as a match can incriminate the guilty.

But a new study from Iowa State University researchers reveals mismatches are more likely than matches to be reported as “inconclusive” in cartridge-case comparisons.

“Firearms experts are failing to report evidence that’s favorable to the defense, and it has to be addressed and corrected. This is a terrible injustice to innocent people who are counting on expert examiners to issue a report showing that their gun was not involved but instead are left defenseless by a report that says the result was inconclusive,” says Gary Wells, an internationally recognized pioneer and scholar in eyewitness memory research.

UCLA pediatric pulmonologist Mindy Ross, MD, MBA, MAS, talks about diagnosis and evaluation of chronic cough in children.

As we plunge head-on into the game-changing dynamic of general artificial intelligence, observers are weighing in on just how huge an impact it will have on global societies. Will it drive explosive economic growth as some economists project, or are such claims unrealistically optimistic?

Few question the potential for change that AI presents. But in a world of litigation, privacy concerns and ethical boundaries, will AI be able to thrive?

Two researchers from Epoch, a research group evaluating the progression of artificial intelligence and its potential impacts, decided to explore arguments for and against the likelihood that innovation ushered in by AI will lead to explosive growth comparable to the Industrial Revolution of the 18th and 19th centuries.

Microsoft has joined the race for large language model (LLM) application frameworks with its open source Python library, AutoGen.

As described by Microsoft, AutoGen is “a framework for simplifying the orchestration, optimization, and automation of LLM workflows.” The fundamental concept behind AutoGen is the creation of “agents,” which are programming modules powered by LLMs such as GPT-4. These agents interact with each other through natural language messages to accomplish various tasks.
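The agent concept can be illustrated with a toy sketch in plain Python. It deliberately does not use AutoGen's API: the `Agent` class, the `converse` function, the `"DONE"` stop signal, and the lambda reply functions standing in for LLM calls are all hypothetical, chosen only to show two modules exchanging natural-language messages until a task is complete.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Agent:
    """Toy agent: reply_fn is a stand-in for a call to an LLM."""
    name: str
    reply_fn: Callable[[str], str]
    inbox: List[Tuple[str, str]] = field(default_factory=list)

    def receive(self, sender: str, message: str) -> str:
        """Record the incoming message and produce a reply."""
        self.inbox.append((sender, message))
        return self.reply_fn(message)


def converse(a: Agent, b: Agent, opening: str, max_turns: int = 4) -> List[Tuple[str, str]]:
    """Alternate messages between two agents until one replies with DONE."""
    transcript = [(a.name, opening)]
    speaker, listener, message = a, b, opening
    for _ in range(max_turns):
        reply = listener.receive(speaker.name, message)
        transcript.append((listener.name, reply))
        if "DONE" in reply:  # assumed termination signal
            break
        speaker, listener, message = listener, speaker, reply
    return transcript


# Canned reply functions replace real model calls in this sketch.
writer = Agent("writer", lambda msg: "Draft: " + msg.upper())
reviewer = Agent("reviewer", lambda msg: "DONE" if msg.startswith("Draft:") else "Please draft: " + msg)

for name, text in converse(reviewer, writer, "summarize the report"):
    print(f"{name}: {text}")
```

Here the reviewer opens the conversation, the writer answers with a draft, and the reviewer ends the exchange. In a framework like the one described, the reply functions would be backed by a model such as GPT-4 rather than canned rules.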

In case you missed the hype, Humane is a startup founded by ex-Apple executives that’s working on a device called the “Ai Pin” that uses projectors, cameras and AI tech to act as a sort of wearable AI assistant. Now, the company has unveiled the Ai Pin in full at a Paris fashion show (Humane x Coperni) as a way to show off the device’s new form factor. “Supermodel Naomi Campbell is the first person outside of the company to wear the device in public, ahead of its full unveiling on November 9,” Humane wrote.

The company describes the device as a “screenless, standalone device and software platform built from the ground up for AI.” It’s powered by an “advanced” Qualcomm Snapdragon platform and equipped with a mini-projector that takes the place of a smartphone screen, along with a camera and speaker. It can perform functions like AI-powered optical recognition, but is also supposedly “privacy-first” thanks to qualities like no wake word and thus no “always on” listening.