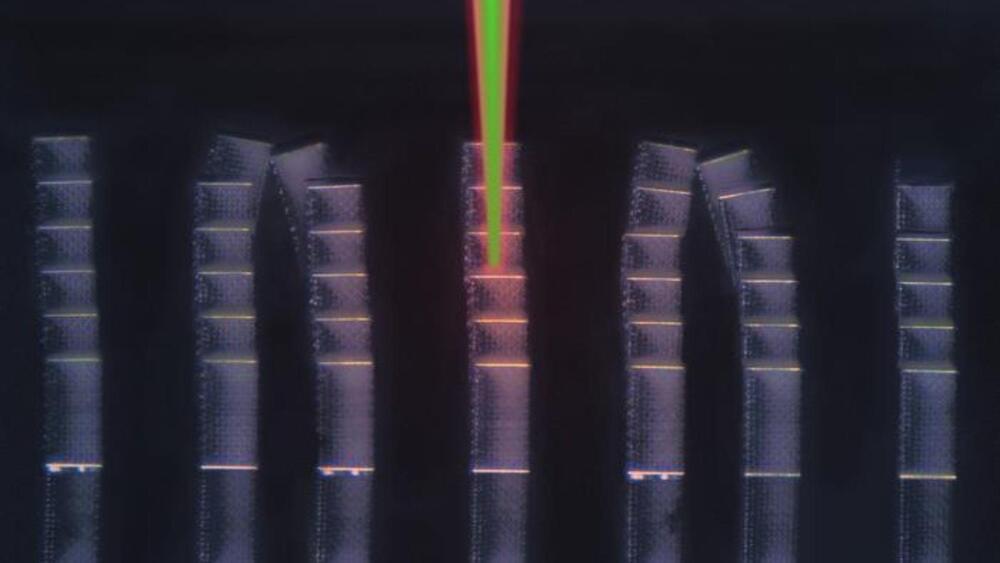

3D printing is advancing rapidly, and the range of materials that can be used has expanded considerably. While the technology was previously limited to fast-curing plastics, it has now been made suitable for slow-curing plastics as well. Slow-curing plastics have decisive advantages: they are more elastic, more durable, and more robust.

A new technology developed by researchers at ETH Zurich and a US start-up makes it possible to use such polymers. As a result, researchers can now 3D print complex, more durable robots from a variety of high-quality materials in one go. The technology also makes it easy to combine soft, elastic, and rigid materials in a single print, and to create delicate structures and parts with cavities as desired.