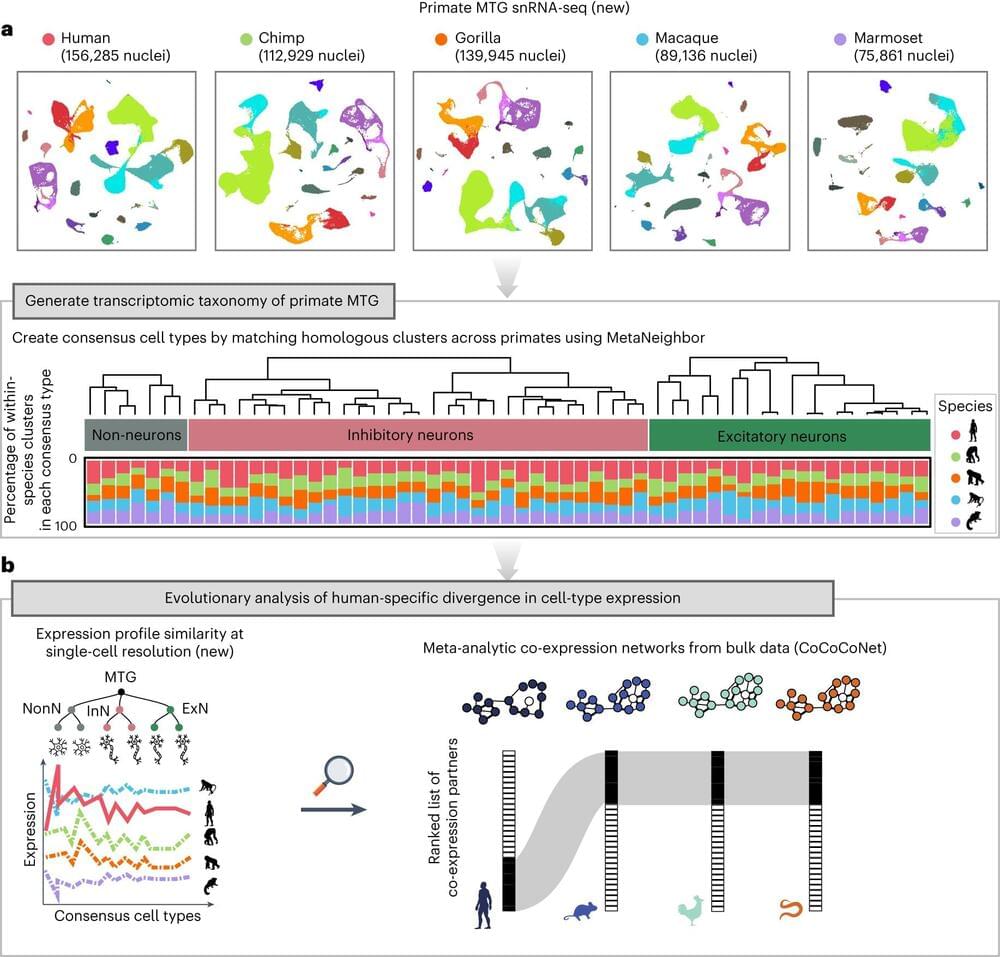

An international team led by researchers at the University of Toronto has uncovered over 100 genes that are common to primate brains but have undergone evolutionary divergence only in humans—and which could be a source of our unique cognitive ability.

The researchers, led by Associate Professor Jesse Gillis from the Donnelly Centre for Cellular and Biomolecular Research and the department of physiology at U of T’s Temerty Faculty of Medicine, found the genes are expressed differently in the brains of humans compared to four of our relatives: chimpanzees, gorillas, macaques and marmosets.

The findings, published in Nature Ecology & Evolution, suggest that reduced selective pressure, or tolerance to loss-of-function mutations, may have allowed the genes to take on higher-level cognitive capacity. The study is part of the Human Cell Atlas, a global initiative to map all human cells to better understand health and disease.

Some new concepts for me but interesting and a good step forward.

A team of researchers working on DARPA’s Optimization with Noisy Intermediate-Scale Quantum devices (ONISQ) program has created the first-ever quantum circuit with logical quantum bits (qubits), a key discovery that could accelerate fault-tolerant quantum computing and revolutionize concepts for designing quantum computer processors.

The ONISQ program began in 2020 seeking to demonstrate a quantitative advantage of quantum information processing by leapfrogging the performance of classical-only supercomputers to solve a particularly challenging class of problems known as combinatorial optimization. The program pursued a hybrid concept to combine intermediate-sized “noisy” (or error-prone) quantum processors with classical systems focused specifically on solving optimization problems of interest to defense and commercial industry. Teams were selected to explore various types of physical, non-logical qubits including superconducting qubits, ion qubits, and Rydberg atomic qubits.

The Harvard research team, supported by MIT, QuEra Computing, Caltech, and Princeton, focused on exploring the potential of Rydberg qubits, and in the course of their research made a major breakthrough: The team developed techniques to create error-correcting logical qubits using arrays of “noisy” physical Rydberg qubits. Logical qubits are a critical missing piece in the puzzle to realize fault-tolerant quantum computing. In contrast to error-prone physical qubits, logical qubits are error-corrected to maintain their quantum state, making them useful for solving a diverse set of complex problems.

As Canadian politicians continue an intense debate over emissions policies, a new study has found that the country’s carbon pricing scheme in British Columbia has a health benefit: Air in the Pacific province is now cleaner to breathe.

British Columbia (BC) introduced a carbon tax in 2008.

Per- and polyfluoroalkyl substances (PFAS), manufactured chemicals used in products such as food packaging and cosmetics, can lead to reproductive problems, increased cancer risk and other health issues. A growing body of research has also linked the chemicals to lower bone mineral density, which can lead to osteoporosis and other bone diseases. But most of those studies have focused on older, non-Hispanic white participants and only collected data at a single point in time.

Now, researchers from the Keck School of Medicine of USC have replicated those results in a longitudinal study of two groups of young participants, primarily Hispanics, a group that faces a heightened risk of bone disease in adulthood.

“This is a population completely understudied in this area of research, despite having an increased risk for bone disease and osteoporosis,” said Vaia Lida Chatzi, MD, Ph.D., a professor of population and public health sciences at the Keck School of Medicine and the study’s senior author.

📸 Watch this video on Facebook https://www.facebook.com/share/v/dv8r6G2mywg5NthT/?mibextid=qi2Omg

Accessibility empowers innovation for everyone.

Technology can empower the 1+ billion people living with disabilities to achieve more, and the benefit is for us all. Solutions built with inclusive design and accessibility top of mind can help improve our experience, productivity, and engagement.

We’re proud of Microsoft partners empowering a more accessible world. These partners are driving innovation in assistive technology, enabling more inclusive workplaces, and increasing disability inclusion and support for mental health needs in their communities. Join us in supporting partners building for a more inclusive and accessible world.

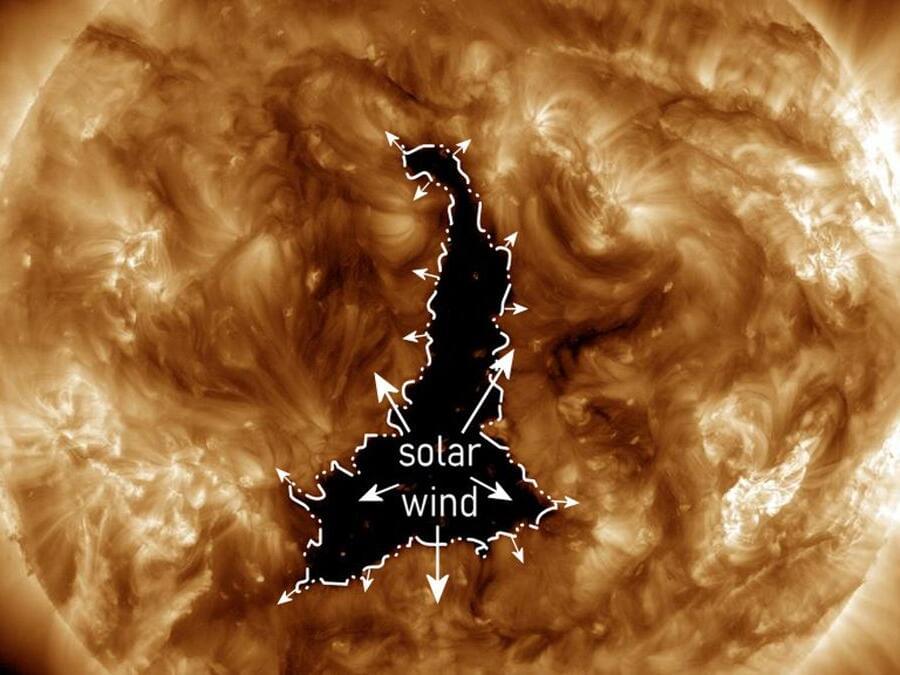

An enormous dark hole has opened up in the sun’s surface and is spewing powerful streams of unusually fast radiation, known as solar wind, right at Earth. The size and orientation of the temporary gap, which is wider than 60 Earths, are unprecedented at this stage of the solar cycle, scientists say.

The giant dark patch on the sun, known as a coronal hole, took shape near the sun’s equator on Dec. 2 and reached its maximum width of around 497,000 miles (800,000 kilometers) within 24 hours, Spaceweather.com reported. Since Dec. 4, the solar void has been pointing directly at Earth.

Experts initially predicted this most recent hole could spark a moderate (G2) geomagnetic storm, which could trigger radio blackouts and strong auroral displays for the next few days. However, the solar wind has been less intense than expected, so the resulting storm has only been weak (G1) so far, according to Spaceweather.com. But auroras are still possible at high latitudes.

On the internet, nothing is safe — not even your DNA, apparently.

That’s the dystopian lesson from the commercial genetic testing company 23andMe, which disclosed on Friday in a regulatory filing that hackers managed to access information on about 14,000 users, or 0.1 percent of its customer base.

But the problem goes beyond this relatively small number of people. Because the website allows users to share DNA information with other users in order to find relatives, the true number affected is orders of magnitude larger, with about 6.9 million customers having their personal information compromised, according to TechCrunch. Big yikes on that figure, because it affects something like half of the 14 million users at 23andMe.