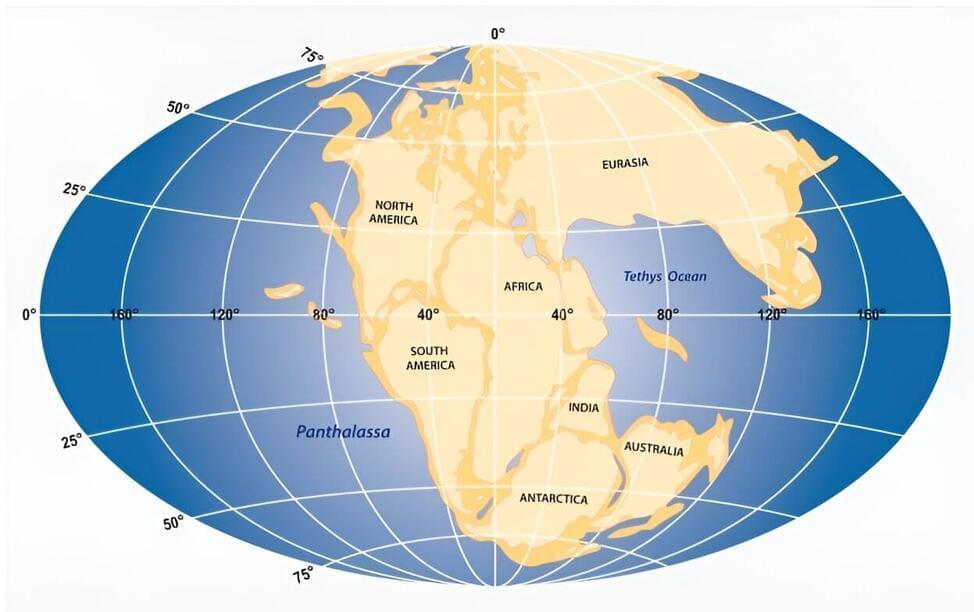

A recent study published in the Journal of Geophysical Research: Solid Earth sheds new light on the formation of the East Coast of the United States—a “passive margin,” in geologic terms—during the breakup of the supercontinent Pangea and the opening of the Atlantic Ocean around 230 million years ago.

In geology, passive margins are “quiet” areas where land meets the ocean, with minimal faulting or magmatism. Understanding how they form matters for several reasons: they are stable regions where hydrocarbon resources are extracted, and their sedimentary archive preserves a record of our planet’s climate reaching back millions of years.

The study, co-authored by scientists from the University of New Mexico, SMU seismologist Maria Beatrice Magnani, and scientists from Northern Arizona University and USC, examines the structure of rocks and the amount of magma-derived rock along the East Coast, and how both change along the margin. Those variations may be tied to how the continent was pulled apart when Pangea fragmented. This event may also have influenced the structure of the Mid-Atlantic Ridge, a vast underwater mountain system running down the center of the Atlantic Ocean.