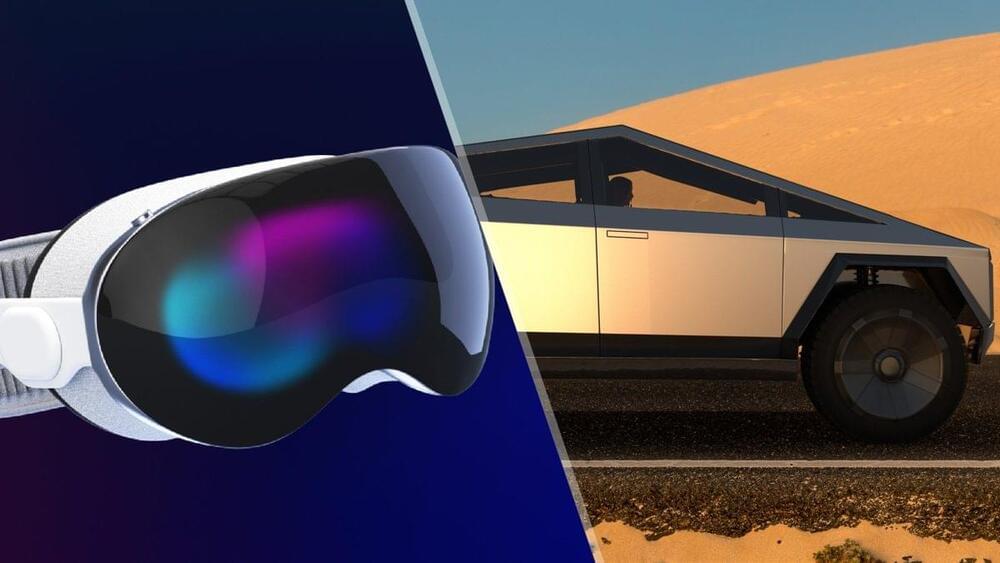

Apparently, the Vision Pro can double as a key for one’s Tesla.

Sidewalk delivery robot services appear to be stalling left and right, but a pioneer in the concept says it is profitable and has now raised a round of funding to scale up to meet market demand. Starship Technologies, a startup out of Estonia that was an early mover in the delivery robotics space, has picked up $90 million in funding as it works to cement its position at the top of its category.

This latest investment round is being co-led by two previous backers: Plural, the VC with roots in Estonia and London that announced a new $430 million fund last month; and Iconical, the London-based investor backed by Janus Friis, the serial entrepreneur who was a co-founder of Skype, and who is also a co-founder of Starship itself.

It brings the total raised by Starship to $230 million, with previous backers including the Finnish-Japanese firm NordicNinja, the European Investment Bank, Morpheus Ventures and TDC.

In a recent breakthrough, DNA sequencing technology has uncovered the culprit behind cassava witches’ broom disease: the fungus genus Ceratobasidium. The cutting-edge nanopore technology used for this discovery was first developed to track the COVID-19 virus in Colombia, but is equally suited to identifying and reducing the spread of plant viruses.

The findings, published in Scientific Reports, will help plant pathologists in Laos, Cambodia, Vietnam and Thailand protect farmers’ valued cassava harvest.

“In Southeast Asia, most smallholder farmers rely on cassava. Its starch-rich roots form the basis of an industry that supports millions of producers. In the past decade, however, cassava witches’ broom disease has stunted plants, reducing harvests to levels that barely permit affected farmers to make a living,” said Wilmer Cuellar, Senior Scientist at the Alliance of Bioversity and CIAT.

The recent move to open access, in which researchers pay a fee to publish an article in a journal, has also encouraged some publishers to boost revenues by publishing as many papers as possible. At the same time, there has been a rise in retractions, especially of fabricated or manipulated manuscripts sold by “paper mills”. Last year, for example, more than 10,000 journal articles were retracted – a record high – with about 8,000 of them from journals owned by Hindawi, a London-based subsidiary of the publicly traded publisher Wiley.

The new “purpose-led” coalition is designed to show how the three learned-society publishers have a business model that is not like that of profit-focussed corporations. In particular, they plough all the money generated from publishing back into science by supporting initiatives such as educational training, mentorship, awards and grants. “Purpose-led publishing is about our dedication to science, and to the scientific community,” says Antonia Seymour, IOP Publishing’s chief executive. “We’re proudly declaring that science is our only shareholder.”

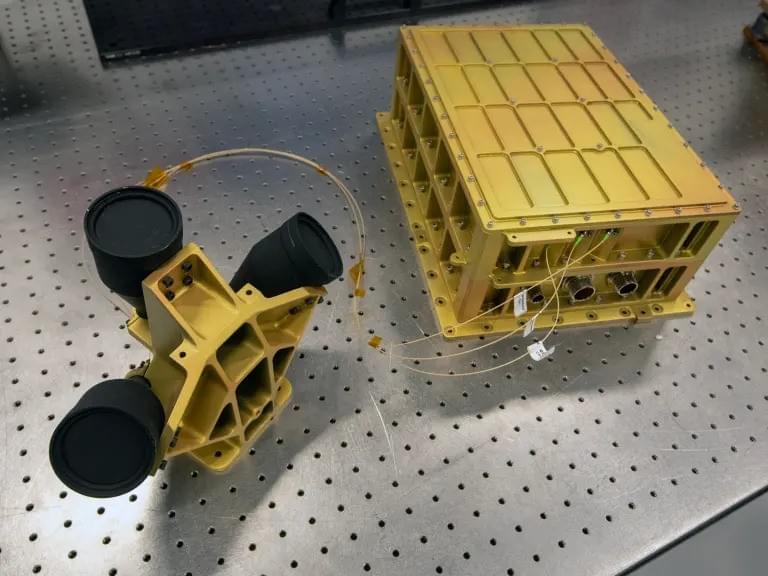

Read about NASA’s new instrument for landing on other worlds!

Landing on planetary bodies is both risky and hard, and landing humans is even riskier and harder. This is why technology needs to be developed to mitigate the risks associated with landing large spacecraft on the Moon and other planetary bodies we plan to continue exploring, in both the near and distant future. This is what makes the Nova-C lunar lander from Intuitive Machines, also referred to as Nova-C (IM-1) and scheduled to launch to the Moon on February 13, so vital to returning humans to the Moon. One of its NASA science payloads will be the Navigation Doppler Lidar (NDL), which will serve as a technology demonstration for future landers to help them navigate risky terrain and land safely.

Image of the Navigation Doppler Lidar, which will be a technology demonstration during the IM-1 mission. (Credit: NASA/David C. Bowman)

When NASA was landing robots on Mars in the 1990s and 2000s, its engineers discovered that radar and radio waves were insufficient for accurate landing measurements, so they had to come up with their own plan to land spacecraft on extraterrestrial worlds.

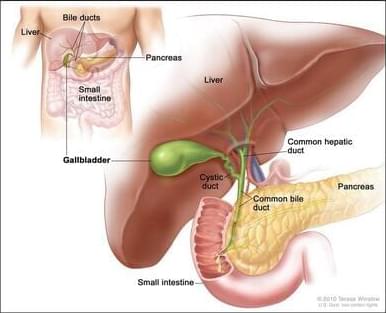

A healthy liver is capable of completely regenerating itself. Researchers from Heinrich Heine University Düsseldorf (HHU), University Hospital Düsseldorf (UKD) and the German Diabetes Center (DDZ) have now identified the growth factor MYDGF (Myeloid-Derived Growth Factor), which is important for this regenerative capacity.

In cooperation with the Hannover Medical School and the University Medical Center Mainz, they also showed that higher levels of MYDGF can be detected in the blood of patients following partial removal of the liver.

In a study published in Nature Communications, they also report that this growth factor stimulates the proliferation of human hepatocytes in a tissue culture.

A new test invented by University of Illinois Chicago researchers allows dentists to screen for the most common form of oral cancer with a simple and familiar tool: the brush.

The diagnostic kit, created and patented by Guy Adami and Dr. Joel Schwartz of the UIC College of Dentistry, uses a small brush to collect cells from potentially cancerous lesions inside the mouth. The sample is then analyzed for genetic signals of oral squamous cell carcinoma, the ninth most prevalent cancer globally.

This new screening method, for which commercialization partnerships are currently being sought, improves upon the current diagnostic standard of surgical biopsies, an extra referral step that risks losing patients, who sometimes don’t return until the cancer progresses to more advanced, hard-to-treat stages.