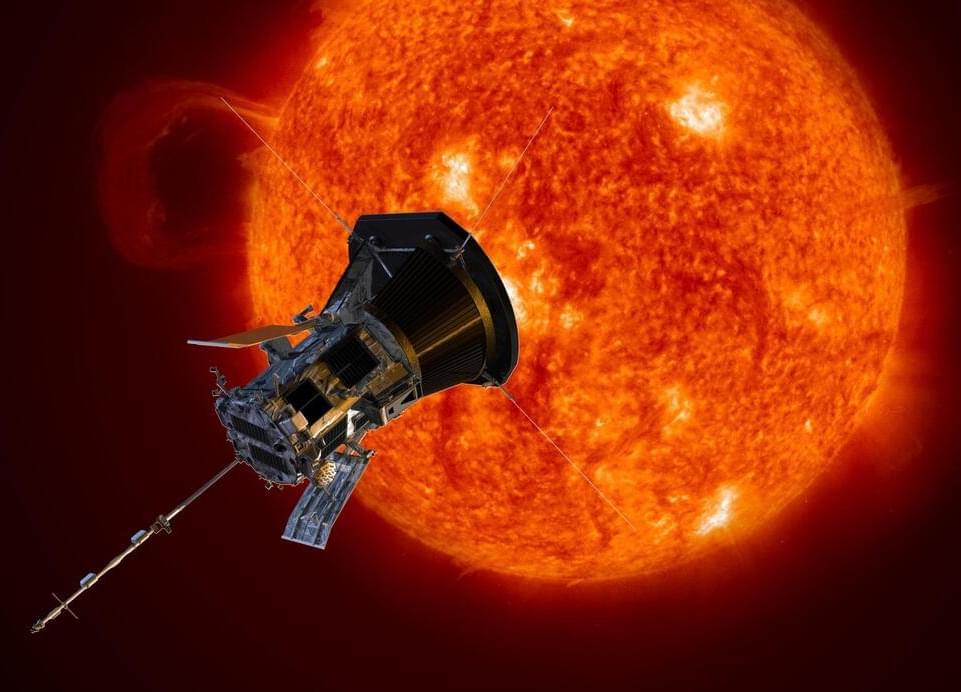

NASA’s Parker Solar Probe is crashing through a hailstorm of dust as it hurtles towards the sun at awe-inspiring speed.

The probe’s team members found that high-speed impacts with dust particles are not only more common than expected but are also creating tiny plumes of superhot plasma on the surface of the craft, according to an announcement for a new study.

The probe’s main mission goals are to measure the electric and magnetic fields near the sun and to learn more about the solar wind, the stream of particles flowing off the sun, says David Malaspina, a space plasma physicist at the University of Colorado Boulder Astrophysical and Planetary Sciences Department and Laboratory for Atmospheric and Space Physics. Malaspina led the study, which the team will present at a conference this week.