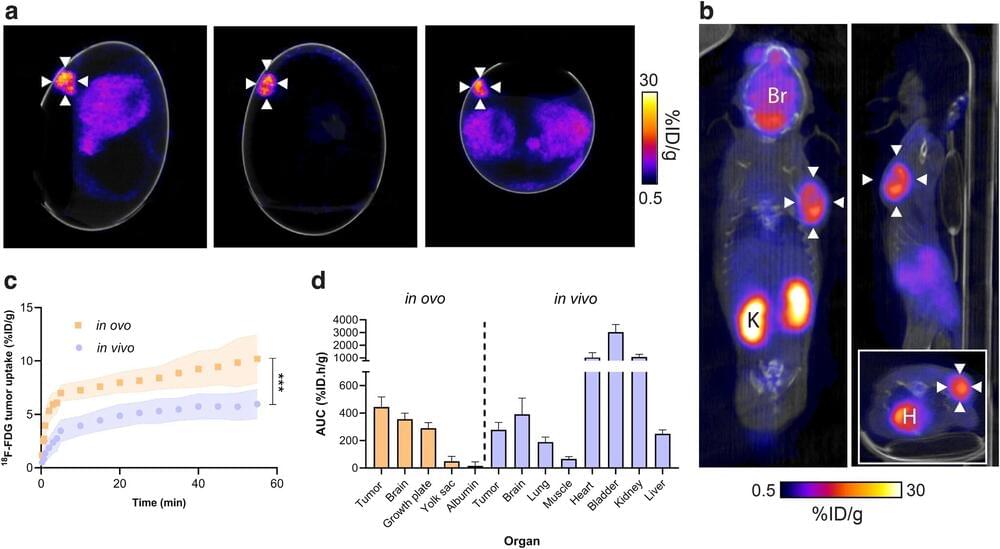

In a paper published in npj Imaging, King’s researchers have assessed the use of fertilized chicken eggs as an alternative model that can resolve both ethical and economic issues for preclinical cancer research.

The use of animal models in cancer research is a major contributor to the clinical development of drugs and diagnostic imaging. However, while mouse models are invaluable tools, relying on them as the current standard for recreating diseases is expensive, time-intensive, and complicated by both variable tumor take rates and the associated welfare considerations.

Fertilized chicken eggs contain a highly vascularized membrane, known as the chorioallantoic membrane (CAM), which can provide an ideal environment for tumor growth and study. To date, however, relatively few studies have used the chick CAM to evaluate novel radiopharmaceuticals.