The Quantum Insider (TQI) is the leading online resource dedicated exclusively to Quantum Computing.

Dr. David Cohen comments on 10-year results from a trial of transcatheter vs. surgical aortic valve replacement:

Over the past decade, transcatheter aortic valve replacement (TAVR) has evolved from a niche procedure to treat severe aortic stenosis in high-risk patients to a mainstream procedure that is also performed in intermediate- and low-risk patients. With this evolution in practice, the large number of younger patients with life expectancies of more than 10 years now receiving TAVR has raised concerns about its durability and patients' long-term outcomes. Now, 10-year results are available from the NOTION trial of TAVR versus surgical aortic valve replacement (SAVR) that was conducted between 2009 and 2013 (NEJM JW Cardiol May 29, 2015 and J Am Coll Cardiol 2015; 65:2184).

Two hundred eighty patients aged 70 years or older (mean age, 79 years; mean predicted risk of surgical mortality, 3%) were randomized to SAVR using any commercially available bioprosthesis or to TAVR using the first-generation self-expanding CoreValve device. At 10-year follow-up, there was no significant between-group difference in the composite of death, stroke, or myocardial infarction (66% in both groups) or in any of its individual components. Rates of bioprosthetic valve failure and repeat valve intervention were also similar. However, the rate of bioprosthetic valve dysfunction was lower with TAVR, largely reflecting lower rates of patient–prosthesis mismatch. The rate of structural valve deterioration was also lower with TAVR, driven mainly by lower transvalvular gradients that emerged early and persisted throughout follow-up.

Although limited by its modest sample size, the NOTION trial provides the longest available follow-up of any TAVR-versus-SAVR randomized trial to date. Overall, the results provide reassurance that there are no important differences in major clinical outcomes between the two strategies, and the echocardiographic data suggest sustained differences in hemodynamic performance in favor of TAVR. Nonetheless, given the advanced age of the patients at the time of enrollment, we should be cautious in extrapolating these findings to younger patients with severe aortic stenosis or to patients with bicuspid aortic valve disease (who were excluded from NOTION). These findings emphasize the tension between ongoing innovation and the desire for long-term outcomes data for our cardiac devices.

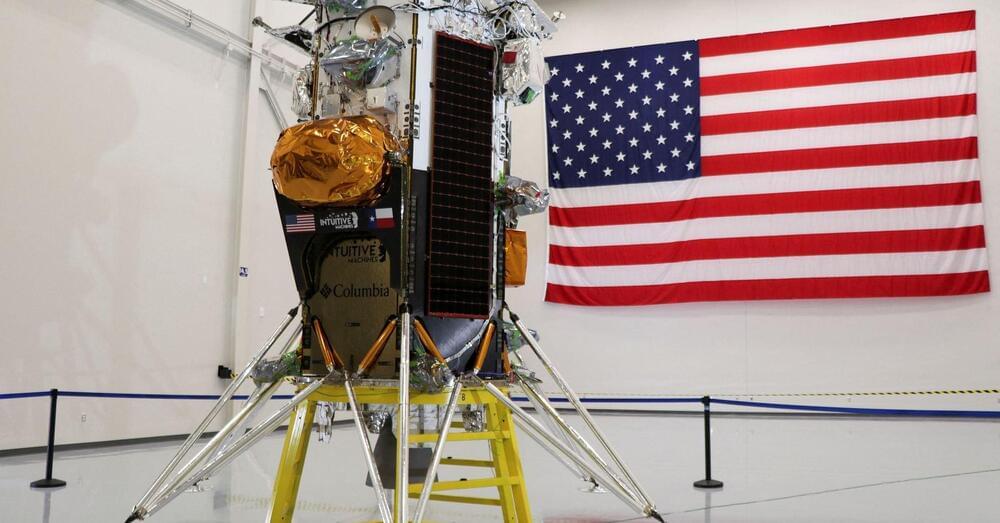

Shares of Intuitive Machines, which fell for two straight sessions before the landing, were among the top trending stocks on retail trader platform Stocktwits on Friday.

More than 200 million shares traded in February, exceeding the volume of the previous two years combined, according to LSEG data.

Ghaffarian, who is also the co-founder of Axiom Space, is on the board of other space organizations and has held numerous technical and management positions at Lockheed Martin, Ford Aerospace and Loral.