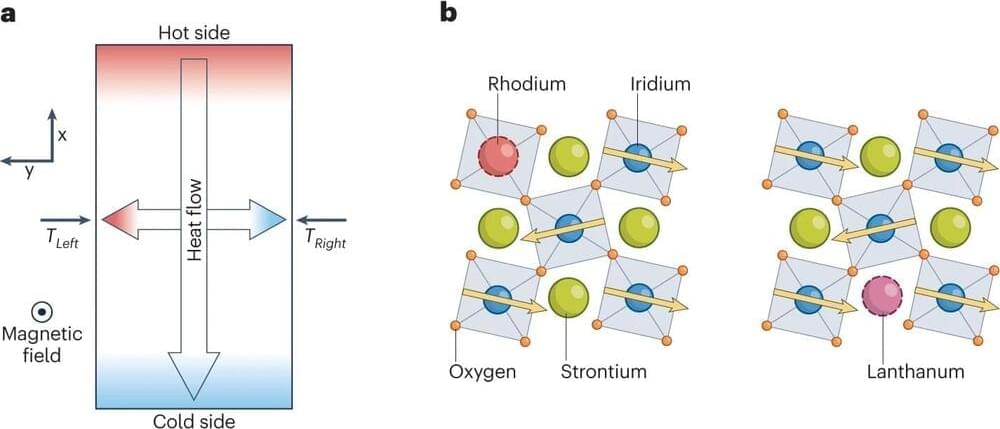

The thermal Hall effect (THE) is a physical phenomenon in which a tiny transverse temperature difference appears across a material when a heat current passes through it and a magnetic field is applied perpendicular to that current. This effect has been observed in a growing number of insulators, yet its underlying physics remains poorly understood.

Researchers at Université de Sherbrooke in Canada have been trying to identify the mechanism behind this effect in different materials. Their most recent paper, published in Nature Physics, examined it in the antiferromagnetic insulator strontium iridium oxide (Sr₂IrO₄).

“Our current research activity on the THE in insulators started with our discovery of a large THE in cuprate superconductors,” Louis Taillefer, co-author of the paper, told Phys.org.