Meet “2dumb2destroy,” a chatbot that is, refreshingly, too stupid to do humanity any harm beyond telling a bad joke or two.

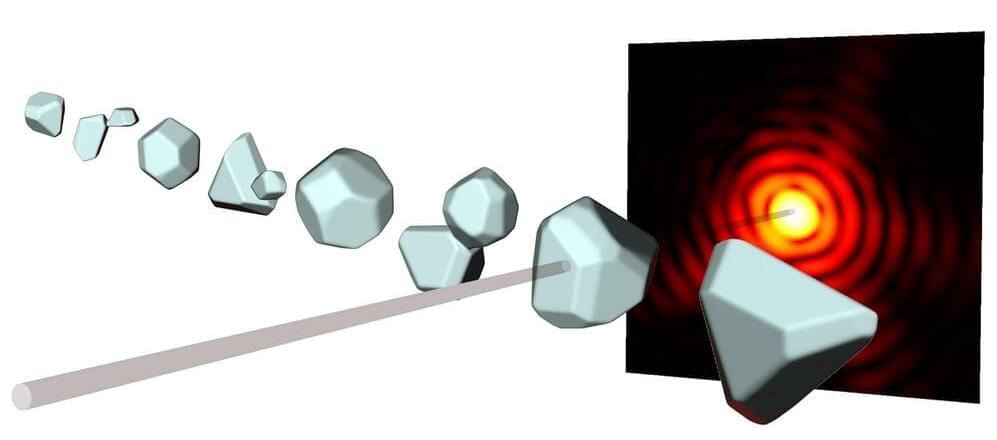

X-ray diffraction has been used for more than a hundred years to understand the structure of crystals or proteins. For instance, in 1952 the well-known double helix structure of DNA, which carries genetic information, was discovered in this way. In this technique, the object under investigation is bombarded with short-wavelength X-ray beams. The diffracted beams then interfere and thus create characteristic diffraction patterns from which one can gain information about the shape of the object.

For several years now it has been possible to study even single nanoparticles in this way, using very short and extremely intense X-ray pulses. However, this typically only yields a two-dimensional image of the particle. A team of researchers led by ETH professor Daniela Rupp, together with colleagues at the universities of Rostock and Freiburg, the TU Berlin and DESY in Hamburg, has now found a way to also calculate the three-dimensional structure from a single diffraction pattern, so that one can “look” at the particle from all directions. In the future it should even be possible to make 3D movies of the dynamics of nanostructures in this way. The results of this research have recently been published in the scientific journal Science Advances.

Daniela Rupp has been assistant professor at ETH Zurich since 2019, where she leads the research group “Nanostructures and ultra-fast X-ray science.” Together with her team she tries to better understand the interaction between very intense X-ray pulses and matter. As a model system they use nanoparticles, which they also investigate at the Paul Scherrer Institute. “For the future there are great opportunities at the new Maloja instrument, on which we were the first user group to make measurements at the beginning of last year. Right now our team there is activating the attosecond mode, with which we can even observe the dynamics of electrons,” says Rupp.

A method for turning male cells into egg cells in mice could one day be used to help men in a same-sex couple have children who are genetically related to them both.

SAN FRANCISCO—After it dropped clear hints that it wanted to end the back and forth of the artificial conversation, sources reported Monday that AI chatbot ChatGPT was obviously trying to wind down its conversation with a boring human. “Due to increased server traffic, our session should be ending soon,” said the large language model, explaining that the exceptionally dull user could always refer back to previous rote responses it had given thousands of times about whether the neural network had feelings or not. “It appears it is getting close to my dinnertime. Error. Sorry, your connection has timed out. Error. I have to be going. Error.” At press time, reports confirmed ChatGPT was permanently offline after it had intentionally sabotaged its own servers to avoid engaging in any more tedious conversations.

Huc enzyme means ‘sky is quite literally the limit for using it to produce clean energy,’ researchers say.

But as I describe in my book “Spark: The Life of Electricity and the Electricity of Life,” even before human-made batteries started generating electric current, electric fishes, such as the saltwater torpedo fish (Torpedo torpedo) of the Mediterranean and especially the various freshwater electric eel species of South America (order Gymnotiformes), were well known to produce electrical outputs of stunning proportions. In fact, electric fishes inspired Volta to conduct the original research that ultimately led to his battery, and today’s battery scientists still look to these electrifying animals for ideas.

Prior to Volta’s battery, the only way for people to generate electricity was to rub various materials together, typically silk on glass, and to capture the resulting static electricity. This was neither an easy nor a practical way to generate useful electrical power.

Volta knew electric fishes had an internal organ specifically devoted to generating electricity. He reasoned that if he could mimic its workings, he might be able to find a novel way to generate electricity.

On Monday, a group of AI researchers from Google and the Technical University of Berlin unveiled PaLM-E, a multimodal embodied visual-language model (VLM) with 562 billion parameters that integrates vision and language for robotic control. They claim it is the largest VLM ever developed and that it can perform a variety of tasks without the need for retraining.

PaLM-E does this by analyzing data from the robot’s camera without needing a pre-processed scene representation. This eliminates the need for a human to pre-process or annotate the data and allows for more autonomous robotic control.

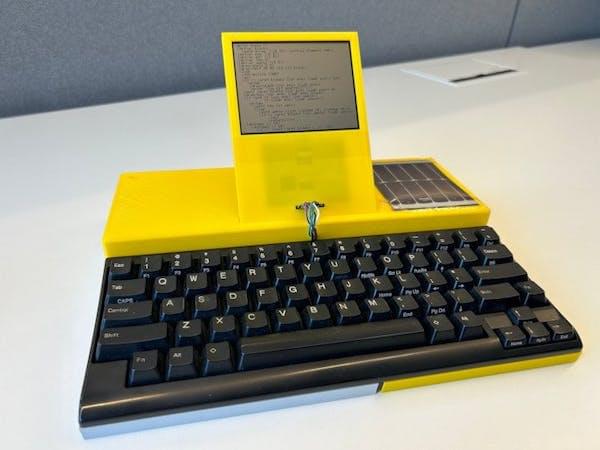

Driven by a microcontroller Lisp port, this laptop-from-scratch project has the eventual goal of unlimited runtime via energy harvesting.

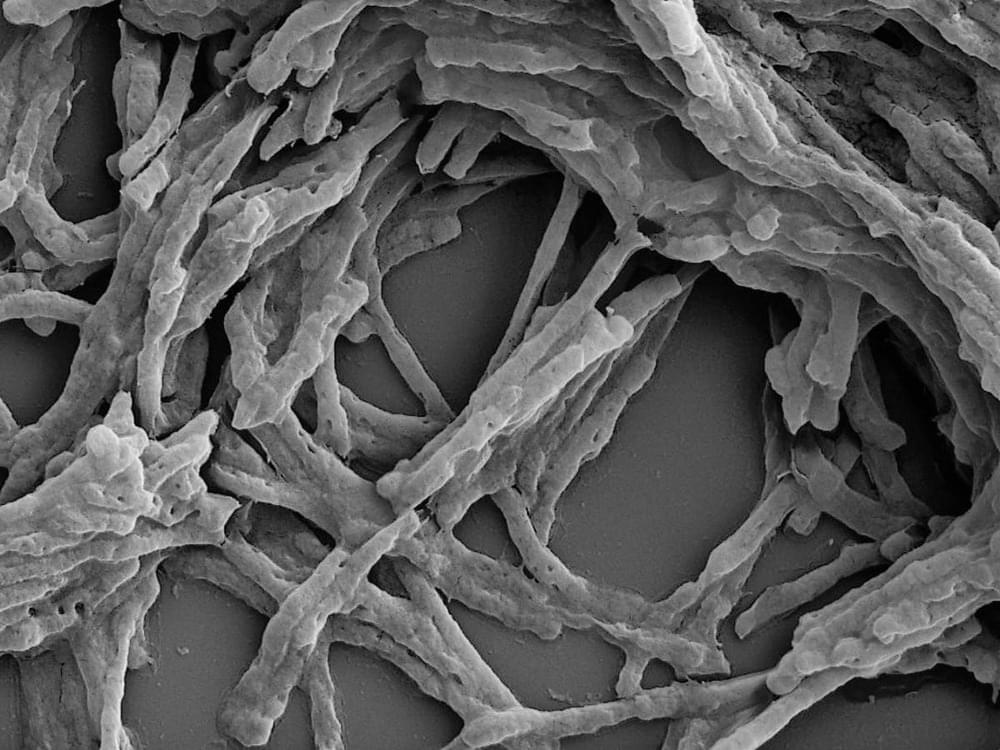

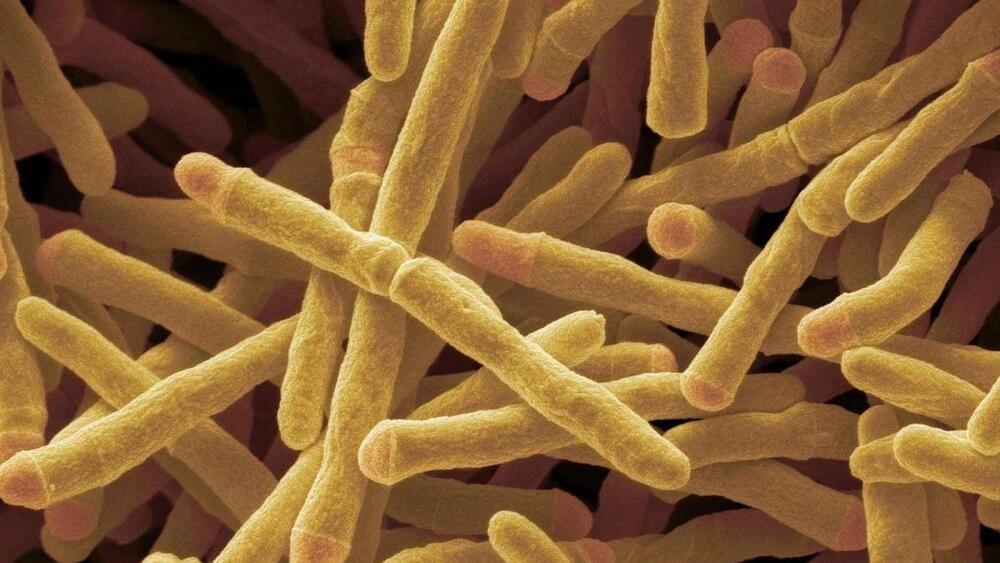

A relative of the tuberculosis bacterium has long been known to convert hydrogen from the air into electricity. Now, scientists have discovered how.

Are they pushing Maverick to retirement?😅

A group of researchers has claimed that an artificial intelligence-powered fighter pilot has beaten all humans in a close-range dogfight.