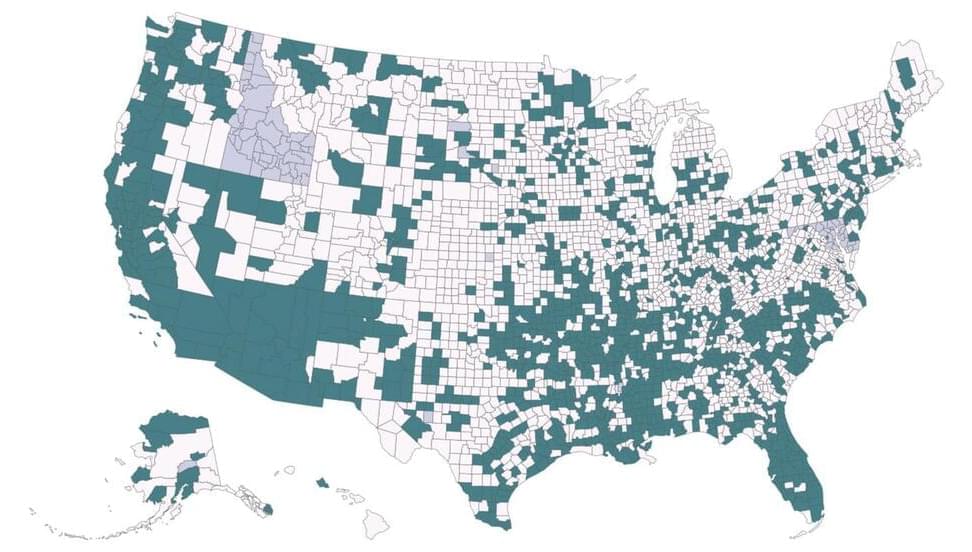

Cases of leprosy have increased in Florida and the southeastern United States over the last decade, according to a new report.

Leprosy, officially called Hansen’s disease, is a rare type of bacterial infection that attacks the nerves and can cause swelling under the skin. The new research paper, published in the Centers for Disease Control and Prevention’s Emerging Infectious Diseases journal, found that reported cases doubled in the Southeast over the last 10 years.

Central Florida in particular has seen a disproportionate share of cases, which suggests the disease may be endemic there. That would mean leprosy has a consistent presence in the region’s population rather than appearing only in one-off outbreaks.