Rigetti has launched its fourth-generation architecture with a single-chip 84-qubit quantum processor that can scale to larger systems.

A comprehensive new study provides evidence that various personality traits and cognitive abilities are connected. This means that if someone is good at a certain cognitive task, it can give hints about their personality traits, and vice versa.

For example, being skilled in math could indicate having a more open-minded approach to new ideas, but might also be associated with lower levels of politeness. These connections can help us understand why people are different in how they think and act.

The research has been published in the Proceedings of the National Academy of Sciences.

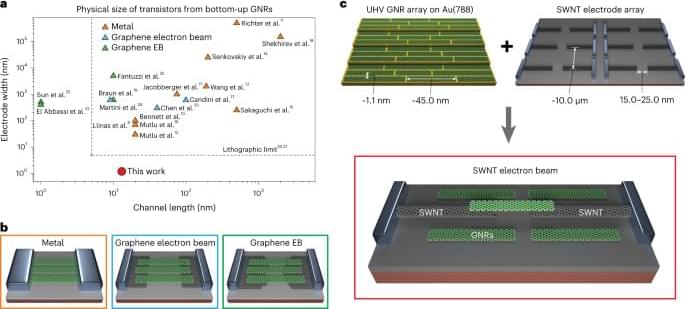

Individual graphene nanoribbons synthesized by an on-surface approach can be contacted with carbon nanotubes—with diameters as small as 1 nm—and used to make multigate devices that exhibit quantum transport effects such as Coulomb blockade and single-electron tunnelling.

Organoids have now been created from stem cells to secrete the proteins that form dental enamel, the substance that protects teeth from damage and decay. A multi-disciplinary team of scientists from the University of Washington in Seattle led this effort.

Organoids are the new thing. Think about how AI and nanotechnology changed the world we live in; years from now you will realize it, like all I have predicted since I played with a Kurzweil keyboard when I was a child.

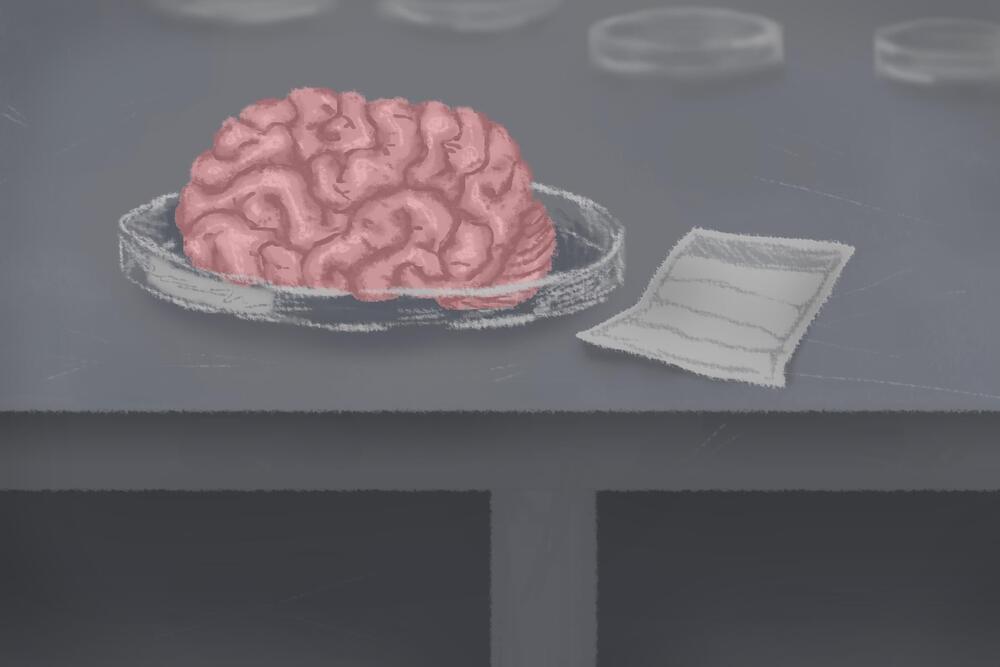

Last June, University of Michigan researchers published a study detailing a new method for making brain organoids, the miniature lab-grown brains used in neuroscience research. Previously, the most common method for creating human brain organoids relied on Matrigel, a substance made of cells from mouse sarcomas, to provide structure for the organoids. The new method instead uses an engineered extracellular matrix composed of human-derived proteins.

The lack of cells from other species in the new organoids means they more closely resemble actual human brains, opening up research possibilities on neurodegenerative diseases such as Alzheimer’s and Parkinson’s. U-M alum Ayse Muñiz, who worked on the research as part of her Ph.D. thesis while at the University, said in an interview with The Michigan Daily that having organoids with only human cells is advantageous for translational research — the process of turning knowledge from lab research into something with real-world applications.

“When you’re doing translational research, having contamination from other species will limit your ability to translate this into the clinic,” Muñiz said. “The presence of other species basically elicits immunogenic responses, and can just be a limitation for scale and other things like that. And so here now that you’ve taken that out, it makes the path to translation a lot easier.”

I first posted about organoids for the heart because I have a family member with heart problems, and I know how much of a game changer the innovation is. I like to think of myself as a futurist, and being ahead of most everyone. My post about Mastodon proves such…oh wait, someone else posted it, and Threads uses the same ActivityPub protocol that Mastodon does. I should get Him to repost it if I know Him 🙄, while as if I thought it. Let us always embrace the future, and futurists. 😁

For over a century, scientists have dreamed of growing human organs sans humans. This technology could put an end to the scarcity of organs for transplants. But that’s just the tip of the iceberg. The capability to grow fully functional organs would revolutionize research. For example, scientists could observe mysterious biological processes, such as how human cells and organs develop a disease and respond (or fail to respond) to medication without involving human subjects.

As described in the aforementioned Nature paper, Żernicka-Goetz and her team mimicked the embryonic environment by mixing these three types of stem cells from mice. Amazingly, the stem cells self-organized into structures and progressed through the successive developmental stages until they had beating hearts and the foundations of the brain.

“Our mouse embryo model not only develops a brain, but also a beating heart [and] all the components that go on to make up the body,” said Żernicka-Goetz. “It’s just unbelievable that we’ve got this far. This has been the dream of our community for years and major focus of our work for a decade and finally we’ve done it.”

If the methods developed by Żernicka-Goetz’s team are successful with human stem cells, scientists someday could use them to guide the development of synthetic organs for patients awaiting transplants. It also opens the door to studying how embryos develop during pregnancy.

Behind the hype of the Google engineer who claimed an AI was ‘sentient’ is a history nearly as old as computers themselves.

Blog post with audio player, show notes, and transcript: https://www.preposterousuniverse.com/podcast/2020/01/06/78-d…fic-image/

Patreon: https://www.patreon.com/seanmcarroll.

Wilfrid Sellars described the task of philosophy as explaining how things, in the broadest sense of the term, hang together, in the broadest sense of the term. (Substitute “exploring” for “explaining” and you’d have a good mission statement for the Mindscape podcast.) Few modern thinkers have pursued this goal more energetically, creatively, and entertainingly than Daniel Dennett. Dennett is one of the most respected philosophers of our time, and his work has ranged over topics such as consciousness, artificial intelligence, metaphysics, free will, evolutionary biology, epistemology, and naturalism, always with an eye on our best scientific understanding of the phenomenon in question. His thinking in these areas is exceptionally lucid, and he has the rare ability to express his ideas in ways that non-specialists can find accessible and compelling. We talked about all of them, in a wide-ranging and wonderfully enjoyable conversation.

Daniel Dennett received his D.Phil. in philosophy from Oxford University. He is currently Austin B. Fletcher Professor of Philosophy and co-director of the Center for Cognitive Studies at Tufts University. He is known for a number of philosophical concepts and coinages, including the intentional stance, the Cartesian theater, and the multiple-drafts model of consciousness. Among his honors are the Erasmus Prize, a Guggenheim Fellowship, and the American Humanist Association’s Humanist of the Year award. He is the author of a number of books that are simultaneously scholarly and popular, including Consciousness Explained, Darwin’s Dangerous Idea, and most recently From Bacteria to Bach and Back.

Blog post with show notes, audio player, and transcript: https://www.preposterousuniverse.com/podcast/2018/12/03/epis…imulation/

Patreon: https://www.patreon.com/seanmcarroll.

The “Easy Problems” of consciousness have to do with how the brain takes in information, thinks about it, and turns it into action. The “Hard Problem,” on the other hand, is the task of explaining our individual, subjective, first-person experiences of the world. What is it like to be me, rather than someone else? Everyone agrees that the Easy Problems are hard; some people think the Hard Problem is almost impossible, while others think it’s pretty easy. Today’s guest, David Chalmers, is arguably the leading philosopher of consciousness working today, and the one who coined the phrase “the Hard Problem,” as well as proposing the philosophical zombie thought experiment. Recently he has been taking seriously the notion of panpsychism. We talk about these knotty issues (about which we deeply disagree), but also spend some time on the possibility that we live in a computer simulation. Would simulated lives be “real”? (There we agree — yes they would.)

David Chalmers got his Ph.D. from Indiana University working under Douglas Hofstadter. He is currently University Professor of Philosophy and Neural Science at New York University and co-director of the Center for Mind, Brain, and Consciousness. He is a fellow of the Australian Academy of Humanities, the Academy of Social Sciences in Australia, and the American Academy of Arts and Sciences. Among his books are The Conscious Mind: In Search of a Fundamental Theory, The Character of Consciousness, and Constructing the World. He and David Bourget founded the PhilPapers project.

Sabine Hossenfelder, Rupert Sheldrake and Bjørn Ekeberg go head to head on consciousness, panpsychism, physics and dark matter.

Watch more fiery content at https://iai.tv?utm_source=YouTube&utm_medium=description&utm…e-universe.

“Not only is the universe stranger than we think. It is stranger than we can think.” So argued Niels Bohr, one of the founders of quantum theory. We imagine our theories uncover how things are but, from quantum particles to dark matter, at fundamental levels the closer we get to what we imagine to be reality the stranger and more incomprehensible it appears to become.

Might science and philosophy one day stretch to meet the universe’s strangeness? Or is the universe not so strange after all? Or should we give up the idea that we can uncover the essential character of the world, and with Bohr conclude that the strangeness of the universe and the quantum world transcend the limits of the human mind?

#DarkMatter #RupertSheldrake #SabineHossenfelder.

Influential scientist Rupert Sheldrake, prominent physicist Sabine Hossenfelder and esteemed philosopher Bjørn Ekeberg get to grips with whether the universe is stranger than we can imagine. Johnjoe McFadden hosts.