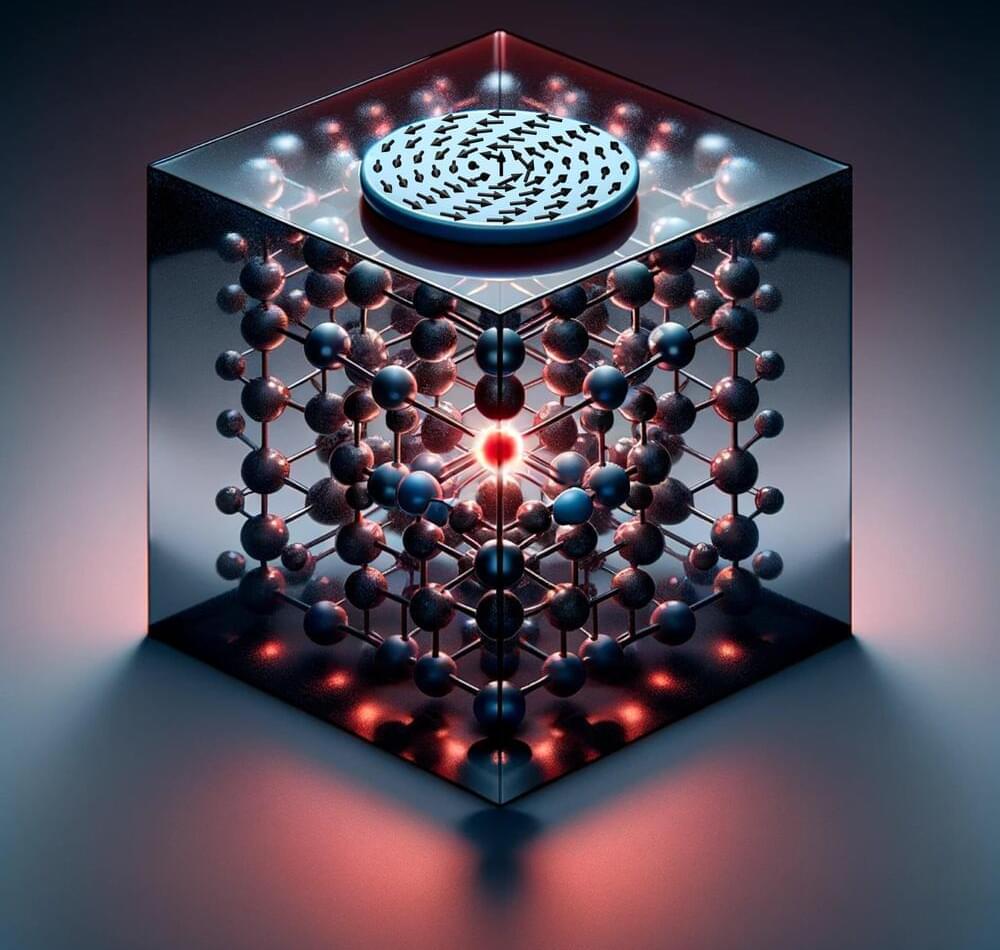

A superfluid vortex controlled in a lab is helping physicists learn more about the behavior of black holes.

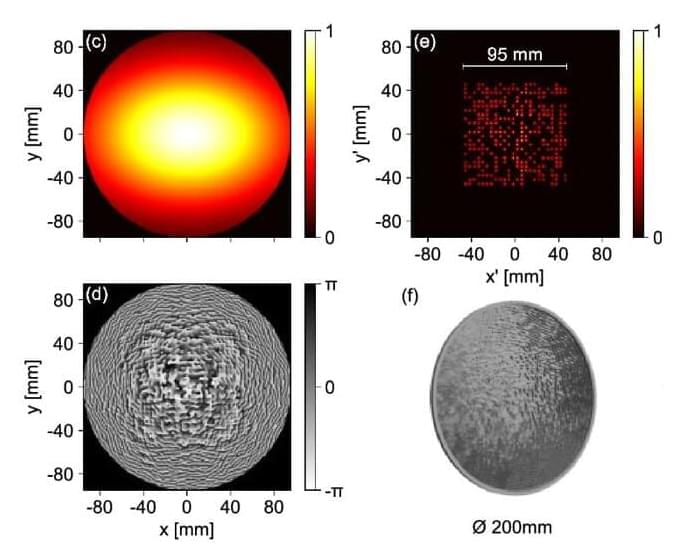

A whirlpool generated in helium cooled to a fraction of a degree above absolute zero mimics the gravitational environment of black holes with such precision that it is giving unprecedented insight into how they drag and warp the space-time around them.

“Using superfluid helium has allowed us to study tiny surface waves in greater detail and accuracy than with our previous experiments in water,” explains physicist Patrik Švančara of the University of Nottingham in the UK, who led the research.