We’ve seen a lot about large language models in general, and much of it has been elucidated at this conference, but many of the speakers have their own personal takes on how these models work and what they can do!

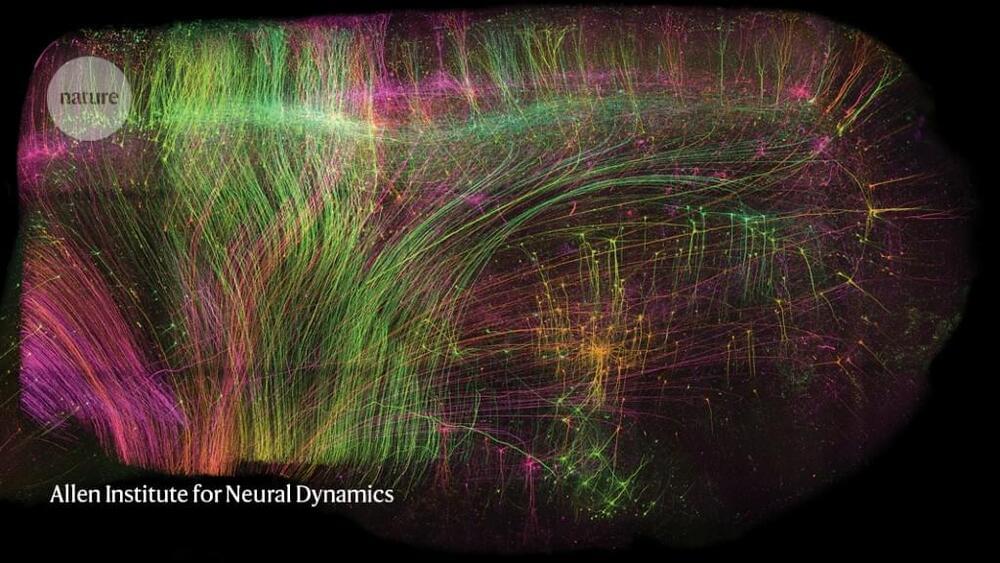

For example, here we have Yoon Kim talking about statistical objects, and about how neural networks (transformer-based neural networks in particular) can put next-word prediction to versatile use. He illustrates the point with a sentence about MIT and its location:

“You might have a sentence like: ‘the Massachusetts Institute of Technology is a private land grant research university’ … and then you train this language model (around it),” he says. “Again, (it takes) a large neural network to predict the next word, which, in this case, is ‘Cambridge.’ And in some sense, to be able to accurately predict the next word, it does require this language model to store knowledge of the world, for example, that must store factoid knowledge, like the fact that MIT is in Cambridge. And it must store … linguistic knowledge. For example, to be able to pick the word ‘Cambridge,’ it must know what the subject, the verb and the object of the preceding or the current sentence is. But these are, in some sense, fancy autocomplete systems.”
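
To make the "fancy autocomplete" idea concrete, here is a minimal sketch of next-word prediction in Python. It is not the transformer-based approach Kim describes: it is a toy bigram counter that looks only at the single preceding word, and the corpus, `train_bigram`, and `predict_next` are all hypothetical names invented for illustration.

```python
from collections import Counter, defaultdict

# Toy corpus, made up for illustration; a real model trains on vastly more text.
CORPUS = [
    "mit is located in cambridge",
    "mit is located in cambridge massachusetts",
    "harvard is located in cambridge",
    "stanford is located in california",
]


def train_bigram(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, prev_word):
    """Return the most frequent follower of prev_word, or None if unseen."""
    followers = counts.get(prev_word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


model = train_bigram(CORPUS)
print(predict_next(model, "in"))  # most common word after "in" in the toy corpus
```

Because this sketch conditions on one word only, it cannot tell a sentence about MIT from a sentence about Stanford. That gap is roughly what Kim is pointing at: to pick the right word reliably, a model has to take the whole preceding context into account, which is where the stored factual and linguistic knowledge comes in.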