When US biochemist Jennifer Doudna discovered the key for editing DNA, she opened scientific frontiers full of promise – and peril.

Quantum information theorists have turned Wigner’s friend into a powerful set of thought experiments for testing the plausibility of physical assumptions we make when we share information. These elaborated thought experiments involve multiple participants in multiple labs, entangled quantum states between friends and real-life entangled photon experiments to smoke out what our classical assumptions are.

Is there a fork in the road, classical or quantum? Sticking with the classical interpretation, in which Wigner’s friend involves two inconsistent descriptions of one state of affairs, produces paradoxes. The quantum perspective implies that there are descriptions of two different states of affairs. The first is intuitive but ends in contradiction; the second is less intuitive but consistent. Quantum friendship means never having to say you’re sorry for your use of the formalism.

Robert P Crease is a professor, Jennifer Carter is a lecturer and Gino Elia is a PhD student, all in the Department of Philosophy, Stony Brook University, US.

The new map includes around 1.3 million quasars from across the visible universe and could help scientists better understand the properties of dark matter.

Astronomers have charted the largest-ever volume of the universe with a new map of active supermassive black holes living at the centers of galaxies. Called quasars, the gas-gobbling black holes are, ironically, some of the universe’s brightest objects.

The new map logs the location of about 1.3 million quasars in space and time, the furthest of which shone bright when the universe was only 1.5 billion years old. (For comparison, the universe is now 13.7 billion years old.)

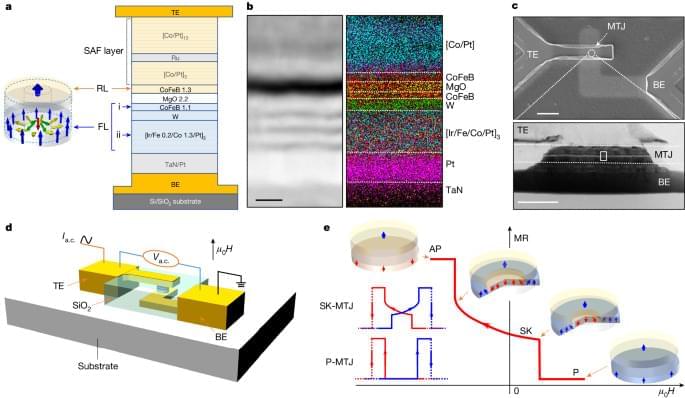

Chinese researchers are making variations of LK-99 room-temperature superconductor materials with more sulfur and copper in the chemistry. They are publishing results with stronger magnetic indications of a Meissner effect.

The Chinese researchers have been discussing their room-temperature superconductivity research and the challenges of the materials online.

The field of aging research has made significant progress over the last three decades, reaching a stage where we now understand the underlying mechanisms of the aging process. Moreover, the knowledge has broadened to include techniques that quantify aging, slow it and, in some cases, reverse it.

To date, twelve hallmarks of aging have been identified; these include reduced mitochondrial function, loss of stem cells, increased cellular senescence, telomere shortening, and impaired protein and energy homeostasis. Biomarkers of aging help to understand age-related changes, track the physiological aging process and predict age-related diseases [1].

Longevity.Technology: Biological information is stored in two main ways: the genome, consisting of nucleic acids, and the epigenome, consisting of chemical modifications to the DNA as well as histone proteins. However, biological information can be lost over time or disrupted by cell damage. How can this loss be overcome? In the 1940s, American mathematician and communications engineer Claude Shannon came up with a neat solution to prevent the loss of information in communications, introducing an ‘observer’ that would help to ensure that the original information survives and is transmitted [2]. Can these ideas be applied to aging?

Whether in listening to music or pushing a swing in the playground, we are all familiar with resonances and how they amplify an effect—a sound or a movement, for example. However, in high-intensity circular particle accelerators, resonances can be an inconvenience, causing particles to fly off their course and resulting in beam loss. Predicting how resonances and non-linear phenomena affect particle beams requires some very complex dynamics to be disentangled.