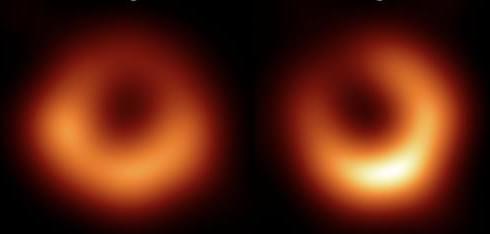

This year marks the fifth anniversary of the release of the first-ever image of a black hole, which revealed the glowing doughnut of the supermassive black hole called M87*. The research team that produced the image, the Event Horizon Telescope (EHT) Collaboration, recently released a second image of that same black hole, which lies 55 million light-years from Earth [1]. The new image comes from an updated version of the EHT. It confirms key features of the black hole and also reveals changes over time in the pattern of light emanating from the disk surrounding the object. Starting with this release, the collaboration expects to issue increasingly frequent updates in support of the newly developing field of black hole imaging.

“Producing the first image of M87* was a herculean effort and involved creating, testing, and verifying many different schemes and approaches to analyzing and interpreting the data,” says Princeton University astrophysicist Andrew Chael, a member of the EHT Collaboration. “Now we are beginning to transition to a point where we understand our instrument and our analysis frameworks really well, so I think we are going to be releasing results a lot more quickly.”

Supermassive black holes are extremely distant and compact objects, two properties that make them extraordinarily difficult to image. For example, M87* appears no larger in the sky than an orange on the Moon would appear from Earth. The 2019 image of M87* was pieced together using data collected in April 2017 from eight radio telescopes spread across the globe. All the telescopes in that array collected data simultaneously, allowing scientists to treat them as one giant radio-wave detector. The larger a radio telescope (or, for an array, the farther apart its dishes), the finer the angular detail it can resolve, and an Earth-sized detector made it possible to resolve sources as tiny on the sky as supermassive black holes. So far, the EHT has imaged M87* and Sagittarius A*, the black hole at the center of the Milky Way (see Research News: First Image of the Milky Way’s Black Hole).
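The link between array size and image sharpness can be checked with a rough back-of-envelope estimate. This is an illustration added here, not a calculation from the article. It uses the diffraction limit θ ≈ λ/D (ignoring order-unity factors) and assumes an observing wavelength of about 1.3 mm, the wavelength at which the EHT operates, and a longest baseline D equal to Earth's diameter. It then compares the result with the small-angle size of an orange, assumed here to be about 8 cm across, placed at the Moon's mean distance. The constant and function names are hypothetical and chosen only for this sketch.

```python
import math

# Assumed, approximate inputs chosen for illustration (not taken from the article):
WAVELENGTH_M = 1.3e-3        # EHT observing wavelength, ~1.3 mm (~230 GHz)
EARTH_DIAMETER_M = 1.2742e7  # longest possible baseline, roughly Earth's diameter
ORANGE_DIAMETER_M = 0.08     # a typical orange, ~8 cm across (illustrative)
MOON_DISTANCE_M = 3.844e8    # mean Earth-Moon distance

# Radians -> degrees -> arcseconds -> microarcseconds
RAD_TO_MICROARCSEC = math.degrees(1.0) * 3600 * 1e6


def diffraction_limit_uas(wavelength_m: float, baseline_m: float) -> float:
    """Approximate angular resolution theta ~ lambda / D, in microarcseconds."""
    return wavelength_m / baseline_m * RAD_TO_MICROARCSEC


def angular_size_uas(size_m: float, distance_m: float) -> float:
    """Small-angle approximation theta ~ size / distance, in microarcseconds."""
    return size_m / distance_m * RAD_TO_MICROARCSEC


resolution = diffraction_limit_uas(WAVELENGTH_M, EARTH_DIAMETER_M)
orange = angular_size_uas(ORANGE_DIAMETER_M, MOON_DISTANCE_M)

print(f"Earth-sized array at 1.3 mm: ~{resolution:.0f} microarcseconds")
print(f"Orange on the Moon:          ~{orange:.0f} microarcseconds")
```

With these assumed numbers, an Earth-sized array resolves roughly 20 microarcseconds, and an orange on the Moon spans roughly twice that. This is why a dish much smaller than the planet could not have resolved M87*. Real interferometric imaging depends on much more than this single ratio, including the actual baselines between sites and how densely they sample the sky, so treat the figures as order-of-magnitude only.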