Astronauts Butch Wilmore and Suni Williams blasted off on June 5 — the start of a test flight that was expected to last just a week or so.

SpaceX deployed Starlink satellites, and cameras aboard the Falcon 9 rocket’s second stage captured multiple views of the deployment. Credit: Space.com | footage courtesy: SpaceX | edited by Steve Spaleta | music: “New Age Solitude” by Philip Ayers, courtesy of Epidemic Sound.

From human intelligence collection to information gathered in the open, the CIA is using generative artificial intelligence across a wide swath of its intelligence-gathering mission today and plans to keep expanding that use, according to the agency’s AI lead.

The CIA has used AI since as far back as 2012, when the agency hired its first data scientists, for tasks such as content triage and “things in the human language technology space (translation, transcription), all the types of processing that need to happen in order to help our analysts go through that data very quickly,” Lakshmi Raman, the CIA’s director of AI, said during an on-stage keynote interview at the Amazon Web Services Summit on Wednesday in Washington, D.C.

AI, and generative AI in particular in recent years, has also been an important tool for triaging the CIA’s open-source intelligence collection, Raman said.

“If you look at the trajectory of improvement, GPT-3 was maybe toddler level intelligence, systems like GPT-4 are smart high schooler intelligence and in the next couple of years we’re looking at PhD level intelligence for specific tasks,” OpenAI CTO Mira Murati said during a talk at Dartmouth.

Some took this to suggest we’d be waiting two years for GPT-5, but I’m not convinced. Other OpenAI revelations point elsewhere: a graph showing ‘GPT-Next’ this year and ‘future models’ after that, and CEO Sam Altman refusing to mention GPT-5 in recent interviews.

The release of GPT-4o was a game changer for OpenAI: a model built from scratch to understand not just text and images but also native voice and vision. Although OpenAI hasn’t yet unleashed all of those capabilities, I think the power of GPT-4o has already led to big changes.

NVIDIA CEO Jensen Huang believes the AI frenzy will automate a whopping $50 trillion worth of companies, and he says Blackwell will play a dominant role in that shift.

NVIDIA Isn’t Taking Its Foot Off The AI Accelerator Pedal Any Time Soon, Plans To Take Blackwell’s Adoption To A Whole New Level

NVIDIA has undoubtedly captured a market that is set to grow rapidly. Every big tech firm, from Microsoft to Amazon, has been pulled into the “AI automation” race, and demand for adequate computing power is rising massively.

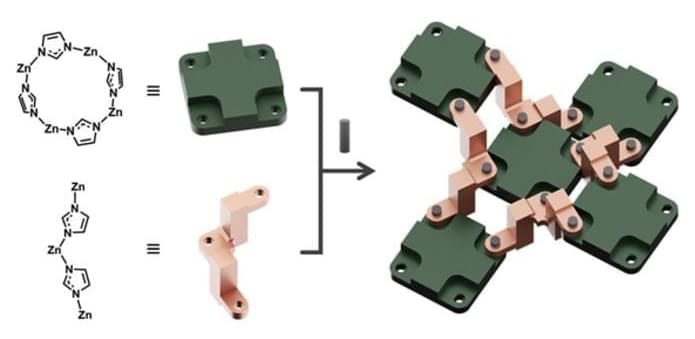

Machines have evolved to meet the demands of daily life and industry, and molecular-scale devices often offer enhanced functionality and mechanical motion. However, precisely controlling mechanical motion within solid-state molecular structures remains a significant challenge.

Researchers at the Ulsan National Institute of Science and Technology (UNIST) in South Korea have made a groundbreaking discovery that could pave the way for major advances in data storage and beyond. A team led by Professor Wonyoung Choe of UNIST’s Department of Chemistry has developed zeolitic imidazolate frameworks (ZIFs) that mimic intricate machines. These molecular-scale devices offer precise control over nanoscale mechanical movements, opening up new possibilities in nanotechnology.

The findings have been published in Angewandte Chemie International Edition (“Zeolitic Imidazolate Frameworks as Solid-State Nanomachines”).

This may be about as wildly entertaining, disruptive and philosophically profound as legitimate scientific research gets. Michael Levin’s work in cellular intelligence, bioelectrical communication and embodied minds “is going to overturn everything.”