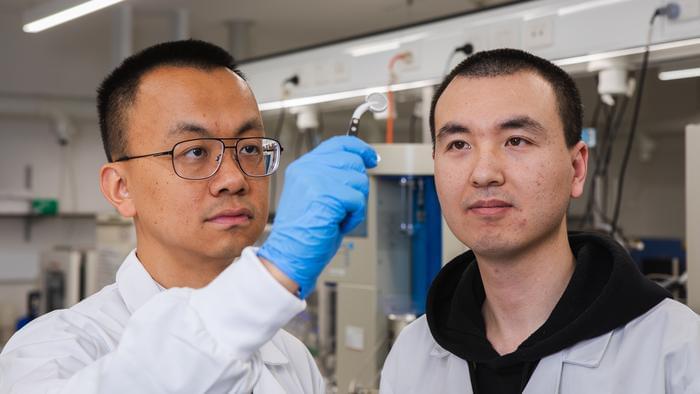

How can water-based batteries improve on lithium-ion energy storage technology? A series of studies published in Advanced Materials, Small Structures, Energy Storage Materials, and Energy & Environmental Science sets out to answer this question. In that work, an international team of researchers led by Liaoning University in China has developed recyclable aqueous batteries that will not catch fire or explode. The research could help scientists develop safer and more efficient water-based energy storage technologies for a cleaner future.

While lithium-ion batteries have proven reliable, they pose safety risks because of their flammable organic electrolytes, the liquids that carry charged ions between the electrodes. These electrolytes can cause batteries to catch fire or explode, which limits their use in large-scale applications. To solve this problem, the researchers replaced the organic electrolyte with water, which carries the ions between the battery's terminals and virtually eliminates the risk of fire or explosion.

“Addressing end-of-life disposal challenges that consumers, industry and governments globally face with current energy storage technology, our batteries can be safely disassembled, and the materials can be reused or recycled,” said Dr. Tianyi Ma, a team member and professor in the School of Science at RMIT University. “We use materials such as magnesium and zinc that are abundant in nature, inexpensive and less toxic than alternatives used in other kinds of batteries, which helps to lower manufacturing costs and reduces risks to human health and the environment.”